Argon

Before there was Raven, there was Argon: a NASA-developed, ground-based demonstration module that helped rapidly mature, as one integrated system, the individual sensors, algorithms, and system technologies a spacecraft would need to perform rendezvous and proximity operations (RPO) at multiple ranges.

How Argon Works

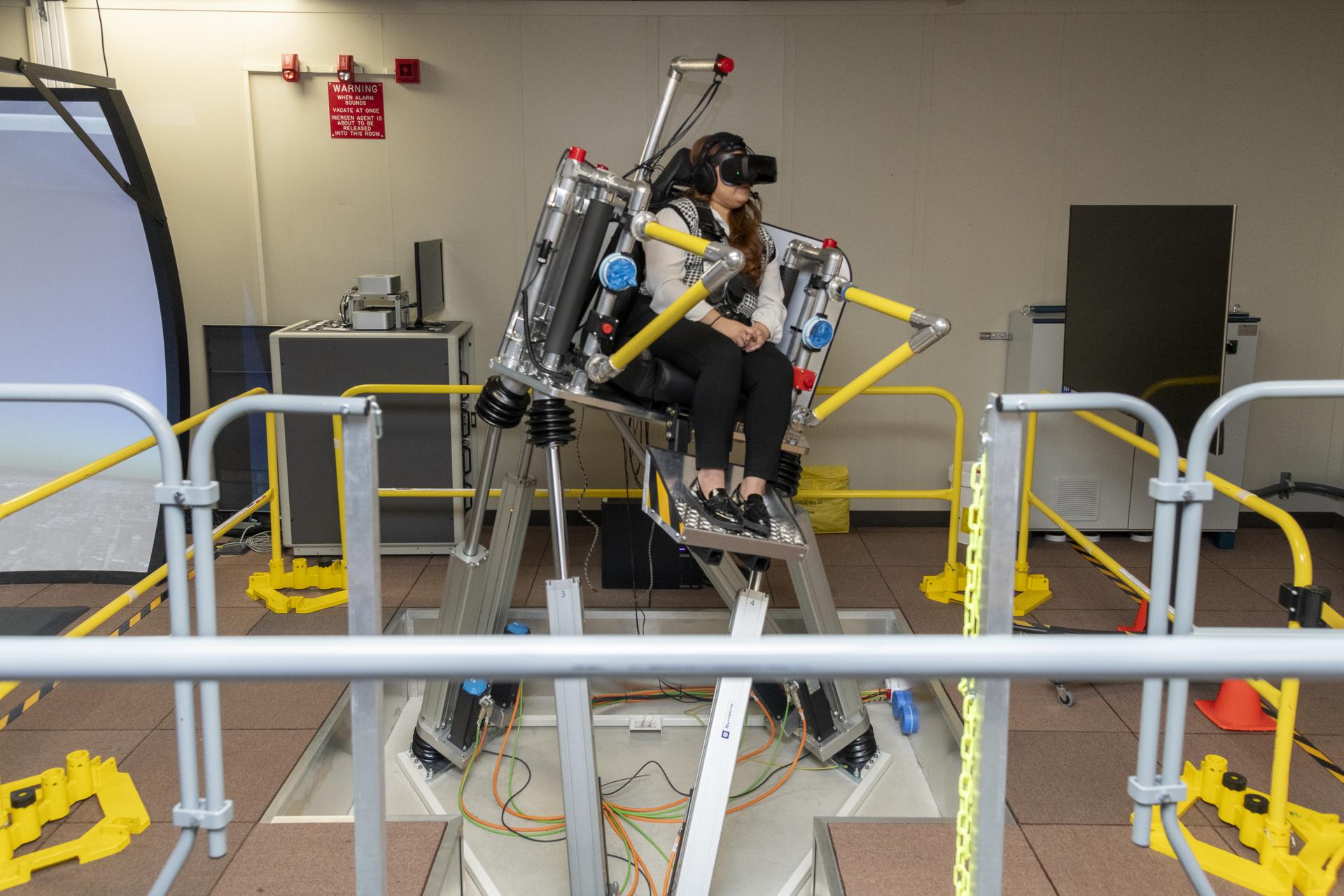

Argon integrated essential RPO components and unique algorithms into a system that autonomously imaged, visually captured, and tracked dynamic and static targets. Demonstrations at various ranges tested the components’ capabilities and confirmed that the system transitioned smoothly from one simulated servicing-mission phase to the next.
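One way to picture those smooth phase transitions is a range-driven phase selector with hysteresis. The sketch below is a minimal illustration, not Argon’s software. The phase names and the 1000 m, 100 m, and 10 m boundaries are hypothetical. The 10% hysteresis margin is also an assumption, chosen so that a noisy range estimate hovering near a boundary does not make the system flip back and forth between phases.

```python
# Hypothetical phase boundaries in metres, ordered from far to near.
BOUNDARIES = [1000.0, 100.0, 10.0]
# Hypothetical phase names, farthest first: one more phase than there are boundaries.
PHASE_NAMES = ["long_range", "mid_range", "short_range", "proximity"]


def raw_phase(range_m: float) -> int:
    """Index of the phase whose band contains range_m, ignoring hysteresis."""
    for i, boundary in enumerate(BOUNDARIES):
        if range_m > boundary:
            return i
    return len(BOUNDARIES)


def next_phase(current: int, range_m: float, margin: float = 0.1) -> int:
    """Step at most one phase per update, and only once the range estimate is a
    fractional `margin` past the boundary shared with the neighbouring phase."""
    target = raw_phase(range_m)
    if target > current:
        # Closing in: the boundary with the next-nearer phase is BOUNDARIES[current].
        if range_m < BOUNDARIES[current] * (1 - margin):
            return current + 1
    elif target < current:
        # Backing away: the boundary with the next-farther phase is BOUNDARIES[current - 1].
        if range_m > BOUNDARIES[current - 1] * (1 + margin):
            return current - 1
    return current


def run(ranges, start=0):
    """Feed a sequence of range estimates through the selector and record each phase."""
    phase = start
    history = []
    for r in ranges:
        phase = next_phase(phase, r)
        history.append(PHASE_NAMES[phase])
    return history


print(run([1200, 950, 1020, 850, 60, 8]))
# ['long_range', 'long_range', 'long_range', 'mid_range', 'short_range', 'proximity']
```

In this design the selector advances at most one phase per update, so even a sudden jump in the range estimate walks through each phase in order.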

Would Argon Ever Fly in Space?

Argon was a ground-based demonstration module and, as such, was not designed to fly “as-is” in space. However, Argon matured individual technologies, components, and algorithms that may one day fly within the integrated RPO system that NASA’s Satellite Servicing Projects Division (SSPD) is developing.

Argon’s cameras and its Vision Navigation Sensor (VNS), both described below, have already acquired flight experience through the Hubble Space Telescope Servicing Mission 4 and the STORRM experiment. SpaceCube™ has flown on MISSE-7 (Materials International Space Station Experiment) and on the STP-H4 (Space Test Program-Houston 4) payload, which the H-II Transfer Vehicle 4 delivered in August 2013.

Argon Components

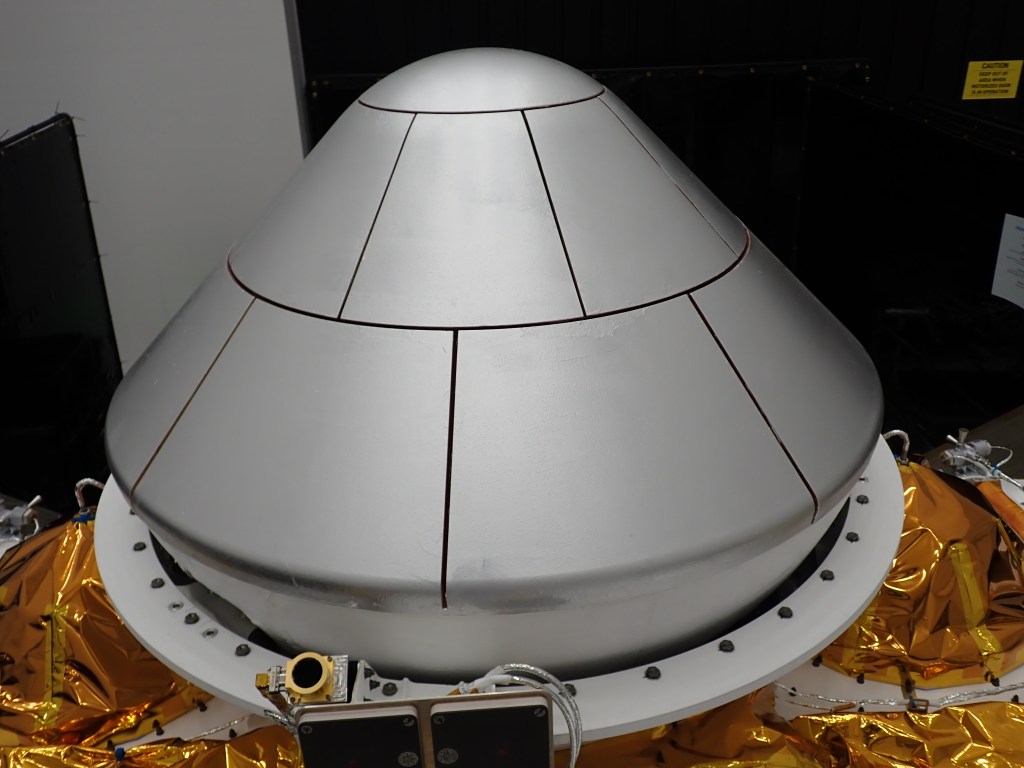

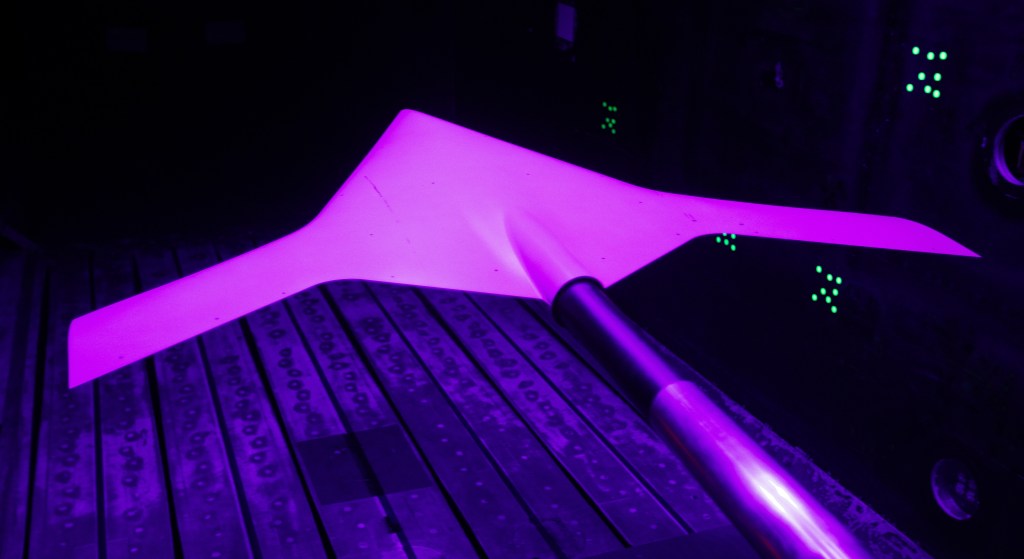

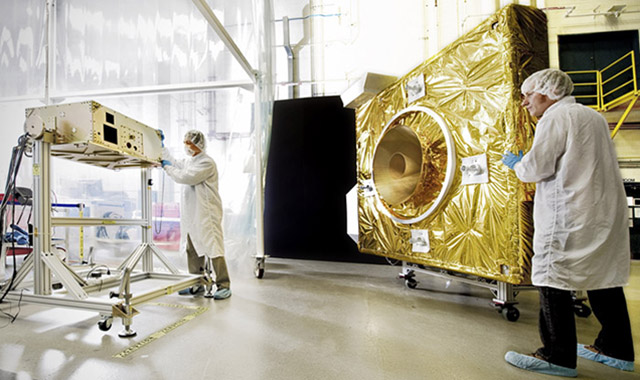

The Argon module (image below, on its stand, in 2012) housed a collection of RPO instruments: cameras, sensors, computers, algorithms, and avionics integrated into a single enclosure. Argon was mounted on either a dynamic or a static platform (a dolly, a robot arm with six degrees of freedom, a moving vehicle, etc.) and aimed at diverse targets (such as a mock satellite, at right) to collect data.

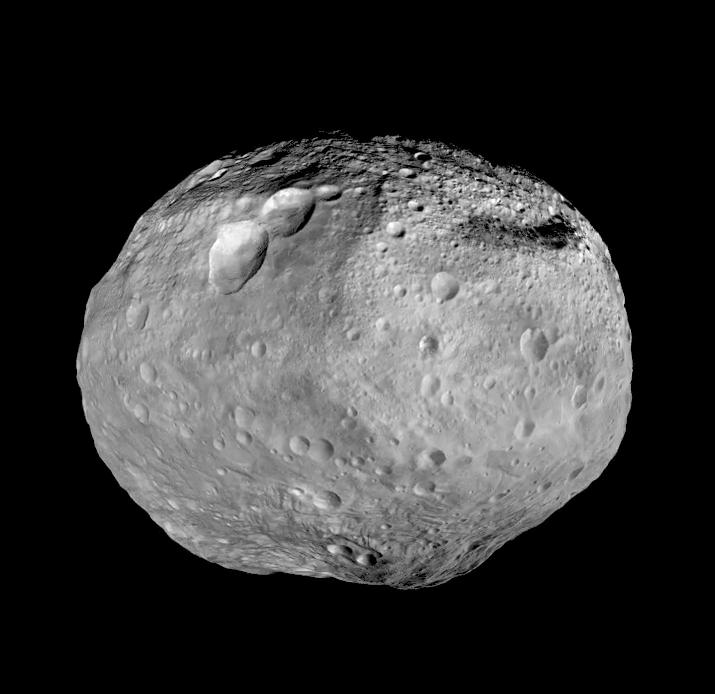

Vision Navigation Sensor: VNS is a flash lidar. Its laser transmitter flashes pulses of light onto a target (in Argon’s case, a mock satellite) to gauge the target’s relative distance, and its laser detector then receives and records the light that bounces off the target, producing full-field range (distance) and intensity images that are used for navigation. A similar unit flew during the 2011 STORRM demonstration on STS-134. Argon is maturing this technology for use in the Orion Multi-Purpose Crew Vehicle.
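Flash lidar ranging rests on time of flight: each pulse travels to the target and back, so the one-way range is the speed of light times the round-trip time, divided by two. The sketch below applies that relation to every pixel of a small grid. The function names and the use of `None` for pixels with no return are illustrative assumptions; the source does not describe how VNS itself processes its returns.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by the SI definition of the metre


def range_from_round_trip(t_seconds: float) -> float:
    """One-way range in metres: the pulse covers the distance twice."""
    return SPEED_OF_LIGHT_M_PER_S * t_seconds / 2.0


def range_image(round_trip_times):
    """Convert a 2D grid of per-pixel round-trip times (seconds) into a 2D grid
    of ranges (metres). None marks a pixel that received no return."""
    return [
        [None if t is None else range_from_round_trip(t) for t in row]
        for row in round_trip_times
    ]


# A 2x2 example: a round trip of about 66.7 ns corresponds to about 10 m,
# 1 microsecond to roughly 150 m, and one pixel saw no return at all.
times = [[20 / SPEED_OF_LIGHT_M_PER_S, 66.7e-9], [None, 1e-6]]
print(range_image(times))
```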

Long-range and short-range optical cameras: The long-range camera acts as a telescope with a narrow field of view, while the short-range camera provides a wide field of view. Together, they deliver reliable optical image data to the SpaceCube™ processor. Argon uses two of the three optical cameras that flew on the Relative Navigation System (RNS) demonstration on STS-125, the Hubble Space Telescope Servicing Mission 4.
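The split between a narrow and a wide field of view can be made concrete with the pinhole-camera relation FOV = 2·atan(w / 2f), where w is the sensor width and f the focal length. The sketch below uses hypothetical sensor and lens values, not the actual RNS camera specifications, and an assumed handoff rule: keep using the narrow-field camera while the whole target fits within 80% of its field of view, and switch to the wide-field camera once the target grows too large in the frame.

```python
import math

# Hypothetical camera parameters; the source gives no optical specifications.
CAMERAS = {
    "long_range": {"sensor_width_mm": 8.8, "focal_length_mm": 100.0},  # narrow field
    "short_range": {"sensor_width_mm": 8.8, "focal_length_mm": 8.0},   # wide field
}


def fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def angular_size_deg(target_size_m: float, range_m: float) -> float:
    """Angle subtended by a target of the given width at the given range."""
    return math.degrees(2 * math.atan(target_size_m / (2 * range_m)))


def choose_camera(target_size_m: float, range_m: float, fill_limit: float = 0.8) -> str:
    """Prefer the narrow-field camera while the whole target fits within
    fill_limit of its field of view; otherwise fall back to the wide-field one."""
    narrow = CAMERAS["long_range"]
    narrow_fov = fov_deg(narrow["sensor_width_mm"], narrow["focal_length_mm"])
    if angular_size_deg(target_size_m, range_m) <= fill_limit * narrow_fov:
        return "long_range"
    return "short_range"


for name, cam in CAMERAS.items():
    print(name, round(fov_deg(cam["sensor_width_mm"], cam["focal_length_mm"]), 1), "deg")
print(choose_camera(4.0, 1000.0), choose_camera(4.0, 20.0))
```

With these made-up numbers, a hypothetical 4 m target spans well under a degree at 1 km, so the narrow camera puts many more pixels on it; at 20 m the same target spans more than 11 degrees and no longer fits the narrow view.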

Situational Awareness Camera: A complement to the long- and short-range cameras, this camera delivers an image close to what a human eye would see. Ground operators use this view as a “sanity check” to confirm the SpaceCube™ processor’s analysis.

SpaceCube™: A space-qualified, reconfigurable, multiprocessor platform that delivers 15 to 25 times the computational power of a typical flight processor. SpaceCube™ receives the image and sensor data from Argon’s optical cameras and VNS, and runs the algorithms that match the data against pre-loaded software models of the target.
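The source does not say which matching algorithms SpaceCube™ runs. As a stand-in for the idea of fitting sensor data to a pre-loaded model, the sketch below solves the simplest version of the problem: the least-squares rotation and translation that carry 2D model points onto measured points, which is the planar case of the Kabsch (orthogonal Procrustes) method. It assumes the point correspondences are already known; real pose estimation on camera and lidar images works in 3D and must also find those correspondences.

```python
import math


def centroid(points: list[tuple[float, float]]) -> tuple[float, float]:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def align_2d(model: list[tuple[float, float]], measured: list[tuple[float, float]]):
    """Least-squares rotation angle (radians) and translation that map model
    points onto measured points, given one-to-one correspondences."""
    if len(model) != len(measured) or len(model) < 2:
        raise ValueError("need matching point lists with at least two points")
    cm, cs = centroid(model), centroid(measured)
    dot = cross = 0.0
    for (mx, my), (sx, sy) in zip(model, measured):
        # Work with centred coordinates so translation drops out of the rotation fit.
        ax, ay = mx - cm[0], my - cm[1]
        bx, by = sx - cs[0], sy - cs[1]
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # Maximising sum(b . R a) = cos(theta) * dot + sin(theta) * cross gives this angle.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated model centroid onto the measured centroid.
    tx = cs[0] - (c * cm[0] - s * cm[1])
    ty = cs[1] - (s * cm[0] + c * cm[1])
    return theta, (tx, ty)


# Usage: rotate a small hypothetical "model" by 30 degrees, shift it, and recover the pose.
model = [(0.0, 0.0), (2.0, 0.0), (0.0, 1.0), (1.0, 1.5)]
angle, shift = math.radians(30), (5.0, -3.0)
measured = [
    (math.cos(angle) * x - math.sin(angle) * y + shift[0],
     math.sin(angle) * x + math.cos(angle) * y + shift[1])
    for x, y in model
]
theta, t = align_2d(model, measured)
print(math.degrees(theta), t)
```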