System Engineering Handbook: Appendix

Contents

- Appendix A: Acronyms

- Appendix B: Glossary

- Appendix C: How to Write a Good Requirement

- C.1 Use of Correct Terms

- C.2 Editorial Checklist

- C.3 General Goodness Checklist

- C.4 Requirements Validation Checklist

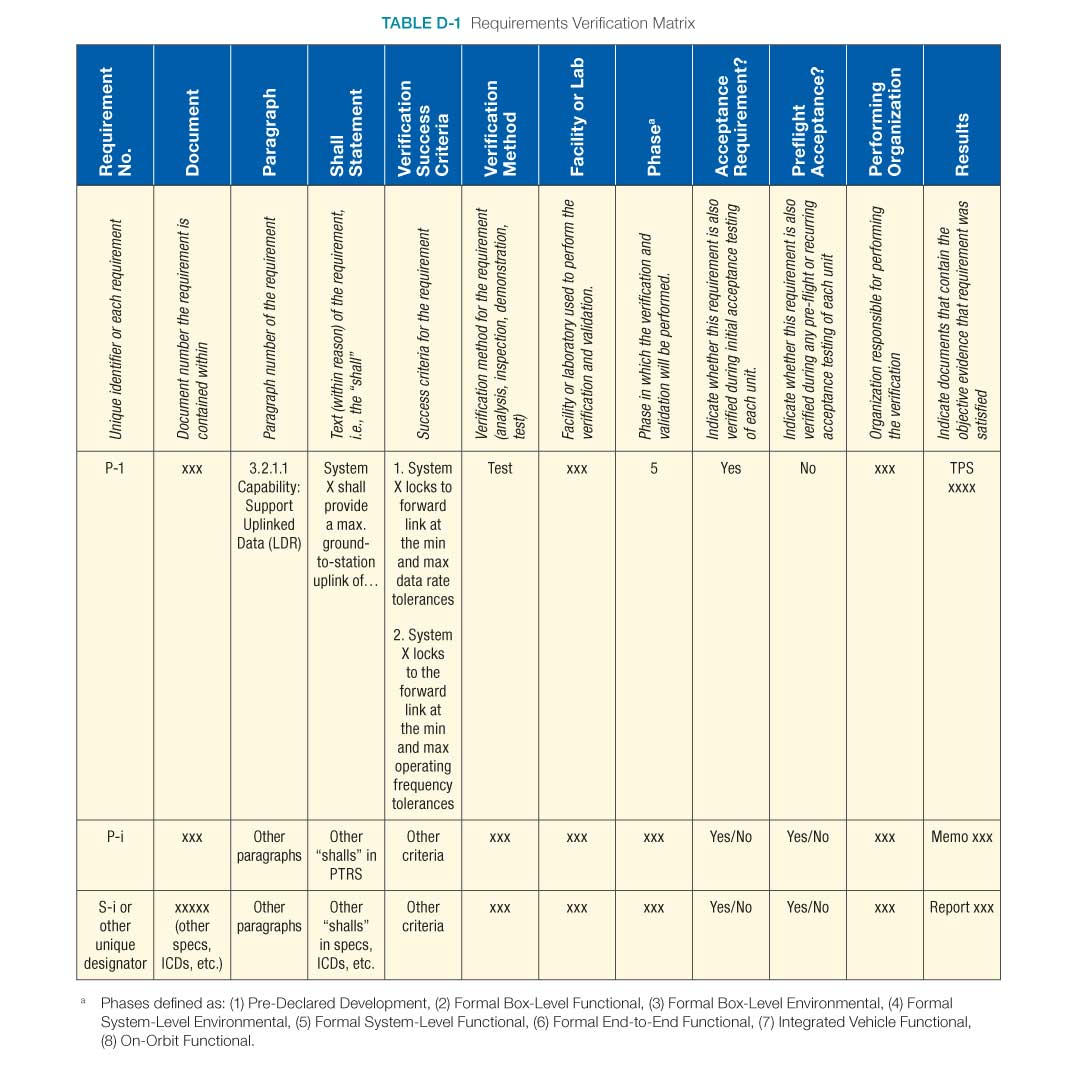

- Appendix D: Requirements Verification Matrix

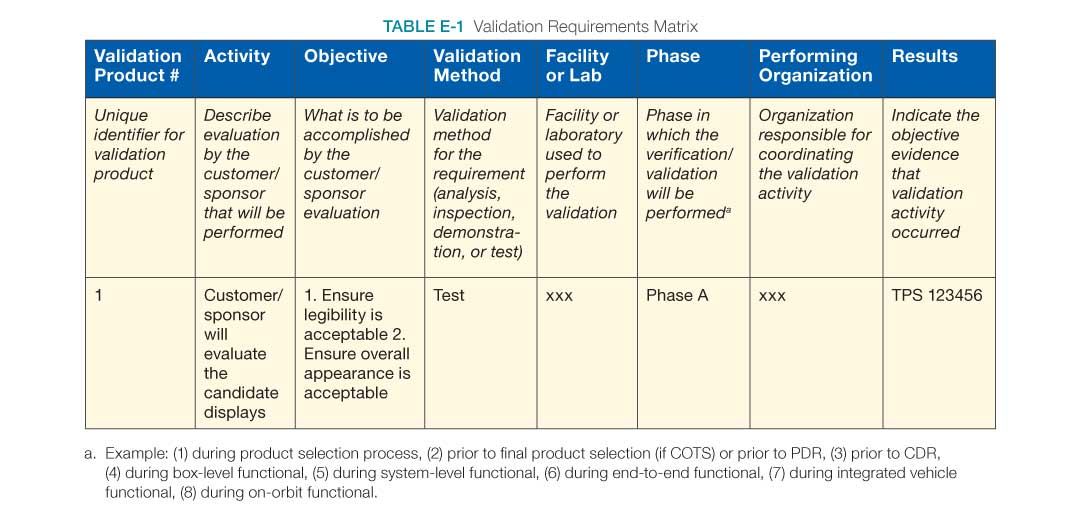

- Appendix E: Creating the Validation Plan with a Validation Requirements Matrix

- Appendix F: Functional, Timing, and State Analysis

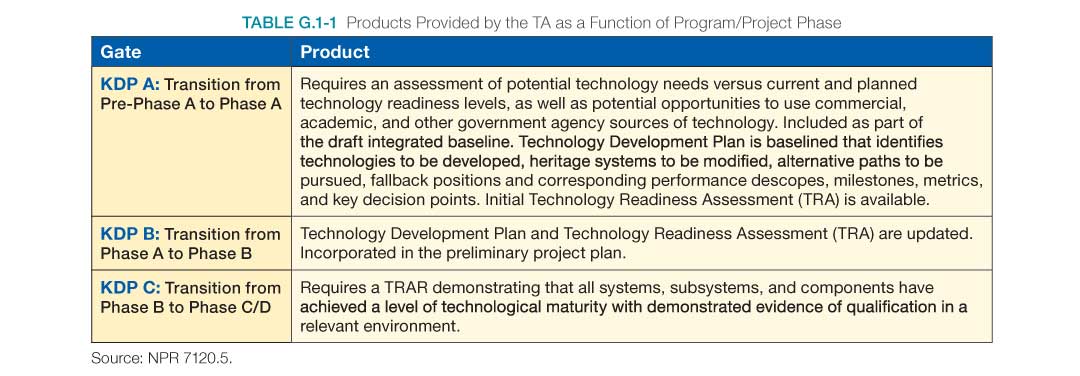

- Appendix G: Technology Assessment/Insertion

- G.1 Introduction, Purpose, and Scope

- G.2 Inputs/Entry Criteria

- G.3 How to Do Technology Assessment

- G.4 Establishing TRLs

- Appendix H: Integration Plan Outline

- H.1 Purpose

- H.2 Questions/Checklist

- H.3 Integration Plan Contents

- 1.0 Purpose of Document

- 2.0 Scope of Project

- 3.0 Integration Strategy

- 4.0 Phase 1 Integration

- 5.0 Multiple Phase Integration Steps (1 or N steps)

- Appendix I: Verification and Validation Plan Outline

- Sample Outline

- 1.0 Introduction

- 1.1 Purpose and Scope

- 1.2 Responsibility and Change Authority

- 1.3 Definitions

- 2.0 Applicable and Reference Documents

- 2.1 Applicable Documents

- 2.2 Reference Documents

- 2.3 Order of Precedence

- 3.0 System Description

- 3.1 System Requirements Flowdown

- 3.2 System Architecture

- 3.3 End Item Architectures

- 3.4 Ground Support Equipment

- 3.5 Other Architecture Descriptions

- 4.0 Verification and Validation Process

- 4.1 Verification and Validation Management Responsibilities

- 4.2 Verification Methods

- 4.3 Validation Methods

- 4.4 Certification Process

- 4.5 Acceptance Testing

- 5.0 Verification and Validation Implementation

- 5.1 System Design and Verification and Validation Flow

- 5.2 Test Articles

- 5.3 Support Equipment

- 5.4 Facilities

- 6.0 End Item Verification and Validation

- 6.1 End Item A

- 6.n End Item n

- 7.0 System Verification and Validation

- 7.1 End-Item Integration

- 7.2 Complete System Integration

- 8.0 Program Verification and Validation

- 8.1 Vehicle Integration

- 8.2 End-to-End Integration

- 8.3 On-Orbit V&V Activities

- 9.0 System Certification Products

- Appendix A: Acronyms and Abbreviations

- Appendix B: Definition of Terms

- Appendix C: Requirement Verification Matrix

- Appendix D: Validation Matrix

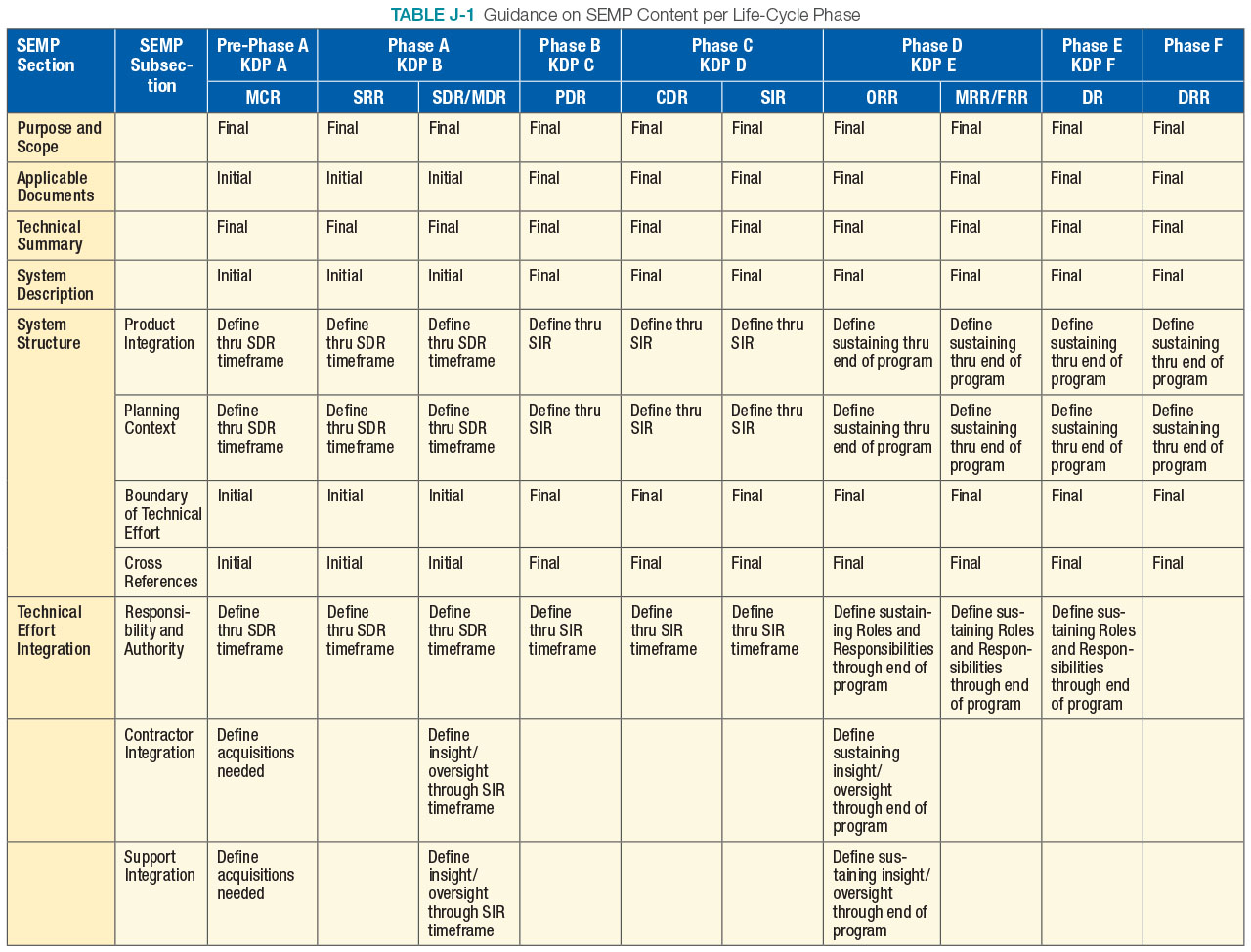

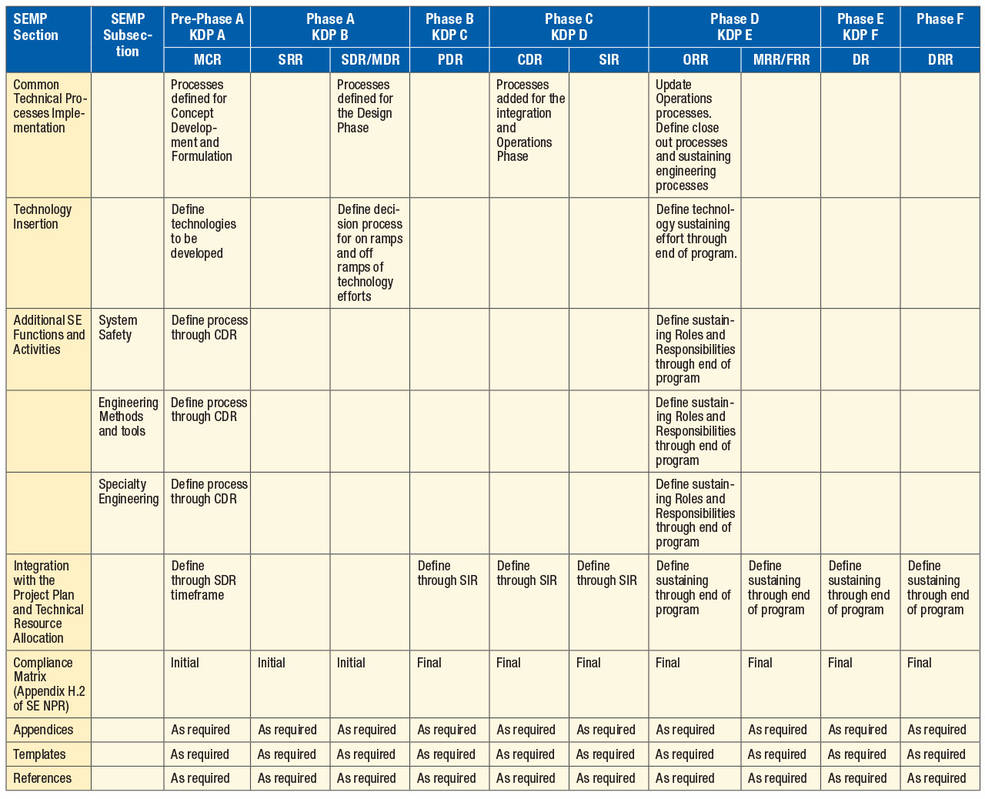

- Appendix J: SEMP Content Outline

- J.1 SEMP Content

- J.2 Terms Used

- J.3 Annotated Outline

- 1.0 Purpose and Scope

- 2.0 Applicable Documents

- 3.0 Technical Summary

- 4.0 Technical Effort Integration

- 5.0 Common Technical Processes Implementation

- 6.0 Technology Insertion

- 7.0 Additional SE Functions and Activities

- 8.0 Integration with the Project Plan and Technical Resource Allocation

- 9.0 Compliance Matrices

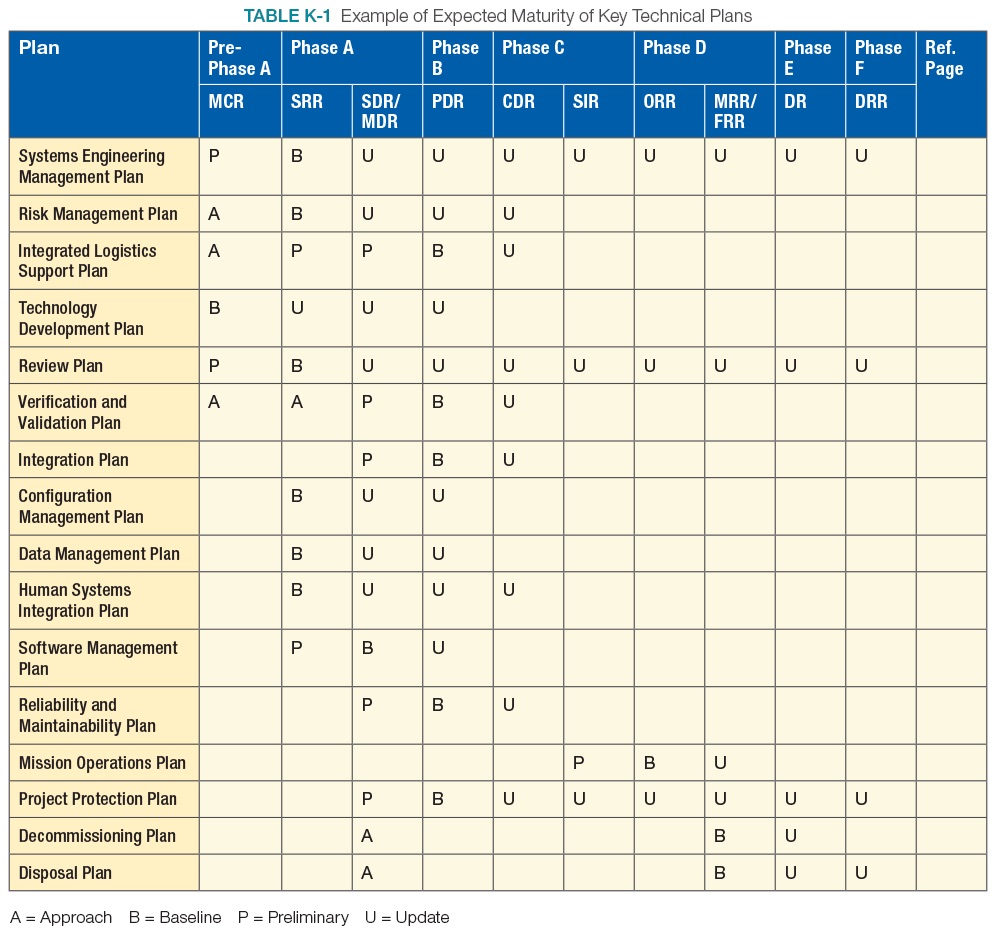

- Appendix K: Technical Plans

- Appendix L: Interface Requirements Document Outline

- 1.0 Introduction

- 1.1 Purpose and Scope

- 1.2 Precedence

- 1.3 Responsibility and Change Authority

- 2.0 Documents

- 2.1 Applicable Documents

- 2.2 Reference Documents

- 3.0 Interfaces

- 3.1 General

- 3.2 Interface Requirements

- Appendix M: CM Plan Outline

- Appendix N: Guidance on Technical Peer Reviews/Inspections

- Appendix O: Reserved

- Appendix P: SOW Review Checklist

- Appendix Q: Reserved

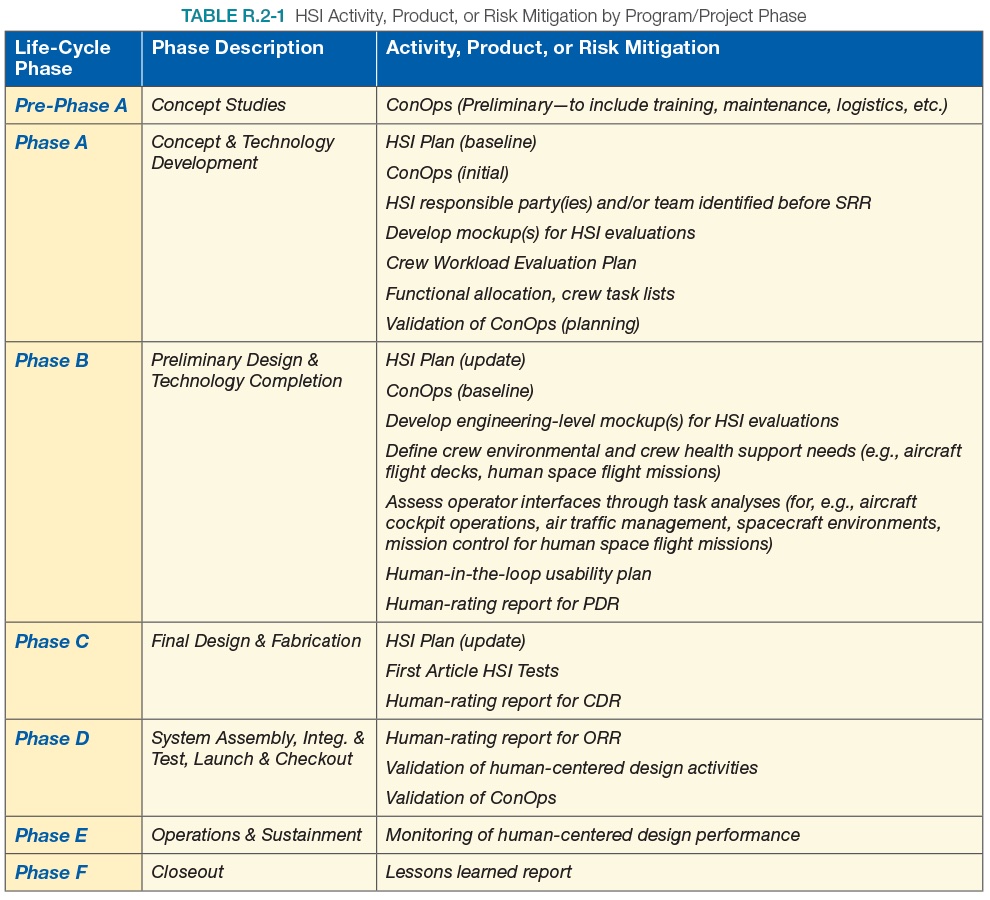

- Appendix R: HSI Plan Content Outline

- R.1 HSI Plan Overview

- R.2 HSI Plan Content Outline

- 1.0 Introduction

- 2.0 Applicable Documents

- 3.0 HSI Objectives

- 4.0 HSI Strategy

- HSI RELEVANCE

- HSI STRATEGY

- 5.0 HSI Requirements, Organization, and Risk Management

- HSI DOMAINS

- HSI REQUIREMENTS

- 6.0 HSI Implementation

- HSI IMPLEMENTATION

- HSI PLAN UPDATES

- Appendix S: Concept of Operations Annotated Outline

- Cover Page

- Table of Contents

- 1.0 Introduction

- 1.1 Project Description

- 1.2 Overview of the Envisioned System

- 2.0 Documents

- 2.1 Applicable Documents

- 2.2 Reference Documents

- 3.0 Description of Envisioned System

- 3.1 Needs, Goals and Objectives of Envisioned System

- 3.2 Overview of System and Key Elements

- 3.3 Interfaces

- 3.4 Modes of Operations

- 3.5 Proposed Capabilities

- 4.0 Physical Environment

- 5.0 Support Environment

- 6.0 Operational Scenarios, Use Cases and/or Design Reference Missions

- 6.1 Nominal Conditions

- 6.2 Off-Nominal Conditions

- 7.0 Impact Considerations

- 7.1 Environmental Impacts

- 7.2 Organizational Impacts

- 7.3 Scientific/Technical Impacts

- 8.0 Risks and Potential Issues

- Appendix A: Acronyms

- Appendix B: Glossary of Terms

- Appendix T: Systems Engineering in Phase E

- T.1 Overview

- T.2 Transition from Development to Operations

- T.3 System Engineering Processes in Phase E

- T.3.1 System Design Processes

- T.3.3 Technical Management Processes

Appendix A: Acronyms

AADL Architecture Analysis and Design Language

AD2 Advancement Degree of Difficulty Assessment

AIAA American Institute of Aeronautics and Astronautics

AO Announcement of Opportunity

AS9100 Aerospace Quality Management Standard

ASME American Society of Mechanical Engineers

ASQ American Society for Quality

CAIB Columbia Accident Investigation Board

CCB Configuration Control Board

CDR Critical Design Review

CE Concurrent Engineering or Chief Engineer

CEQ Council on Environmental Quality

CERR Critical Event Readiness Review

CHSIP Commercial Human Systems Integration Processes

CI Configuration Item

CM Configuration Management

CMO Configuration Management Organization

ConOps Concept of Operations

COSPAR Committee on Space Research

COTS Commercial Off-The-Shelf

CPI Critical Program Information

CR Change Request

CRM Continuous Risk Management

CSA Configuration Status Accounting

D&C Design and Construction

DDT&E Design, Development, Test, and Evaluation

DM Data Management

DOD (U.S.) Department of Defense

DODAF DOD Architecture Framework

DR Decommissioning Review

DRM Design Reference Mission

DRR Disposal Readiness Review

EDL Entry, Descent, and Landing

EEE Electrical, Electronic, and Electromechanical

EFFBD Enhanced Functional Flow Block Diagram

EIA Electronic Industries Alliance

EMC Electromagnetic Compatibility

EMI Electromagnetic Interference

EO (U.S.) Executive Order

EOM End of Mission

EVM Earned Value Management

FA Formulation Agreement

FAD Formulation Authorization Document

FAR Federal Acquisition Regulation

FCA Functional Configuration Audit

FFBD Functional Flow Block Diagram

FIPS Federal Information Processing Standard

FM Fault Management

FMEA Failure Modes and Effects Analysis

FMR Financial Management Requirements

FRR Flight Readiness Review

FTE Full Time Equivalent

GEO Geostationary

GOTS Government Off-The-Shelf

GSE Government-Supplied Equipment or Ground Support Equipment

GSFC Goddard Space Flight Center

HCD Human-Centered Design

HF Human Factors

HITL Human-In-The-Loop

HQ Headquarters

HSI Human Systems Integration

HSIP Human System Integration Plan

HWIL HardWare-In-the-Loop

I&T Integration and Test

ICD Interface Control Document/Drawing

ICP Interface Control Plan

IDD Interface Definition Document

IDEF0 Integration Definition (for functional modeling)

IEEE Institute of Electrical and Electronics Engineers

ILS Integrated Logistics Support

INCOSE International Council on Systems Engineering

IPT Integrated Product Team

IRD Interface Requirements Document

ISO International Organization for Standardization

IT Information Technology

ITA Internal Task Agreement

ITAR International Traffic in Arms Regulations

IV&V Independent Verification and Validation

IVHM Integrated Vehicle Health Management

IWG Interface Working Group

JCL Joint (cost and schedule) Confidence Level

JPL Jet Propulsion Laboratory

KBSI Knowledge Based Systems, Inc.

KDP Key Decision Point

KDR Key Driving Requirement

KPP Key Performance Parameter

KSC Kennedy Space Center

LCC Life Cycle Cost

LEO Low Earth Orbit or Low Earth Orbiting

M&S Modeling and Simulation or Models and Simulations

MBSE Model-Based Systems Engineering

MCR Mission Concept Review

MDAA Mission Directorate Associate Administrator

MDR Mission Definition Review

MEL Master Equipment List

MODAF (U.K.) Ministry of Defence Architecture Framework

MOE Measure of Effectiveness

MOP Measure of Performance

MOTS Modified Off-The-Shelf

MOU Memorandum of Understanding

MRB Material Review Board

MRR Mission Readiness Review

MSFC Marshall Space Flight Center

NASA (U.S.) National Aeronautics and Space Administration

NEN NASA Engineering Network

NEPA National Environmental Policy Act

NFS NASA FAR Supplement

NGO Needs, Goals, and Objectives

NIAT NASA Integrated Action Team

NID NASA Interim Directive

NOA New Obligation Authority

NOAA (U.S.) National Oceanic and Atmospheric Administration

NODIS NASA Online Directives Information System

NPD NASA Policy Directive

NPR NASA Procedural Requirements

NRC (U.S.) Nuclear Regulatory Commission

NSTS National Space Transportation System

OCE (NASA) Office of the Chief Engineer

OCIO (NASA) Office of the Chief Information Officer

OCL Object Constraint Language

OMB (U.S.) Office of Management and Budget

ORR Operational Readiness Review

OTS Off-the-Shelf

OWL Web Ontology Language

PBS Product Breakdown Structure

PCA Physical Configuration Audit or Program Commitment Agreement

PD/NSC (U.S.) Presidential Directive/National Security Council

PDR Preliminary Design Review

PFAR Post-Flight Assessment Review

PI Performance Index or Principal Investigator

PIR Program Implementation Review

PKI Public Key Infrastructure

PLAR Post-Launch Assessment Review

PM Program Manager or Project Manager

PMC Program Management Council

PPD (U.S.) Presidential Policy Directive

PRA Probabilistic Risk Assessment

PRD Project Requirements Document

PRR Production Readiness Review

QA Quality Assurance

QVT Query View Transformations

R&M Reliability and Maintainability

R&T Research and Technology

RACI Responsible, Accountable, Consulted, Informed

REC Record of Environmental Consideration

RF Radio Frequency

RFA Requests for Action

RFP Request for Proposal

RID Review Item Discrepancy or Review Item Disposition

RIDM Risk-Informed Decision-Making

RM Risk Management

RMA Rapid Mission Architecture

RUL Remaining Useful Life

SAR System Acceptance Review or Safety Analysis Report (DOE)

SBU Sensitive But Unclassified

SDR Program/System Definition Review

SE Systems Engineering

SECoP Systems Engineering Community of Practice

SEMP Systems Engineering Management Plan

SI International System of Units (French: Système international d’unités)

SIR System Integration Review

SMA Safety and Mission Assurance

SME Subject Matter Expert

SOW Statement Of Work

SP Special Publication

SRD System Requirements Document

SRR Program/System Requirements Review

SRS Software Requirements Specification

STI Scientific and Technical Information

STS Space Transportation System

SysML Systems Modeling Language

T&E Test and Evaluation

TA Technical Authority

TBD To Be Determined

TBR To Be Resolved

ToR Terms of Reference

TPM Technical Performance Measure

TRL Technology Readiness Level

TRR Test Readiness Review

TVC Thrust Vector Controller

UFE Unallocated Future Expenses

UML Unified Modeling Language

V&V Verification and Validation

WBS Work Breakdown Structure

WYE Work Year Equivalent

XMI XML Metadata Interchange

XML Extensible Markup Language

Appendix B: Glossary


– A –

Acceptable Risk: The risk that is understood and agreed to by the program/project, governing authority, mission directorate, and other customer(s) such that no further specific mitigating action is required.

Acquisition: The process for obtaining the systems, research, services, construction, and supplies that NASA needs to fulfill its missions. Acquisition, which may include procurement (contracting for products and services), begins with an idea or proposal that aligns with the NASA Strategic Plan and fulfills an identified need and ends with the completion of the program or project or the final disposition of the product or service.

Activity: A set of tasks that describe the technical effort to accomplish a process and help generate expected outcomes.

Advancement Degree of Difficulty Assessment (AD2): The process to develop an understanding of what is required to advance the level of system maturity.

Allocated Baseline (Phase C): The allocated baseline is the approved performance-oriented configuration documentation for a CI to be developed that describes the functional and interface characteristics that are allocated from a higher level requirements document or a CI and the verification required to demonstrate achievement of those specified characteristics. The allocated baseline extends the top-level performance requirements of the functional baseline to sufficient detail for initiating manufacturing or coding of a CI. The allocated baseline is controlled by NASA. The allocated baseline(s) is typically established at the Preliminary Design Review.

Analysis: Use of mathematical modeling and analytical techniques to predict the compliance of a design to its requirements based on calculated data or data derived from lower system structure end product validations.

Analysis of Alternatives: A formal analysis method that compares alternative approaches by estimating their ability to satisfy mission requirements through an effectiveness analysis and by estimating their life cycle costs through a cost analysis. The results of these two analyses are used together to produce a cost-effectiveness comparison that allows decision makers to assess the relative value or potential programmatic returns of the alternatives. An analysis of alternatives broadly examines multiple elements of program or project alternatives (including technical performance, risk, LCC, and programmatic aspects).

Analytic Hierarchy Process: A multi-attribute methodology that provides a proven, effective means to deal with complex decision-making and can assist with identifying and weighting selection criteria, analyzing the data collected for the criteria, and expediting the decision-making process.

Anomaly: The unexpected performance of intended function.

Approval: Authorization by a required management official to proceed with a proposed course of action. Approvals are documented.

Approval (for Implementation): The acknowledgment by the decision authority that the program/project has met stakeholder expectations and formulation requirements, and is ready to proceed to implementation. By approving a program/project, the decision authority commits the budget resources necessary to continue into implementation. Approval (for Implementation) is documented.

Architecture (System): Architecture is the high-level unifying structure that defines a system. It provides a set of rules, guidelines, and constraints that defines a cohesive and coherent structure consisting of constituent parts, relationships and connections that establish how those parts fit and work together. It addresses the concepts, properties and characteristics of the system and is represented by entities such as functions, functional flows, interfaces, relationships, resource flow items, physical elements, containers, modes, links, communication resources, etc. The entities are not independent but interrelated in the architecture through the relationships between them (NASA HQ).

Architecture (ISO Definition): Fundamental concepts or properties of a system in its environment embodied in its elements, relationships, and in the principles of its design and evolution (ISO 42010).

As-Deployed Baseline: The as-deployed baseline occurs at the Operational Readiness Review. At this point, the design is considered to be functional and ready for flight. All changes will have been incorporated into the documentation.

Automated: Automation refers to the allocation of system functions to machines (hardware or software) versus humans.

Autonomous: Autonomy refers to the relative locations and scope of decision-making and control functions between two locations within a system or across the system boundary.

– B –

Baseline: An agreed-to set of requirements, designs, or documents that will have changes controlled through a formal approval and monitoring process.

Bidirectional Traceability: The ability to trace any given requirement/expectation to its parent requirement/expectation and to its allocated children requirements/expectations.
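As an illustration only, the parent and child links in this definition can be held in a small index that answers queries in both directions. The class name and requirement IDs below are hypothetical and are not taken from any NASA tool; the sketch assumes each requirement has at most one parent.

```python
from collections import defaultdict


class TraceabilityIndex:
    """Stores parent/child links between requirement IDs (hypothetical IDs)."""

    def __init__(self):
        self._parent = {}                   # child ID -> parent ID
        self._children = defaultdict(list)  # parent ID -> list of child IDs

    def link(self, child, parent):
        """Record that `child` is allocated from `parent`."""
        self._parent[child] = parent
        self._children[parent].append(child)

    def parent_of(self, req_id):
        """Trace upward: return the parent ID, or None for a top-level item."""
        return self._parent.get(req_id)

    def children_of(self, req_id):
        """Trace downward: return the allocated children, in insertion order."""
        return list(self._children.get(req_id, []))


index = TraceabilityIndex()
index.link("SYS-010", "MISSION-001")
index.link("SYS-011", "MISSION-001")
index.link("SUB-100", "SYS-010")

print(index.parent_of("SUB-100"))        # upward trace
print(index.children_of("MISSION-001"))  # downward trace
```

A requirement whose upward trace returns nothing and that is not a top-level expectation would be a candidate orphan in this sketch.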

Brassboard: A medium fidelity functional unit that typically tries to make use of as much operational hardware/software as possible and begins to address scaling issues associated with the operational system. It does not have the engineering pedigree in all aspects, but is structured to be able to operate in simulated operational environments in order to assess performance of critical functions.

Breadboard: A low fidelity unit that demonstrates function only, without respect to form or fit in the case of hardware, or platform in the case of software. It often uses commercial and/or ad hoc components and is not intended to provide definitive information regarding operational performance.

– C –

Component Facilities: Complexes that are geographically separated from the NASA Center or institution to which they are assigned, but are still part of the Agency.

Concept of Operations (ConOps) (Concept Documentation): Developed early in Pre-Phase A, the ConOps describes the overall high-level concept of how the system will be used to meet stakeholder expectations, usually in a time-sequenced manner. It describes the system from an operational perspective and helps facilitate an understanding of the system goals. It stimulates the development of the requirements and architecture related to the user elements of the system. It serves as the basis for subsequent definition documents and provides the foundation for the long-range operational planning activities.

Concurrence: A documented agreement by a management official that a proposed course of action is acceptable.

Concurrent Engineering: Design in parallel rather than serial engineering fashion. It is an approach to product development that brings manufacturing, testing, assurance, operations and other disciplines into the design cycle to ensure all aspects are incorporated into the design and thus reduce overall product development time.

Configuration Items (CI): Any hardware, software, or combination of both that satisfies an end use function and is designated for separate configuration management. For example, configuration items can be referred to by an alphanumeric identifier which also serves as the unchanging base for the assignment of serial numbers to uniquely identify individual units of the CI.

Configuration Management Process: A management discipline that is applied over a product’s life cycle to provide visibility into and to control changes to performance and functional and physical characteristics. It ensures that the configuration of a product is known and reflected in product information, that any product change is beneficial and is effected without adverse consequences, and that changes are managed.

Context Diagram: A diagram that shows external systems that impact the system being designed.

Continuous Risk Management: A systematic and iterative process that efficiently identifies, analyzes, plans, tracks, controls, communicates, and documents risks associated with implementation of designs, plans, and processes.

Contract: A mutually binding legal relationship obligating the seller to furnish the supplies or services (including construction) and the buyer to pay for them. It includes all types of commitments that obligate the Government to an expenditure of appropriated funds and that, except as otherwise authorized, are in writing. In addition to bilateral instruments, contracts include (but are not limited to) awards and notices of awards; job orders or task letters issued under basic ordering agreements; letter contracts; orders, such as purchase orders under which the contract becomes effective by written acceptance or performance; and bilateral contract modifications. Contracts do not include grants and cooperative agreements.

Contractor: An individual, partnership, company, corporation, association, or other service having a contract with the Agency for the design, development, manufacture, maintenance, modification, operation, or supply of items or services under the terms of a contract to a program or project. Research grantees, research contractors, and research subcontractors are excluded from this definition.

Control Account Manager: A manager responsible for a control account and for the planning, development, and execution of the budget content for that account.

Control Gate (or milestone): A defined point in the program/project life cycle where the decision authority can evaluate progress and determine next actions. These may include a key decision point, life cycle review, or other milestones identified by the program/project.

Cost-Benefit Analysis: A methodology to determine the advantage of one alternative over another in terms of equivalent cost or benefits. It relies on totaling positive factors and subtracting negative factors to determine a net result.
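The "totaling positive factors and subtracting negative factors" step can be sketched as follows. The alternative names and figures are invented for illustration, and the sketch assumes all factors have already been converted to the same equivalent units.

```python
def net_result(positive_factors, negative_factors):
    """Total the positive factors and subtract the negative factors."""
    return sum(positive_factors) - sum(negative_factors)


# Hypothetical alternatives; all values are in the same equivalent units.
alternatives = {
    "Alternative A": net_result([120, 30], [90]),
    "Alternative B": net_result([100, 20], [50]),
}

# The alternative with the largest net result has the advantage.
preferred = max(alternatives, key=alternatives.get)
print(alternatives, preferred)
```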

Cost-Effectiveness Analysis: A systematic quantitative method for comparing the costs of alternative means of achieving the same equivalent benefit for a specific objective.

Critical Design Review: A review that demonstrates that the maturity of the design is appropriate to support proceeding with full-scale fabrication, assembly, integration, and test, and that the technical effort is on track to complete the system development meeting performance requirements within the identified cost and schedule constraints.

Critical Event (or key event): An event in the operations phase of the mission that is time-sensitive and is required to be accomplished successfully in order to achieve mission success. These events should be considered early in the life cycle as drivers for system design.

Critical Event Readiness Review: A review that evaluates the readiness of a project’s flight system to execute the critical event during flight operation.

Customer: The organization or individual that has requested a product and will receive the product to be delivered. The customer may be an end user of the product, the acquiring agent for the end user, or the requestor of the work products from a technical effort. Each product within the system hierarchy has a customer.

– D –

Data Management: DM is used to plan for, acquire, access, manage, protect, and use data of a technical nature to support the total life cycle of a system.

Decision Analysis Process: A methodology for making decisions that offers techniques for modeling decision problems mathematically and finding optimal decisions numerically. The methodology entails identifying alternatives, one of which should be decided upon; possible events, one of which occurs thereafter; and outcomes, each of which results from a combination of decision and event.

Decision Authority: The individual authorized by the Agency to make important decisions for programs and projects under his or her authority.

Decision Matrix: A methodology for evaluating alternatives in which evaluation criteria are typically displayed in rows on the left side of the matrix and alternatives are the column headings of the matrix. A “weight” is typically assigned to each criterion.
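A minimal sketch of such a matrix, assuming a simple weighted-sum scoring rule (the definition does not prescribe one). The criteria, weights, and scores are hypothetical.

```python
# Rows are criteria, columns are alternatives; every value is illustrative.
weights = {"cost": 5, "performance": 3, "risk": 2}
scores = {
    "cost":        {"Option 1": 3, "Option 2": 4},
    "performance": {"Option 1": 5, "Option 2": 3},
    "risk":        {"Option 1": 2, "Option 2": 4},
}


def weighted_totals(weights, scores):
    """Sum weight * score down each alternative's column."""
    totals = {}
    for criterion, row in scores.items():
        for alternative, score in row.items():
            totals[alternative] = totals.get(alternative, 0) + weights[criterion] * score
    return totals


totals = weighted_totals(weights, scores)
best = max(totals, key=totals.get)
print(totals, best)
```

Integer weights are used here only to keep the arithmetic exact; normalized fractional weights would rank the alternatives the same way.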

Decision Support Package: Documentation submitted in conjunction with formal reviews and change requests.

Decision Tree: A decision model that displays the expected consequences of all decision alternatives by making discrete all “chance” nodes, and, based on this, calculating and appropriately weighting the possible consequences of all alternatives.
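One common way to weight the consequences is to roll the tree back from its leaves: take a probability-weighted average at each chance node and the best value at each decision node. The sketch below assumes that rule and uses an invented two-option tree; the node layout is a hypothetical representation, not a standard format.

```python
def evaluate(node):
    """Return the expected value of a node by rolling the tree back."""
    kind = node["kind"]
    if kind == "outcome":
        return node["value"]
    if kind == "chance":
        # Probability-weighted average over the discrete branches.
        return sum(p * evaluate(child) for p, child in node["branches"])
    if kind == "decision":
        # Assume the decision maker picks the highest expected value.
        return max(evaluate(child) for _, child in node["options"])
    raise ValueError(f"unknown node kind: {kind}")


def outcome(value):
    return {"kind": "outcome", "value": value}


# Hypothetical tree: two alternatives, each followed by one chance node.
tree = {
    "kind": "decision",
    "options": [
        ("Build", {"kind": "chance",
                   "branches": [(0.5, outcome(100)), (0.5, outcome(-20))]}),
        ("Buy", {"kind": "chance",
                 "branches": [(0.25, outcome(80)), (0.75, outcome(40))]}),
    ],
}

expected = {name: evaluate(child) for name, child in tree["options"]}
best_option = max(expected, key=expected.get)
print(expected, best_option)
```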

Decommissioning Review: A review that confirms the decision to terminate or decommission a system and assesses the readiness for the safe decommissioning and disposal of system assets. The DR is normally held near the end of routine mission operations upon accomplishment of planned mission objectives. It may be advanced if some unplanned event gives rise to a need to prematurely terminate the mission, or delayed if operational life is extended to permit additional investigations.

Deliverable Data Item: Consists of technical data, such as requirements specifications, design documents, management data plans, and metrics reports, that have been identified as items to be delivered with an end product.

Demonstration: Showing that the use of an end product achieves the individual specified requirement (verification) or stakeholder expectation (validation). It is generally a basic confirmation of performance capability, differentiated from testing by the lack of detailed data gathering. Demonstrations can involve the use of physical models or mock-ups; for example, a requirement that all controls shall be reachable by the pilot could be verified by having a pilot perform flight-related tasks in a cockpit mock-up or simulator. A demonstration could also be the actual operation of the end product by highly qualified personnel, such as test pilots, who perform a one-time event that demonstrates a capability to operate at extreme limits of system performance.

Derived Requirements: Requirements arising from constraints, consideration of issues implied but not explicitly stated in the high-level direction provided by NASA Headquarters and Center institutional requirements, factors introduced by the selected architecture, and the design. These requirements are finalized through requirements analysis as part of the overall systems engineering process and become part of the program or project requirements baseline.

Descope: As a verb, take out of (or remove from) the scope of a project. As a noun, as in “performance descope,” it indicates the process or the result of the process of narrowing the scope; i.e., removing part of the original scope.

Design Solution Definition Process: The process used to translate the outputs of the logical decomposition process into a design solution definition. It includes transforming the defined logical decomposition models and their associated sets of derived technical requirements into alternative solutions and analyzing each alternative to be able to select a preferred alternative and fully define that alternative into a final design solution that will satisfy the technical requirements.

Designated Governing Authority: For the technical effort, this is the Center Director or the person that has been designated by the Center Director to ensure the appropriate level of technical management oversight. For large programs, this will typically be the Engineering Technical Authority. For smaller projects, this function can be delegated to line managers.

Detection: Determination that system state or behavior is different from expected performance.

Diagnosis: Determining the possible locations and/or causes of an anomaly or a failure.

Discrepancy: Any observed variance from, lack of agreement with, or contradiction to the required or expected outcome, configuration, or result.

– E –

Earned Value: The sum of the budgeted cost for tasks and products that have actually been produced (completed or in progress) at a given time in the schedule.
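A small worked sketch of this sum. It assumes in-progress tasks are credited in proportion to their percent complete, which is one of several crediting rules a project might choose; the task names and budgets are hypothetical.

```python
# (task name, budgeted cost, fraction complete at the status date)
tasks = [
    ("Design review package", 100, 1.0),  # completed
    ("Prototype build",       200, 0.5),  # in progress
    ("Environmental test",     50, 0.0),  # not started
]


def earned_value(tasks):
    """Sum budgeted cost times fraction complete (assumed crediting rule)."""
    return sum(budget * fraction for _, budget, fraction in tasks)


ev = earned_value(tasks)
print(ev)
```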

Earned Value Management: A tool for measuring and assessing project performance through the integration of technical scope with schedule and cost objectives during the execution of the project. EVM provides quantification of technical progress, enabling management to gain insight into project status and project completion costs and schedules. Two essential characteristics of successful EVM are EVM system data integrity and carefully targeted monthly EVM data analyses (i.e., risky WBS elements).

Emergent Behavior: An unanticipated behavior shown by a system due to interactions between large numbers of simple components of that system.

End Product: The hardware/software or other product that performs the operational functions. This product is to be delivered to the next product layer or to the final customer.

Enabling Products: The life cycle support products and services (e.g., production, test, deployment, training, maintenance, and disposal) that facilitate the progression and use of the operational end product through its life cycle. Since the end product and its enabling products are interdependent, they are viewed as a system. Project responsibility thus extends to acquiring services from the relevant enabling products in each life cycle phase. When a suitable enabling product does not already exist, the project that is responsible for the end product may also be responsible for creating and using the enabling product.

Engineering Unit: A high fidelity unit that demonstrates critical aspects of the engineering processes involved in the development of the operational unit. Engineering test units are intended to closely resemble the final product (hardware/software) to the maximum extent possible and are built and tested so as to establish confidence that the design will function in the expected environments. In some cases, the engineering unit will become the final product, assuming that proper traceability has been exercised over the components and hardware handling.

Enhanced Functional Flow Block Diagram: A block diagram that represents control flows and data flows as well as system functions and flow.

Entrance Criteria: Guidance for minimum accomplishments each project needs to fulfill prior to a life cycle review.

Environmental Impact: The direct, indirect, or cumulative beneficial or adverse effect of an action on the environment.

Environmental Management: The activity of ensuring that program and project actions and decisions that potentially impact or damage the environment are assessed and evaluated during the formulation and planning phase and reevaluated throughout implementation. This activity is performed according to all NASA policy and Federal, state, and local environmental laws and regulations.

Establish (with respect to processes): The act of developing policy, work instructions, or procedures to implement process activities.

Evaluation: The continual self- and independent assessment of the performance of a program or project and incorporation of the evaluation findings to ensure adequacy of planning and execution according to plan.

Extensibility: The ability of a decision to be extended to other applications.

– F –

Failure: The inability of a system, subsystem, component, or part to perform its required function within specified limits (Source: NPR 8715.3 and Avizienis 2004).

Failure Tolerance: The ability to sustain a certain number of failures and still retain capability (Source: NPR 8705.2). A function should be preserved despite the presence of any of a specified number of coincident, independent failure causes of specified types.

Fault: A physical or logical cause, which explains a failure (Source: Avizienis 2004).

Fault Identification: Determining the possible locations of a failure or anomaly cause(s), to a defined level of granularity.

Fault Isolation: The act of containing the effects of a fault to limit the extent of failure.

Fault Management: A specialty engineering discipline that encompasses practices that enable an operational system to contain, prevent, detect, diagnose, identify, respond to, and recover from conditions that may interfere with nominal mission operations.

Fault Tolerance: See “Failure Tolerance.”

Feasible: Initial evaluations show that the concept credibly falls within the technical, cost, and schedule constraints for the project.

Flexibility: The ability of a decision to support more than one current application.

Flight Readiness Review: A review that examines tests, demonstrations, analyses, and audits that determine the system’s readiness for a safe and successful flight/launch and for subsequent flight operations. It also ensures that all flight and ground hardware, software, personnel, and procedures are operationally ready.

Float: The amount of time that a task in a project network schedule can be delayed without causing a delay to subsequent tasks or the project completion date.
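As an illustration of how float can be derived, the sketch below runs a forward and a backward pass over a small task network. The task names, durations, and the `total_float` function are hypothetical and exist only for this example. It assumes finish-to-start dependencies and an acyclic network, and it computes total float (the delay a task can absorb without moving the project completion date), not free float.

```python
from collections import defaultdict

def total_float(durations, predecessors):
    """Return total float per task for an acyclic finish-to-start network.

    durations: dict task -> duration
    predecessors: dict task -> list of predecessor tasks
    """
    # Build successor lists and a topological order (Kahn's algorithm).
    successors = defaultdict(list)
    indegree = {task: 0 for task in durations}
    for task, preds in predecessors.items():
        for pred in preds:
            successors[pred].append(task)
            indegree[task] += 1
    order = [task for task in durations if indegree[task] == 0]
    for task in order:  # the list grows while it is being traversed
        for succ in successors[task]:
            indegree[succ] -= 1
            if indegree[succ] == 0:
                order.append(succ)

    # Forward pass: earliest start and finish of each task.
    early_start, early_finish = {}, {}
    for task in order:
        preds = predecessors.get(task, [])
        early_start[task] = max((early_finish[p] for p in preds), default=0)
        early_finish[task] = early_start[task] + durations[task]
    project_end = max(early_finish.values())

    # Backward pass: latest start that still meets the project end date.
    late_start = {}
    for task in reversed(order):
        late_finish = min((late_start[s] for s in successors[task]),
                          default=project_end)
        late_start[task] = late_finish - durations[task]

    return {task: late_start[task] - early_start[task] for task in durations}

# Hypothetical four-task network (durations in days).
durations = {"design": 3, "build": 5, "docs": 2, "test": 2}
predecessors = {"build": ["design"], "docs": ["design"], "test": ["build", "docs"]}
floats = total_float(durations, predecessors)
```

In this example only `docs` has nonzero float, because it runs in parallel with the longer `build` task. Tasks with zero float lie on the critical path.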

Formulation Phase: The first part of the NASA management life cycle defined in NPR 7120.5 where system requirements are baselined, feasible concepts are determined, a system definition is baselined for the selected concept(s), and preparation is made for progressing to the Implementation Phase.

Functional Analysis: The process of identifying, describing, and relating the functions a system should perform to fulfill its goals and objectives.

Functional Baseline (Phase B): The functional baseline is the approved configuration documentation that describes a system’s or top-level CIs’ performance requirements (functional, interoperability, and interface characteristics) and the verification required to demonstrate the achievement of those specified characteristics.

Functional Configuration Audit (FCA): Examines the functional characteristics of the configured product and verifies that the product has met, via test results, the requirements specified in its functional baseline documentation approved at the PDR and CDR plus any approved changes thereafter. FCAs will be conducted on both hardware- and software-configured products and will precede the PCA of the configured product.

Functional Decomposition: A subfunction under logical decomposition and design solution definition, it is the examination of a function to identify subfunctions necessary for the accomplishment of that function and functional relationships and interfaces.

Functional Flow Block Diagram: A block diagram that defines system functions and the time sequence of functional events.

– G –

Gantt Chart: A bar chart depicting start and finish dates of activities and products in the WBS.

Goal: Goals elaborate on the need and constitute a specific set of expectations for the system. They further define what we hope to accomplish by addressing the critical issues identified during the problem assessment. Goals need not be in a quantitative or measurable form, but they must allow us to assess whether the system has achieved them.

Government Mandatory Inspection Points: Inspection points required by Federal regulations to ensure 100 percent compliance with safety/mission-critical attributes when noncompliance can result in loss of life or loss of mission.

– H –

Health Assessment: The activity under Fault Management that carries out detection, diagnosis, and identification of faults and prediction of fault propagation states into the future.

Health Monitoring: The activity under Fault Management that implements system state data collection, storage, and reporting through sensing and communication.

Heritage (or legacy): Refers to the original manufacturer’s level of quality and reliability that is built into the parts, which have been proven by (1) time in service, (2) number of units in service, (3) mean time between failure performance, and (4) number of use cycles.

Human-Centered Design: An approach to the development of interactive systems that focuses on making systems usable by ensuring that the needs, abilities, and limitations of the human user are met throughout the system’s life cycle.

Human Factors Engineering: The discipline that studies human-system interfaces and provides requirements, standards, and guidelines to ensure the human component of an integrated system is able to function as intended.

Human Systems Integration: An interdisciplinary and comprehensive management and technical process that focuses on the integration of human considerations into the system acquisition and development processes to enhance human system design, reduce life cycle ownership cost, and optimize total system performance.

– I –

Implementation Phase: The part of the NASA management life cycle defined in NPR 7120.5 where the detailed design of system products is completed and the products to be deployed are fabricated, assembled, integrated, and tested and the products are deployed to their customers or users for their assigned use or mission.

Incommensurable Costs: Costs that cannot be easily measured, such as controlling pollution on launch or mitigating debris.

Influence Diagram: A compact graphical and mathematical representation of a decision state. Its elements are decision nodes, chance nodes, value nodes, and arrows to indicate the relationships among these elements.
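To make the node types concrete, the sketch below encodes a very small influence diagram with one decision node, one chance node, and one value node, with arrows from the decision and chance nodes into the value node. All option names, probabilities, and payoffs are hypothetical, and evaluating the diagram by maximizing expected value is an assumption of this example rather than a rule stated in this glossary.

```python
# Decision node: the alternatives under the decision-maker's control.
decision_options = ["add_redundancy", "single_string"]

# Chance node: uncertain outcomes with assumed probabilities.
chance_outcomes = {"component_fails": 0.2, "component_works": 0.8}

# Value node: payoff (arbitrary units) for each (decision, outcome) pair.
value_table = {
    ("add_redundancy", "component_fails"): 80,
    ("add_redundancy", "component_works"): 90,
    ("single_string", "component_fails"): 0,
    ("single_string", "component_works"): 100,
}

def expected_value(option):
    """Average the value node over the chance node for one decision option."""
    return sum(prob * value_table[(option, outcome)]
               for outcome, prob in chance_outcomes.items())

def best_option():
    """Pick the decision option with the highest expected value."""
    return max(decision_options, key=expected_value)
```

With these assumed numbers, the redundant design has the higher expected value even though the single-string design pays more when nothing fails.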

Inspection: The visual examination of a realized end product. Inspection is generally used to verify physical design features or specific manufacturer identification. For example, if there is a requirement that the safety arming pin has a red flag with the words “Remove Before Flight” stenciled on the flag in black letters, a visual inspection of the arming pin flag can be used to determine if this requirement was met.

Integrated Logistics Support: The management, engineering activities, analysis, and information management associated with design requirements definition, material procurement and distribution, maintenance, supply replacement, transportation, and disposal that are identified by space flight and ground systems supportability objectives.

Interface Management Process: The process to assist in controlling product development when efforts are divided among parties (e.g., Government, contractors, geographically diverse technical teams) and/or to define and maintain compliance among the products that should interoperate.

Iterative: Application of a process to the same product or set of products to correct a discovered discrepancy or other variation from requirements. (See “recursive” and “repeatable.”)

– K –

Key Decision Point: The event at which the decision authority determines the readiness of a program/project to progress to the next phase of the life cycle (or to the next KDP).

Key Event (or Critical Event): See “Critical Event.”

Key Performance Parameter: Those capabilities or characteristics (typically engineering-based or related to health and safety or operational performance) considered most essential for successful mission accomplishment. They characterize the major drivers of operational performance, supportability, and interoperability.

Knowledge Management: A collection of policies, processes, and practices relating to the use of intellectual- and knowledge-based assets in an organization.

– L –

Least-Cost Analysis: A methodology that identifies the least-cost project option for meeting the technical requirements.
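A minimal sketch of the idea: first discard the options that fail any technical requirement, then choose the cheapest of those that remain. The option records, requirement names, and the `least_cost_option` function are hypothetical, and the example assumes every requirement is a "at least this much" threshold.

```python
def least_cost_option(options, requirements):
    """Return the cheapest option that meets every requirement, or None.

    options: list of dicts with "name", "cost", and "performance" keys
    requirements: dict parameter -> minimum acceptable value
    """
    feasible = [
        option for option in options
        if all(option["performance"].get(name, float("-inf")) >= minimum
               for name, minimum in requirements.items())
    ]
    return min(feasible, key=lambda option: option["cost"], default=None)

# Hypothetical requirements and candidate options.
requirements = {"data_rate_mbps": 100, "lifetime_years": 5}
options = [
    {"name": "A", "cost": 120, "performance": {"data_rate_mbps": 150, "lifetime_years": 5}},
    {"name": "B", "cost": 90, "performance": {"data_rate_mbps": 80, "lifetime_years": 7}},
    {"name": "C", "cost": 100, "performance": {"data_rate_mbps": 100, "lifetime_years": 6}},
]
choice = least_cost_option(options, requirements)
```

Option B is the cheapest overall but misses the data-rate requirement, so the analysis selects option C.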

Liens: Requirements or tasks not satisfied that have to be resolved within a certain assigned time to allow passage through a control gate to proceed.

Life Cycle Cost (LCC): The total of the direct, indirect, recurring, nonrecurring, and other related expenses both incurred and estimated to be incurred in the design, development, verification, production, deployment, prime mission operation, maintenance, support, and disposal of a project, including closeout, but not extended operations. The LCC of a project or system can also be defined as the total cost of ownership over the project or system’s planned life cycle from Formulation (excluding Pre–Phase A) through Implementation (excluding extended operations). The LCC includes the cost of the launch vehicle.

Logical Decomposition Models: Mathematical or visual representations of the relationships between requirements as identified in the Logical Decomposition Process.

Logical Decomposition Process: A process used to improve understanding of the defined technical requirements and the relationships among the requirements (e.g., functional, behavioral, performance, and temporal) and to transform the defined set of technical requirements into a set of logical decomposition models and their associated set of derived technical requirements for lower levels of the system and for input to the Design Solution Definition Process.

Logistics (or Integrated Logistics Support): See “Integrated Logistics Support.”

Loosely Coupled Program: Programs that address specific objectives through multiple space flight projects of varied scope. While each individual project has an assigned set of mission objectives, architectural and technological synergies and strategies that benefit the program as a whole are explored during the formulation process. For instance, Mars orbiters designed for more than one Mars year in orbit are required to carry a communication system to support present and future landers.

– M –

Maintain (with respect to establishment of processes): The act of planning the process, providing resources, assigning responsibilities, training people, managing configurations, identifying and involving stakeholders, and monitoring process effectiveness.

Maintainability: The measure of the ability of an item to be retained in or restored to specified conditions when maintenance is performed by personnel having specified skill levels, using prescribed procedures and resources, at each prescribed level of maintenance.

Margin: The allowances carried in budget, projected schedules, and technical performance parameters (e.g., weight, power, or memory) to account for uncertainties and risks. Margins are allocated in the formulation process based on assessments of risks and are typically consumed as the program/project proceeds through the life cycle.

Master Equipment List (MEL): The MEL is a listing of all the parts of a system and includes pertinent information such as serial numbers, model numbers, manufacturer, equipment type, system/element it is located within, etc.

Measure of Effectiveness (MOE): A measure by which a stakeholder’s expectations are judged in assessing satisfaction with products or systems produced and delivered in accordance with the associated technical effort. The MOE is deemed to be critical to not only the acceptability of the product by the stakeholder but also critical to operational/mission usage. A MOE is typically qualitative in nature or not able to be used directly as a design-to requirement.

Measure of Performance (MOP): A quantitative measure that, when met by the design solution, helps ensure that a MOE for a product or system will be satisfied. These MOPs are given special attention during design to ensure that the MOEs to which they are associated are met. There are generally two or more measures of performance for each MOE.

Metric: The result of a measurement taken over a period of time that communicates vital information about the status or performance of a system, process, or activity. A metric should drive appropriate action.

Mission: A major activity required to accomplish an Agency goal or to effectively pursue a scientific, technological, or engineering opportunity directly related to an Agency goal. Mission needs are independent of any particular system or technological solution.

Mission Concept Review: A review that affirms the mission/project need and examines the proposed mission’s objectives and the ability of the concept to fulfill those objectives.

Mission Definition Review: A life cycle review that evaluates whether the proposed mission/system architecture is responsive to the program mission/system functional and performance requirements and requirements have been allocated to all functional elements of the mission/system.

Mitigation: An action taken to mitigate the effects of a fault towards achieving existing or redefined system goals.

Model: A model is a physical, mathematical, or logical representation of reality.

– N –

Need: A single statement that drives everything else. It should relate to the problem that the system is supposed to solve, but not be the solution.

Nonconforming product: Software, hardware, or combination, either produced, acquired, or in some combination that is identified as not meeting documented requirements.

– O –

Objective: Specific target levels of outputs the system must achieve. Each objective should relate to a particular goal. Generally, objectives should meet four criteria:

- Specific: Objectives should aim at results and reflect what the system needs to do, but they don’t outline how to implement the solution. They need to be specific enough to provide clear direction, so developers, customers, and testers can understand them.

- Measurable: Objectives need to be quantifiable and verifiable. The project needs to monitor the system’s success in achieving each objective.

- Aggressive, but attainable: Objectives need to be challenging but reachable, and targets need to be realistic. At first, objectives “To Be Determined” (TBD) may be included until trade studies occur, operations concepts solidify, or technology matures. But objectives need to be feasible before starting to write requirements and design systems.

- Results-oriented: Objectives need to focus on desired outputs and outcomes, not on the methods used to achieve the target (what, not how).

Objective Function (sometimes Cost Function): A mathematical expression of the values of combinations of possible outcomes as a single measure of cost-effectiveness.
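One common form of objective function, shown here only as an illustrative assumption, is a weighted sum of normalized outcome scores. The criteria names, weights, and scores below are hypothetical; a real project would derive them from its own measures of effectiveness and cost.

```python
def objective(scores, weights):
    """Collapse several outcome scores into one number (higher is better).

    scores: dict criterion -> normalized score in [0, 1]
    weights: dict criterion -> relative importance (assumed to sum to 1)
    """
    return sum(weights[name] * scores[name] for name in weights)

# Hypothetical weights and two candidate concepts.
weights = {"science_return": 0.5, "cost": 0.3, "schedule": 0.2}
concept_a = {"science_return": 0.9, "cost": 0.4, "schedule": 0.6}
concept_b = {"science_return": 0.7, "cost": 0.8, "schedule": 0.7}

# Rank the concepts from best to worst by their objective value.
ranked = sorted(
    {"A": concept_a, "B": concept_b}.items(),
    key=lambda item: objective(item[1], weights),
    reverse=True,
)
```

Here concept B ranks first because its better cost and schedule scores outweigh concept A's higher science return under the assumed weights. Changing the weights can reverse the ranking, which is why the weights themselves deserve scrutiny.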

Operational Environment: The environment in which the final product will be operated. In the case of space flight hardware/software, it is space. In the case of ground-based or airborne systems that are not directed toward space flight, it is the environments defined by the scope of operations. For software, the environment is defined by the operational platform.

Operational Readiness Review: A review that examines the actual system characteristics and the procedures used in the system or product’s operation and ensures that all system and support (flight and ground) hardware, software, personnel, procedures, and user documentation accurately reflect the deployed state of the system and are operationally ready.

Operations Concept: A description of how the flight system and the ground system are used together to ensure that the concept of operation is reasonable. This might include how mission data of interest, such as engineering or scientific data, are captured, returned to Earth, processed, made available to users, and archived for future reference. (Source: NPR 7120.5)

Optimal Solution: A feasible solution that best meets criteria when balanced at a system level.

Other Interested Parties (Stakeholders): A subset of “stakeholders,” other interested parties are groups or individuals who are not customers of a planned technical effort but may be affected by the resulting product, the manner in which the product is realized or used, or have a responsibility for providing life cycle support services.

– P –

Peer Review: Independent evaluation by internal or external subject matter experts who do not have a vested interest in the work product under review. Peer reviews can be planned, focused reviews conducted on selected work products by the producer’s peers to identify defects and issues prior to that work product moving into a milestone review or approval cycle.

Performance Standards: Defines what constitutes acceptable performance by the provider. Common metrics for use in performance standards include cost and schedule.

Physical Configuration Audits (PCA) or configuration inspection: The PCA examines the physical configuration of the configured product and verifies that the product corresponds to the build-to (or code-to) product baseline documentation previously approved at the CDR plus the approved changes thereafter. PCAs are conducted on both hardware- and software-configured products.

Post-Flight Assessment Review: Evaluates how well mission objectives were met during a mission and identifies all flight and ground system anomalies that occurred during the flight and determines the actions necessary to mitigate or resolve the anomalies for future flights of the same spacecraft design.

Post-Launch Assessment Review: A review that evaluates the readiness of the spacecraft systems to proceed with full, routine operations after post-launch deployment. The review also evaluates the status of the project plans and the capability to conduct the mission with emphasis on near-term operations and mission-critical events.

Precedence Diagram: Workflow diagram that places activities in boxes connected by dependency arrows; typical of a Gantt chart.

Preliminary Design Review: A review that demonstrates that the preliminary design meets all system requirements with acceptable risk and within the cost and schedule constraints and establishes the basis for proceeding with detailed design. It will show that the correct design option has been selected, interfaces have been identified, and verification methods have been described.

Process: A set of activities used to convert inputs into desired outputs to generate expected outcomes and satisfy a purpose.

Producibility: A system characteristic associated with the ease and economy with which a completed design can be transformed (i.e., fabricated, manufactured, or coded) into a hardware and/or software realization.

Product: A part of a system consisting of end products that perform operational functions and enabling products that perform life cycle services related to the end product or a result of the technical efforts in the form of a work product (e.g., plan, baseline, or test result).

Product Baseline (Phase D/E): The product baseline is the approved technical documentation that describes the configuration of a CI during the production, fielding/deployment, and operational support phases of its life cycle. The product baseline describes detailed physical or form, fit, and function characteristics of a CI; the selected functional characteristics designated for production acceptance testing; and the production acceptance test requirements.

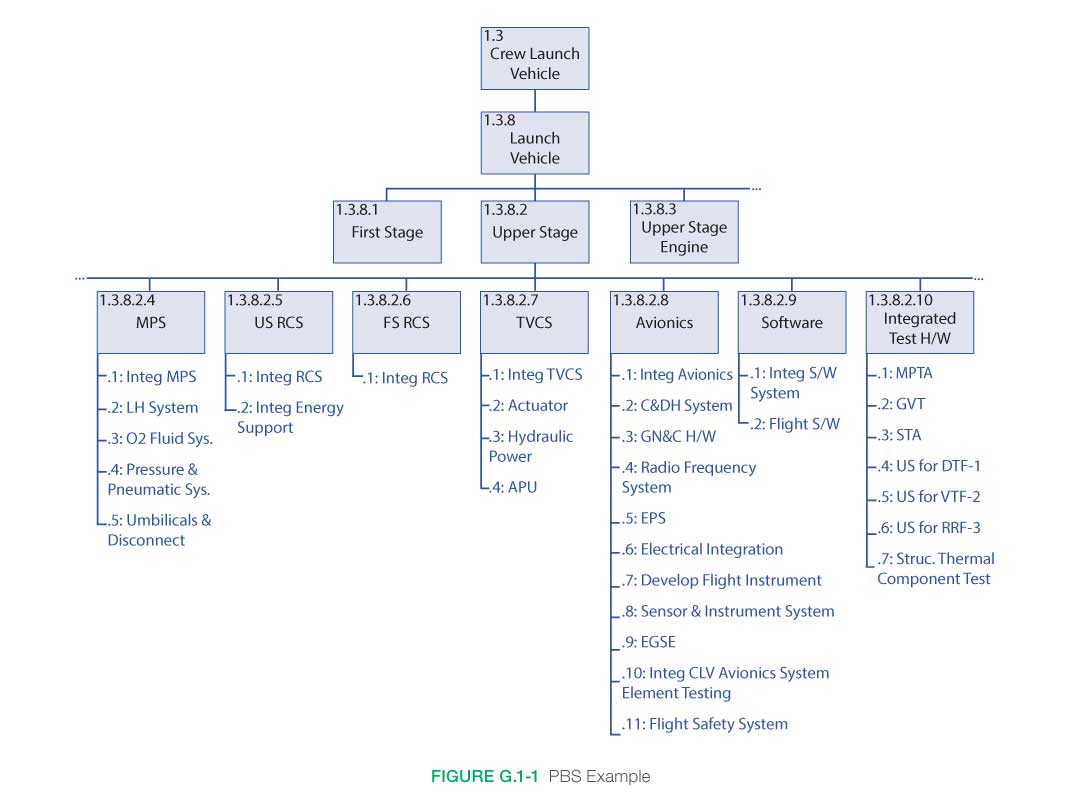

Product Breakdown Structure: A hierarchical breakdown of the hardware and software products of a program/project.

Product Implementation Process: A process used to generate a specified product of a product layer through buying, making, or reusing in a form consistent with the product life cycle phase exit (success) criteria and that satisfies the design solution definition-specified requirements (e.g., drawings, specifications).

Product Integration Process: A process used to transform the design solution definition into the desired end product of the product layer through assembly and integration of lower-level validated end products in a form that is consistent with the product life cycle phase exit (success) criteria and that satisfies the design solution definition requirements (e.g., drawings, specifications).

Product Realization: The act of making, buying, or reusing a product, or the assembly and integration of lower-level realized products into a new product, as well as the verification and validation that the product satisfies its appropriate set of requirements and the transition of the product to its customer.

Product Transition Process: A process used to transition a verified and validated end product that has been generated by product implementation or product integration to the customer at the next level in the system structure for integration into an end product or, for the top-level end product, transitioned to the intended end user.

Product Validation Process: A process used to confirm that a verified end product generated by product implementation or product integration fulfills (satisfies) its intended use when placed in its intended environment and to assure that any anomalies discovered during validation are appropriately resolved prior to delivery of the product (if validation is done by the supplier of the product) or prior to integration with other products into a higher-level assembled product (if validation is done by the receiver of the product). The validation is done against the set of baselined stakeholder expectations.

Product Verification Process: A process used to demonstrate that an end product generated from product implementation or product integration conforms to its design solution definition requirements as a function of the product life cycle phase and the location of the product layer end product in the system structure.

Production Readiness Review (PRR): A review for projects developing or acquiring multiple or similar systems greater than three or as determined by the project. The PRR determines the readiness of the system developers to efficiently produce the required number of systems. It ensures that the production plans, fabrication, assembly, integration-enabling products, operational support, and personnel are in place and ready to begin production.

Prognosis: The prediction of a system’s future health states, degradation, and Remaining Useful Life (RUL).

Program: A strategic investment by a mission directorate or mission support office that has a defined architecture and/or technical approach, requirements, funding level, and a management structure that initiates and directs one or more projects. A program defines a strategic direction that the Agency has identified as critical.

Program/System Definition Review: A review that examines the proposed program architecture and the flowdown to the functional elements of the system. The proposed program’s objectives and the concept for meeting those objectives are evaluated. Key technologies and other risks are identified and assessed. The baseline program plan, budgets, and schedules are presented.

Program Requirements: The set of requirements imposed on the program office, which are typically found in the program plan plus derived requirements that the program imposes on itself.

Program System Requirements Review: A review that evaluates the credibility and responsiveness of a proposed program requirements/architecture to the mission directorate requirements, the allocation of program requirements to the projects, and the maturity of the program’s mission/system definition.

Programmatic Requirements: Requirements set by the mission directorate, program, project, and PI, if applicable. These include strategic scientific and exploration requirements, system performance requirements, and schedule, cost, and similar nontechnical constraints.

Project: A specific investment having defined goals, objectives, requirements, life cycle cost, a beginning, and an end. A project yields new or revised products or services that directly address NASA’s strategic needs. The products may be produced or the services performed wholly in-house; by partnerships with Government, industry, or academia; or through contracts with private industry.

Project Plan: The document that establishes the project’s baseline for implementation, signed by the responsible program manager, Center Director, project manager, and the MDAA, if required.

Project Requirements: The set of requirements imposed on the project and developer, which are typically found in the project plan plus derived requirements that the project imposes on itself. It includes identification of activities and deliverables (end products and work products) and outputs of the development and operations.

Phase Product: An end product that is to be provided as a result of the activities of a given life cycle phase. The form depends on the phase—a product of early phases might be a simulation or model; a product of later phases may be the (final) end product itself.

Product Form: A representation of a product that depends on the development phase, current use, and maturity. Examples include mock-up, model, engineering unit, prototype unit, and flight unit.

Product Realization: The desired output from the application of the four product realization processes. The form of this product is dependent on the phase of the product life cycle and the phase exit (success) criteria.

Prototype: The prototype unit demonstrates form, fit, and function at a scale deemed to be representative of the final product operating in its operational environment. A subscale test article provides fidelity sufficient to permit validation of analytical models capable of predicting the behavior of full-scale systems in an operational environment. The prototype is used to “wring out” the design solution so that experience gained from the prototype can be fed back into design changes that will improve the manufacture, integration, and maintainability of a single flight item or the production run of several flight items.

– Q –

Quality Assurance: An independent assessment performed throughout a product’s life cycle in order to acquire confidence that the system actually produced and delivered is in accordance with its functional, performance, and design requirements.

– R –

Realized Product: The end product that has been implemented/integrated, verified, validated, and transitioned to the next product layer.

Recovery: An action taken to restore the functions necessary to achieve existing or redefined system goals after a fault/failure occurs.

Recursive: Value is added to the system by the repeated application of processes to design next lower-layer system products or to realize next upper-layer end products within the system structure. This also applies to repeating the application of the same processes to the system structure in the next life cycle phase to mature the system definition and satisfy phase exit (success) criteria.

Relevant Stakeholder: A subset of the term “stakeholder” that applies to people or roles that are designated in a plan for stakeholder involvement. Since “stakeholder” may describe a very large number of people, a lot of time and effort would be consumed by attempting to deal with all of them. For this reason, “relevant stakeholder” is used in most practice statements to describe the people identified to contribute to a specific task.

Relevant Environment: Not all systems, subsystems, and/or components need to be operated in the operational environment in order to satisfactorily address performance margin requirements or stakeholder expectations. Consequently, the relevant environment is the specific subset of the operational environment that is required to demonstrate critical “at risk” aspects of the final product performance in an operational environment.

Reliability: The measure of the degree to which a system ensures mission success by functioning properly over its intended life. It has a low and acceptable probability of failure, achieved through simplicity, proper design, and proper application of reliable parts and materials. In addition to long life, a reliable system is robust and fault tolerant.

Repeatable: A characteristic of a process that can be applied to products at any level of the system structure or within any life cycle phase.

Requirement: The agreed-upon need, desire, want, capability, capacity, or demand for personnel, equipment, facilities, or other resources or services by specified quantities for specific periods of time or at a specified time expressed as a “shall” statement. Acceptable form for a requirement statement is individually clear, correct, feasible to obtain, unambiguous in meaning, and can be validated at the level of the system structure at which it is stated. In pairs of requirement statements or as a set, collectively, they are not redundant, are adequately related with respect to terms used, and are not in conflict with one another.

Requirements Allocation Sheet: Documents the connection between allocated functions, allocated performance, and the physical system.

Requirements Management Process: A process used to manage the product requirements identified, baselined, and used in the definition of the products of each product layer during system design. It provides bidirectional traceability back to the top product layer requirements and manages the changes to established requirement baselines over the life cycle of the system products.
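Bidirectional traceability can be checked mechanically once each requirement records its parent. The sketch below is one possible check under assumed conventions: the requirement identifiers, the `parent_of` mapping, and the `trace_gaps` function are all hypothetical. It reports requirements that do not trace up to a top product layer requirement and top-level requirements that nothing traces down from.

```python
def trace_gaps(parent_of, top_level):
    """Find gaps in bidirectional traceability.

    parent_of: dict requirement id -> parent id (None if no parent recorded)
    top_level: set of top product layer requirement ids
    Returns (orphans, childless) as sorted lists.
    """
    def traces_to_top(req):
        seen = set()  # guards against accidental cycles in the links
        while req is not None and req not in seen:
            if req in top_level:
                return True
            seen.add(req)
            req = parent_of.get(req)
        return False

    # Upward direction: every lower-level requirement should reach the top.
    orphans = sorted(req for req in parent_of if not traces_to_top(req))
    # Downward direction: every top-level requirement should have a child.
    parents = set(parent_of.values())
    childless = sorted(req for req in top_level if req not in parents)
    return orphans, childless

# Hypothetical requirement links.
parent_of = {"SYS-1": "TOP-1", "SUB-1": "SYS-1", "SUB-2": None}
top_level = {"TOP-1", "TOP-2"}
orphans, childless = trace_gaps(parent_of, top_level)
```

In this example `SUB-2` has no recorded parent and `TOP-2` has not been flowed down to any lower-level requirement, so both would be flagged for review.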

Risk: In the context of mission execution, risk is the potential for performance shortfalls that may be realized in the future with respect to achieving explicitly established and stated performance requirements. The performance shortfalls may be related to any one or more of the following mission execution domains: (1) safety, (2) technical, (3) cost, and (4) schedule. (Source: NPR 8000.4, Agency Risk Management Procedural Requirements)

Risk Assessment: An evaluation of a risk item that determines (1) what can go wrong, (2) how likely it is to occur, (3) what the consequences are, (4) what the uncertainties associated with the likelihood and consequences are, and (5) what the mitigation plans are.

Risk-Informed Decision Analysis Process: A five-step process focusing first on objectives and next on developing decision alternatives with those objectives clearly in mind and/or using decision alternatives that have been developed under other systems engineering processes. The later steps of the process interrelate heavily with the Technical Risk Management Process.

Risk Management: Risk management includes Risk-Informed Decision-Making (RIDM) and Continuous Risk Management (CRM) in an integrated framework. RIDM informs systems engineering decisions through better use of risk and uncertainty information in selecting alternatives and establishing baseline requirements. CRM manages risks over the course of the development and the Implementation Phase of the life cycle to ensure that safety, technical, cost, and schedule requirements are met. This is done to foster proactive risk management, to better inform decision-making through better use of risk information, and then to more effectively manage Implementation risks by focusing the CRM process on the baseline performance requirements emerging from the RIDM process. (Source: NPR 8000.4, Agency Risk Management Procedural Requirements) These processes are applied at a level of rigor commensurate with the complexity, cost, and criticality of the program.

– S –

Safety: Freedom from those conditions that can cause death, injury, occupational illness, damage to or loss of equipment or property, or damage to the environment.

Search Space (or Alternative Space): The envelope of concept possibilities defined by design constraints and parameters within which alternative concepts can be developed and traded off.

Single-Project Programs: Programs that tend to have long development and/or operational lifetimes, represent a large investment of Agency resources, and have contributions from multiple organizations/agencies. These programs frequently combine program and project management approaches, which they document through tailoring.

Software: Computer programs, procedures, rules, and associated documentation and data pertaining to the development and operation of a computer system. Software also includes Commercial Off-The-Shelf (COTS), Government Off-The-Shelf (GOTS), Modified Off-The-Shelf (MOTS), embedded software, reuse, heritage, legacy, autogenerated code, firmware, and open source software components.

Note 1: For purposes of the NASA Software Release program only, the term “software,” as redefined in NPR 2210.1, Release of NASA Software, does not include computer databases or software documentation.

Note 2: Definitions for the terms COTS, GOTS, heritage software, MOTS, legacy software, software reuse, and classes of software are provided in NPR 7150.2, NASA Software Engineering Requirements. (Source: NPD 7120.4, NASA Engineering and Program/Project Management Policy)

Solicitation: The vehicle by which information is solicited from contractors for the purpose of awarding a contract for products or services. Any request to submit offers or quotations to the Government. Solicitations under sealed bid procedures are called “invitations for bids.” Solicitations under negotiated procedures are called “requests for proposals.” Solicitations under simplified acquisition procedures may require submission of either a quotation or an offer.

Specification: A document that prescribes completely, precisely, and verifiably the requirements, design, behavior, or characteristics of a system or system component. In NPR 7123.1, “specification” is treated as a “requirement.”

Stakeholder: A group or individual who is affected by or has an interest or stake in a program or project. There are two main classes of stakeholders. See “customers” and “other interested parties.”

Stakeholder Expectations: A statement of needs, desires, capabilities, and wants that are not expressed as a requirement (not expressed as a “shall” statement) is referred to as an “expectation.” Once the set of expectations from applicable stakeholders is collected, analyzed, and converted into a “shall” statement, the expectation becomes a requirement. Expectations can be stated in either qualitative (non-measurable) or quantitative (measurable) terms. Requirements are always stated in quantitative terms. Expectations can be stated in terms of functions, behaviors, or constraints with respect to the product being engineered or the process used to engineer the product.

Stakeholder Expectations Definition Process: A process used to elicit and define use cases, scenarios, concept of operations, and stakeholder expectations for the applicable product life cycle phases and product layer. The baselined stakeholder expectations are used for validation of the product layer end product.

Standing Review Board: The board responsible for conducting independent reviews (life cycle and special) of a program or project and providing objective, expert judgments to the convening authorities. The reviews are conducted in accordance with approved Terms of Reference (ToR) and life cycle requirements per NPR 7123.1.

State Diagram: A diagram that shows the flow in the system in response to varying inputs in order to characterize the behavior of the system.
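
As an illustration only, the behavior a state diagram captures can be sketched as a transition table that maps a (current state, input) pair to the next state. The state and input names below are hypothetical and are not taken from this handbook.

```python
# Hypothetical states and inputs, chosen only to illustrate the idea.
TRANSITIONS = {
    ("standby", "activate"): "operating",
    ("operating", "fault"): "safe_mode",
    ("operating", "deactivate"): "standby",
    ("safe_mode", "reset"): "standby",
}


def run(initial_state, inputs):
    """Return the sequence of states visited while consuming the inputs."""
    state = initial_state
    visited = [state]
    for event in inputs:
        # An input with no defined transition leaves the state unchanged.
        state = TRANSITIONS.get((state, event), state)
        visited.append(state)
    return visited


if __name__ == "__main__":
    print(run("standby", ["activate", "fault", "reset"]))
```

Each key in the table corresponds to one arrow in the diagram, so tracing a list of inputs through `run` shows how the system responds to them in sequence.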

Success Criteria: Specific accomplishments that need to be satisfactorily demonstrated to meet the objectives of a technical review so that a technical effort can progress further in the life cycle. Success criteria are documented in the corresponding technical review plan. Formerly referred to as “exit” criteria, a term still used in some NPDs/NPRs.

Surveillance: The monitoring of a contractor’s activities (e.g., status meetings, reviews, audits, site visits) for progress and production and to demonstrate fiscal responsibility, ensure crew safety and mission success, and determine award fees for extraordinary (or penalty fees for substandard) contract execution.

System: (1) The combination of elements that function together to produce the capability to meet a need. The elements include all hardware, software, equipment, facilities, personnel, processes, and procedures needed for this purpose. (2) The end product (which performs operational functions) and enabling products (which provide life cycle support services to the operational end products) that make up a system.

System Acceptance Review: The SAR verifies the completeness of the specific end products in relation to their expected maturity level, assesses compliance to stakeholder expectations, and ensures that the system has sufficient technical maturity to authorize its shipment to the designated operational facility or launch site.

System Definition Review: The Mission/System Definition Review (MDR/SDR) evaluates whether the proposed mission/system architecture is responsive to the program mission/system functional and performance requirements and requirements have been allocated to all functional elements of the mission/system. This review is used for projects and for single-project programs.

System Integration Review: The SIR ensures that segments, components, and subsystems are on schedule to be integrated into the system and that integration facilities, support personnel, and integration plans and procedures are on schedule to support integration.

System Requirements Review: For a program, the SRR is used to ensure that its functional and performance requirements are properly formulated and correlated with the Agency and mission directorate strategic objectives. For a system/project, the SRR evaluates whether the functional and performance requirements defined for the system are responsive to the program’s requirements and ensures that the preliminary project plan and requirements will satisfy the mission.

System Safety Engineering: The application of engineering and management principles, criteria, and techniques to achieve acceptable mishap risk within the constraints of operational effectiveness and suitability, time, and cost throughout all phases of the system life cycle.

System Structure: A system structure is made up of a layered structure of product-based WBS models. (See “Work Breakdown Structure” and “Product Breakdown Structure.”)

Systems Approach: The application of a systematic, disciplined engineering approach that is quantifiable, recursive, iterative, and repeatable for the development, operation, and maintenance of systems integrated into a whole throughout the life cycle of a project or program.

Systems Engineering (SE) Engine: The SE model shown in Figure 2.1-1 that provides the 17 technical processes and their relationships with each other. The model is called an “SE engine” in that the appropriate set of processes is applied to the products being engineered to drive the technical effort.

Systems Engineering Management Plan (SEMP): The SEMP identifies the roles and responsibility interfaces of the technical effort and specifies how those interfaces will be managed. The SEMP is the vehicle that documents and communicates the technical approach, including the application of the common technical processes; resources to be used; and the key technical tasks, activities, and events along with their metrics and success criteria.

– T –

Tailoring: A process used to adjust or seek relief from a prescribed requirement to accommodate the needs of a specific task or activity (e.g., program or project). The tailoring process results in the generation of deviations and waivers depending on the timing of the request.

OR

The process used to seek relief from NPR 7123.1 requirements consistent with program or project objectives, allowable risk, and constraints.

Technical Assessment Process: A process used to help monitor progress of the technical effort and provide status information for support of the system design, product realization, and technical management processes. A key aspect of the process is conducting life cycle and technical reviews throughout the system life cycle.

Technical Cost Estimate: The cost estimate of the technical work on a project created by the technical team based on its understanding of the system requirements and operational concepts and its vision of the system architecture.

Technical Data Management Process: A process used to plan for, acquire, access, manage, protect, and use data of a technical nature to support the total life cycle of a system. This process is used to capture trade studies, cost estimates, technical analyses, reports, and other important information.

Technical Data Package: An output of the Design Solution Definition Process, it evolves from phase to phase, starting with conceptual sketches or models and ending with complete drawings, parts list, and other details needed for product implementation or product integration.

Technical Measures: An established set of measures based on the expectations and requirements that will be tracked and assessed to determine overall system or product effectiveness and customer satisfaction. Common terms for these measures are: Measures Of Effectiveness (MOEs), Measures Of Performance (MOPs), and Technical Performance Measures (TPMs).

Technical Performance Measures: A set of performance measures that are monitored by comparing the current actual achievement of the parameters with that anticipated at the current time and on future dates. TPMs are used to confirm progress and identify deficiencies that might jeopardize meeting a system requirement. Assessed parameter values that fall outside an expected range around the anticipated values indicate a need for evaluation and corrective action. Technical performance measures are typically selected from the defined set of Measures Of Performance (MOPs).
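
A minimal sketch of the comparison described above, assuming the expected range is a symmetric tolerance around the anticipated value. The function name, the dry-mass parameter, and all numbers are hypothetical and serve only as an example.

```python
def assess_tpm(actual, anticipated, tolerance):
    """Classify one TPM reading against its anticipated value.

    Assumes the expected range is anticipated +/- tolerance (an
    illustrative choice; a project may define the range differently).
    """
    if abs(actual - anticipated) <= tolerance:
        return "within expected range"
    return "evaluate and consider corrective action"


if __name__ == "__main__":
    # Hypothetical dry-mass TPM in kilograms at two assessment points.
    readings = [
        ("assessment 1", 122.0, 120.0),
        ("assessment 2", 127.0, 120.0),
    ]
    for label, actual, anticipated in readings:
        print(label, assess_tpm(actual, anticipated, tolerance=5.0))
```

In practice the anticipated value changes over time, so the same check would be repeated at each assessment point against the value planned for that date.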

Technical Planning Process: A process used to plan for the application and management of each common technical process. It is also used to identify, define, and plan the technical effort applicable to the product life cycle phase for product layer location within the system structure and to meet project objectives and product life cycle phase exit (success) criteria. A key document generated by this process is the SEMP.

Technical Requirements: A set of requirements imposed on the end products of the system, including the system itself. Also referred to as “product requirements.”

Technical Requirements Definition Process: A process used to transform the stakeholder expectations into a complete set of validated technical requirements expressed as “shall” statements that can be used for defining a design solution for the Product Breakdown Structure (PBS) model and related enabling products.

Technical Risk: Risk associated with the achievement of a technical goal, criterion, or objective. It applies to undesired consequences related to technical performance, human safety, mission assets, or environment.

Technical Risk Management Process: A process used to make risk-informed decisions and examine, on a continuing basis, the potential for deviations from the project plan and the consequences that could result should they occur.

Technical Team: A group of multidisciplinary individuals with appropriate domain knowledge, experience, competencies, and skills who are assigned to a specific technical task.

Technology Readiness Assessment Report: A document required for transition from Phase B to Phase C/D demonstrating that all systems, subsystems, and components have achieved a level of technological maturity with demonstrated evidence of qualification in a relevant environment.

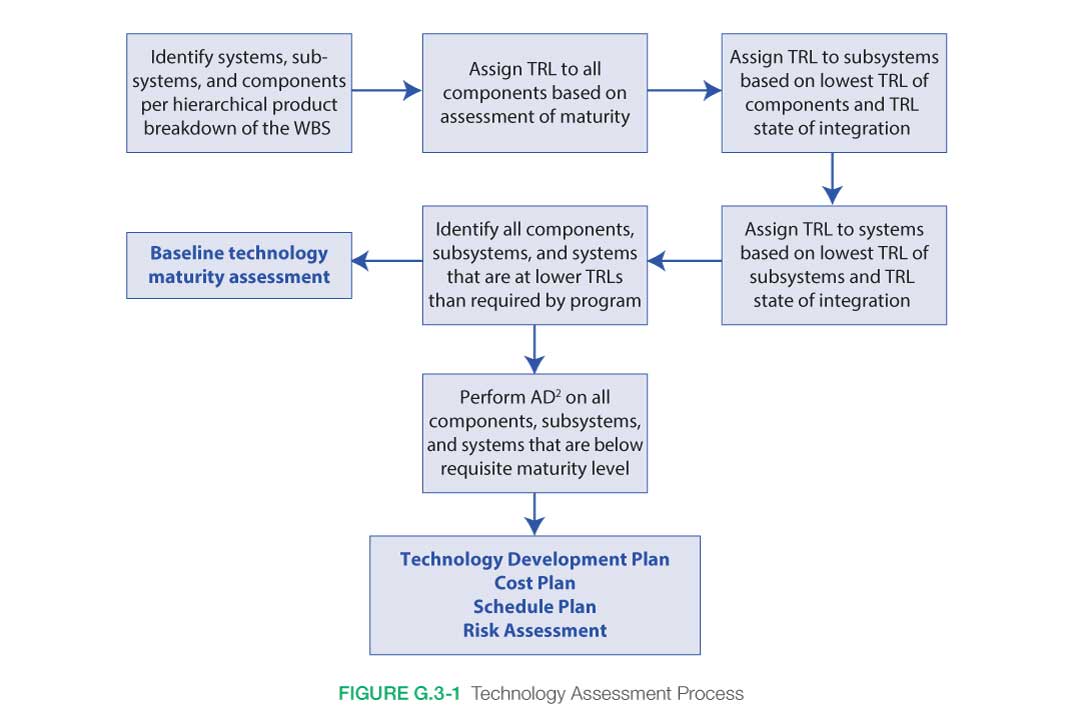

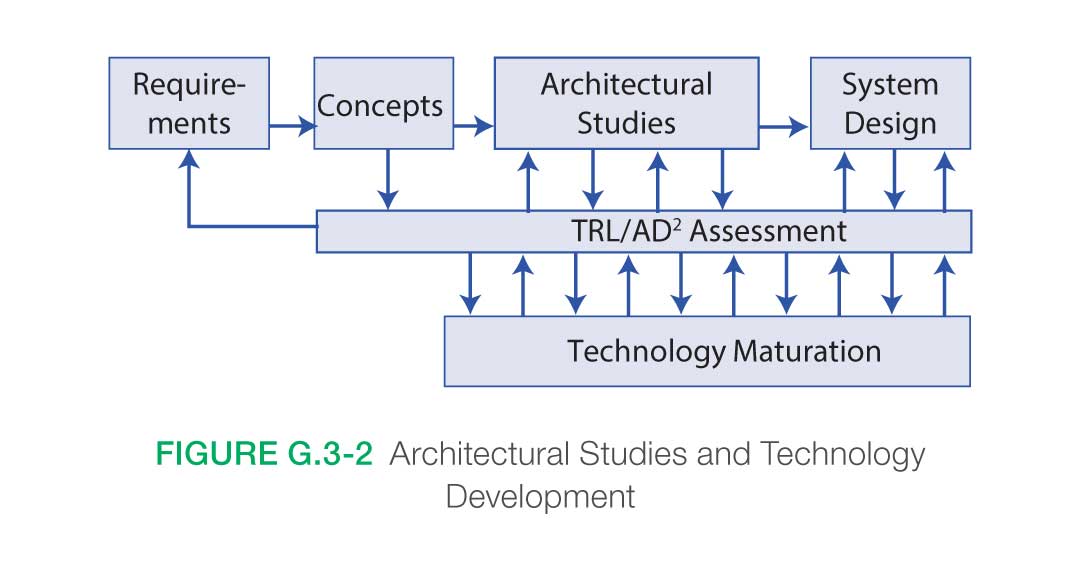

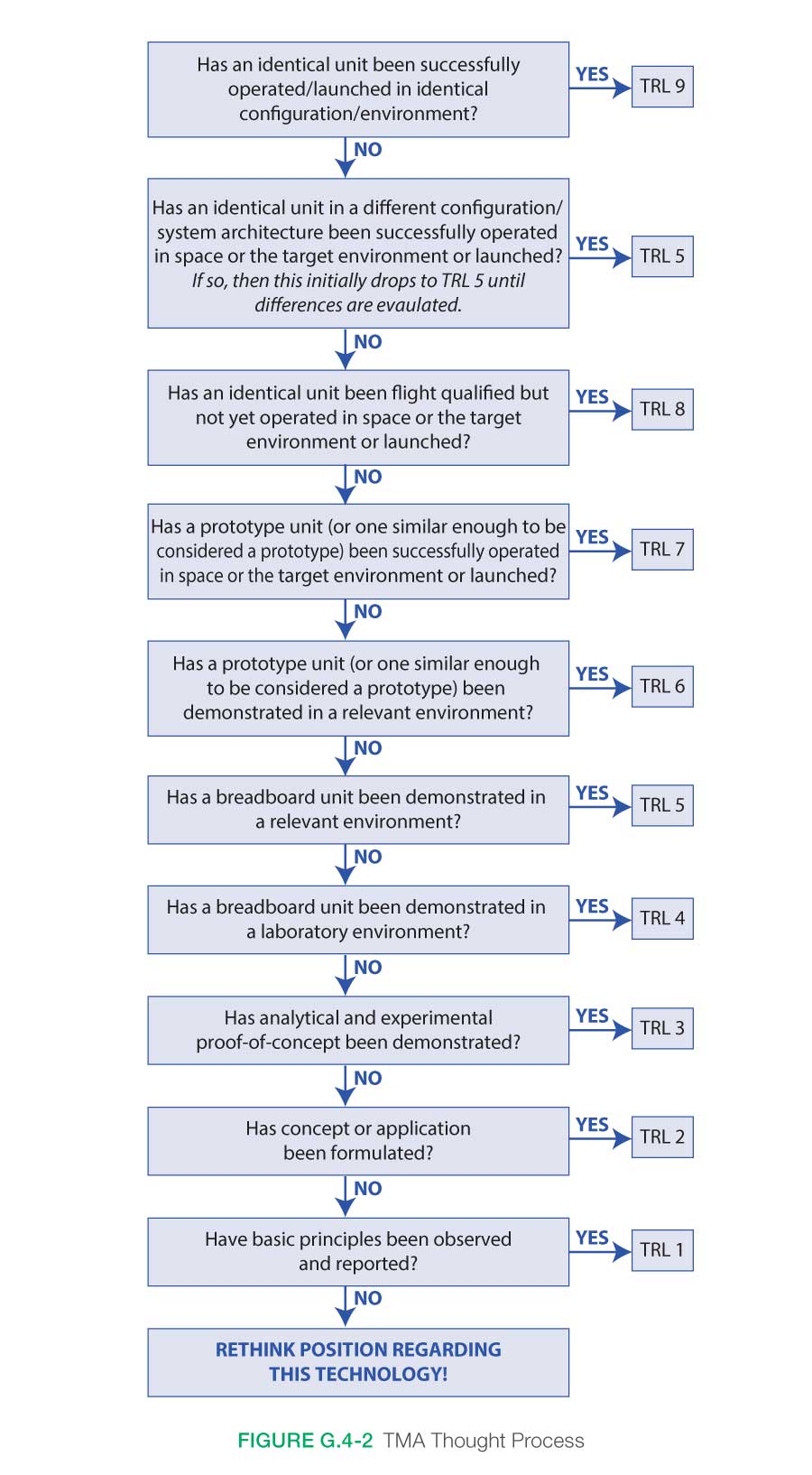

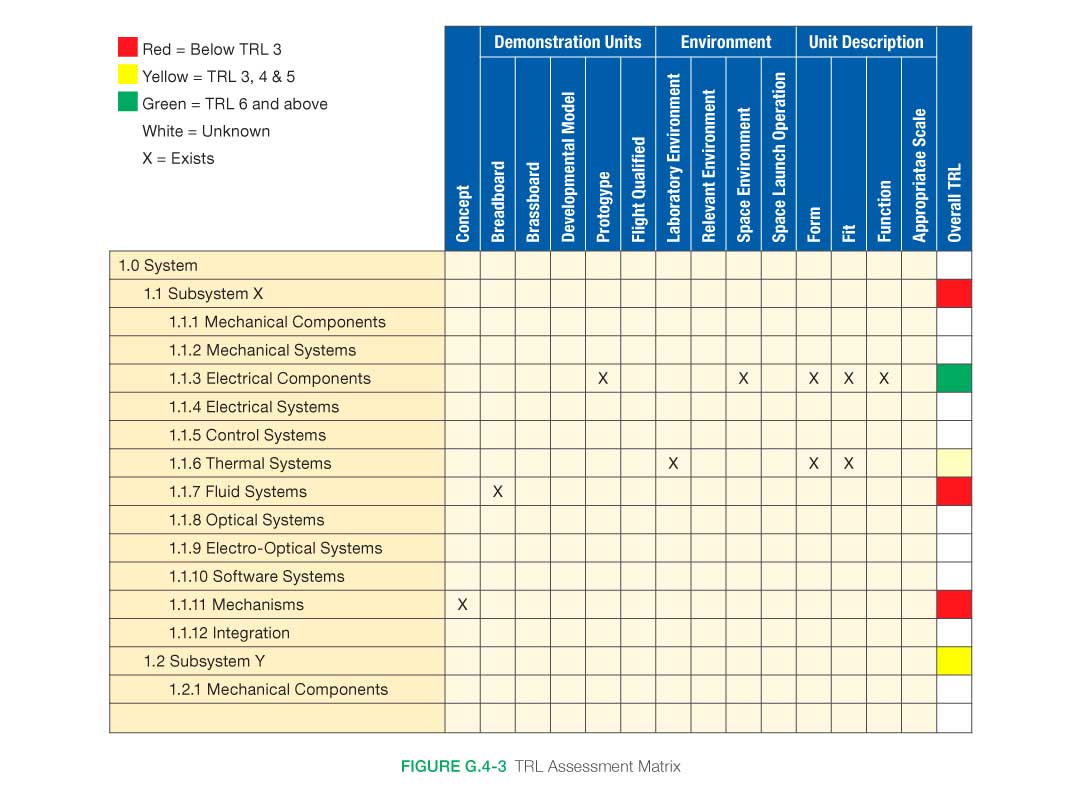

Technology Assessment: A systematic process that ascertains the need to develop or infuse technological advances into a system. The technology assessment process makes use of basic systems engineering principles and processes within the framework of the Product Breakdown Structure (PBS). It is a two-step process consisting of (1) the determination of the current technological maturity in terms of Technology Readiness Levels (TRLs) and (2) the determination of the difficulty associated with moving a technology from one TRL to the next through the use of the Advancement Degree of Difficulty Assessment (AD2).

Technology Development Plan: A document required for transition from Phase A to Phase B identifying technologies to be developed, heritage systems to be modified, alternative paths to be pursued, fallback positions and corresponding performance descopes, milestones, metrics, and key decision points. It is incorporated in the preliminary project plan.

Technology Maturity Assessment: A process to determine a system’s technological maturity based on Technology Readiness Levels (TRLs).

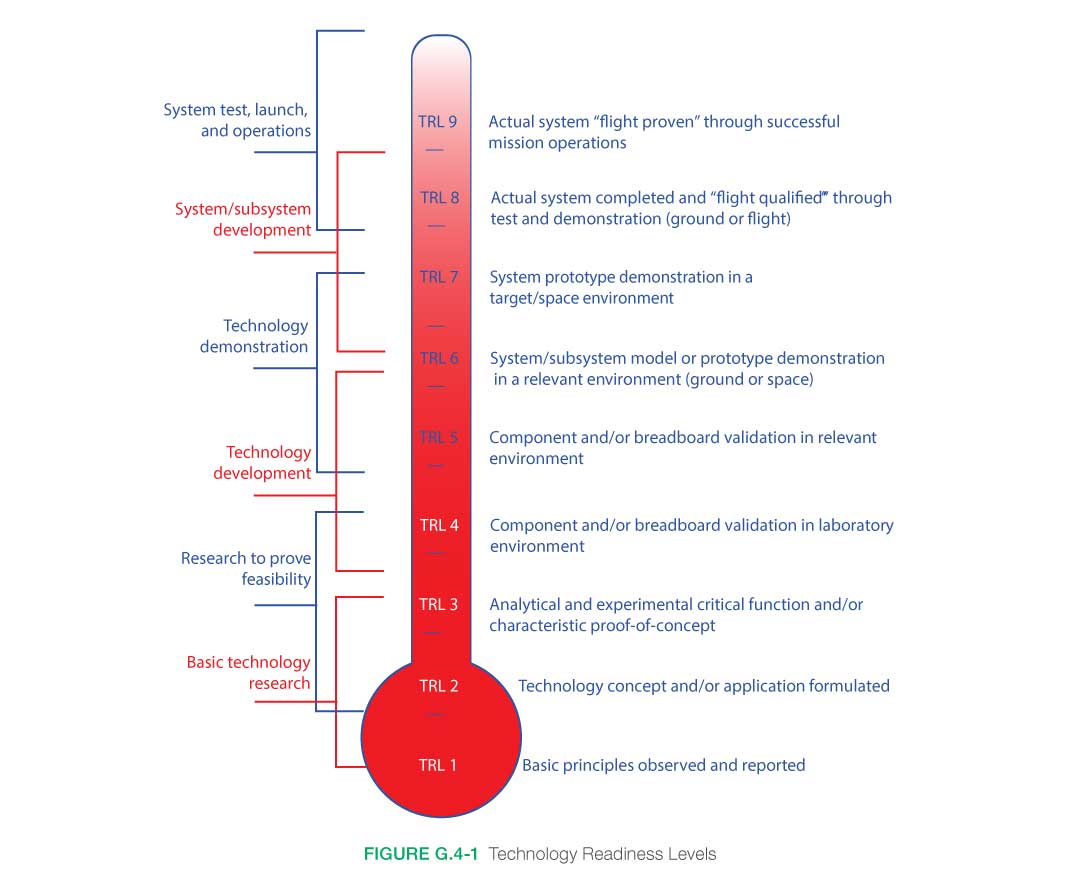

Technology Readiness Level: Provides a scale against which to measure the maturity of a technology. TRLs range from 1, basic technology research, to 9, systems test, launch, and operations. Typically, a TRL of 6 (i.e., technology demonstrated in a relevant environment) is required for a technology to be integrated into an SE process.
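
The TRL scale and the typical threshold can be expressed as a simple check. This is a sketch only: the function and constant names are hypothetical, and the threshold of 6 reflects the "typically" in the definition above rather than a fixed rule.

```python
TRL_MIN = 1  # basic technology research
TRL_MAX = 9  # systems test, launch, and operations
TYPICAL_INTEGRATION_TRL = 6  # technology demonstrated in a relevant environment


def meets_typical_threshold(trl):
    """Return True when a TRL is at or above the typical threshold of 6."""
    if not TRL_MIN <= trl <= TRL_MAX:
        raise ValueError(f"TRL must be between {TRL_MIN} and {TRL_MAX}, got {trl}")
    return trl >= TYPICAL_INTEGRATION_TRL


if __name__ == "__main__":
    for level in (4, 6, 9):
        print(level, meets_typical_threshold(level))
```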

Test: The use of a realized end product to obtain detailed data to verify or validate performance or to provide sufficient information to verify or validate performance through further analysis.

Test Readiness Review: A review that ensures that the test article (hardware/software), test facility, support personnel, and test procedures are ready for testing and data acquisition, reduction, and control.

Threshold Requirements: A minimum acceptable set of technical and project requirements; the set could represent the descope position of the project.