Tomorrow’s technology will build on historical breakthroughs.

This article is from the 2015 NESC Technical Update.

Propelling technology forward normally involves building on past accomplishments. That is what prompted Cornelius (Neil) Dennehy, NASA Technical Fellow for Guidance, Navigation, and Control (GNC), to take a look back at NASA’s first space rendezvous. A half-century ago, two crewed Gemini spacecraft, Gemini 6A and Gemini 7, conquered the technological milestone of meeting up with one another while orbiting 160 nautical miles above the planet.

Though engaged in the study of space rendezvous for more than 20 years, Mr. Dennehy was struck by the enormity of the task that lay before those early astronauts and engineers. “They had to start with a clean sheet of paper,” he says. “They had to work out the question of relative motion, the radar sensors, the modeling and simulation, the training. They had to create it all. Back then, they didn’t know what they didn’t know, so they were unencumbered and creative. They were excited about breaking new technical ground.”

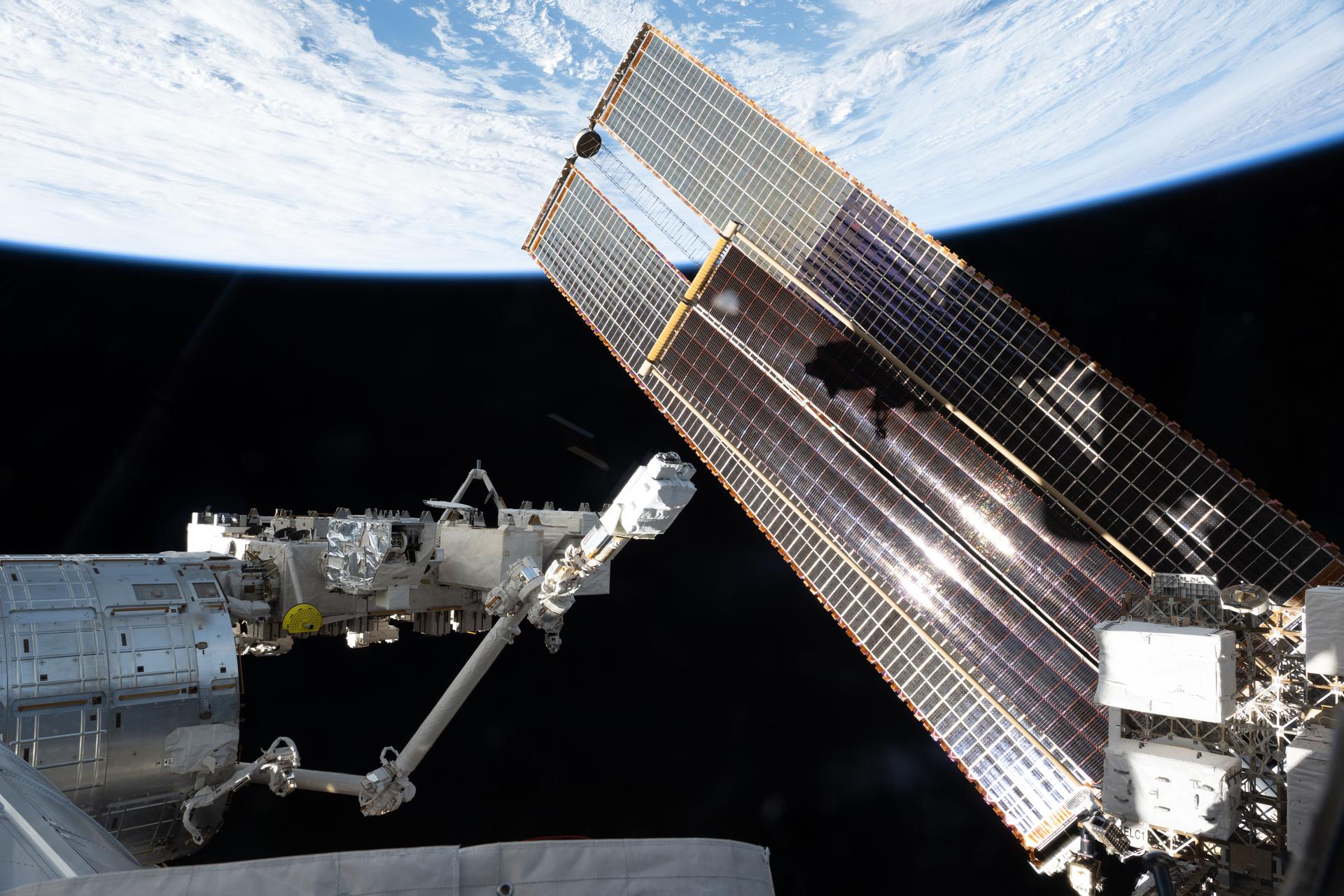

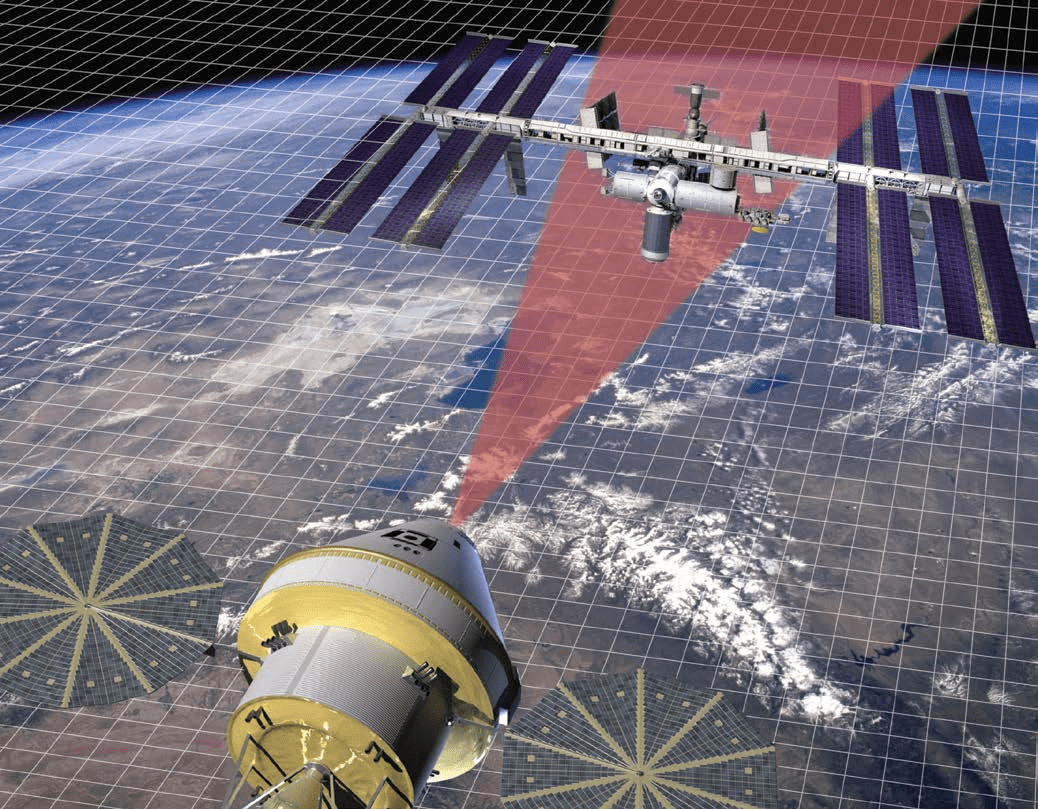

A fresh look at this first proximity flight in low Earth orbit inspired Mr. Dennehy, bringing a renewed vigor to his current work on rendezvous and capture capabilities. And it reminded him that today’s state-of-the-art sensors, which are guiding unmanned resupply spacecraft to their docking ports at the International Space Station (ISS), and the plans that are underway for a rendezvous mission with an asteroid, were built on that earlier Gemini success and the subsequent refinement of rendezvous with Apollo and Shuttle. And it cemented his conclusion that continued evolution in rendezvous technology will be the key to getting humans to Mars.

“The fundamental physics have already been flight proven,” explains Mr. Dennehy. Following that first space rendezvous in 1965, NASA refined its capabilities in later Gemini missions, achieving the first docking of two spacecraft. From there, Apollo missions honed NASA’s Earth orbit rendezvous skills. Apollo 10 saw the first lunar-orbit rendezvous. Then the Shuttle Program saw more than 50 rendezvous and docking missions, with satellites in need of upgrades or repairs, with Mir, the Hubble Space Telescope, and in-space assembly and docking of the ISS.

“Our rendezvous operations became a very polished, well-orchestrated process,” he says. “We know how to do it. Now we need to focus on increasing the performance of these technologies, driving costs down, and finding ways to do rendezvous more autonomously. There are still challenges out there for us.”

To that end, Mr. Dennehy is leading a system-level capability leadership team for the Agency focused on rendezvous and capture, and for the past 5 years he has also been running a grassroots autonomous rendezvous and docking community of practice. Last summer he gave a lecture in Europe on the numerous lessons learned over the years from relative motion missions. “It’s an active area of study right now,” he says. “We’re working hard to make sure we have the right capabilities for the future needs of rendezvous and capture — workforce, facilities, tools, testbeds — all of the ingredients we’ll need on a journey to Mars.”

Advancing Existing Technology

Mr. Dennehy describes rendezvous and capture as more than just a single-discipline endeavor. “It is a true systems capability — it’s GNC, software, mechanical systems, avionics, sensors, vision processing — a true multidisciplinary feat.” A unique combination of planning tools on the ground, onboard processing, control algorithms, navigation algorithms, docking mechanisms, and capture hardware is required for a successful meet-up in space.

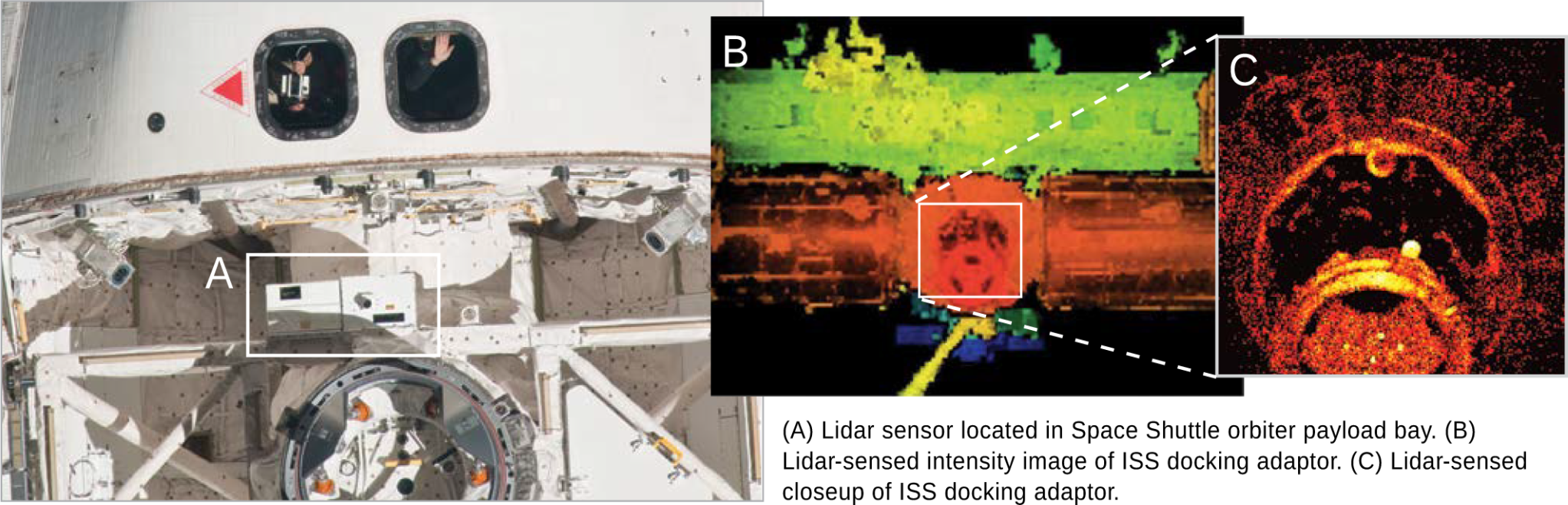

But the area where Mr. Dennehy has focused much attention is lidar, also known as Light Detection and Ranging. “Lidar has become one of the basic relative navigation sensors we use on missions,” he says. “Lidar sensors are most useful when there are mission requirements for obtaining lighting-independent relative navigation information or when there is a direct ranging requirement. It comes down to the sensors. If you can’t get data about where the target is relative to your position, you won’t have the data needed to accomplish a rendezvous. It won’t occur without the right sensor technology.”

There’s an art to selecting the appropriate relative navigation sensor suite for each rendezvous mission application. Typically, a combination of wide-angle and narrow-angle visible cameras, infrared cameras, laser range finders, and more recently flash or scanning lidar relative navigation sensors are used, all of which are optical-based sensors. The shift from radar-based to optical-based sensors for rendezvous, which occurred over the decades since the first radar-guided Gemini rendezvous, was driven by the desire to develop a fully autonomous rendezvous, proximity operations, and docking functional capability.

“Over the past few years, optical relative navigation sensors have successfully been used on the European Space Agency’s Automated Transfer Vehicle, the Japan Aerospace Exploration Agency’s H-II Transfer Vehicle, as well as the U.S. commercial Cygnus and Dragon spacecraft, to rendezvous with ISS for cargo resupply. The one noteworthy exception is with Russian spacecraft that rendezvous with the ISS. They employ radar-based relative navigation sensing, which has been their legacy approach since the 1960’s.”

Radars will continue to have a role as a GNC relative navigation sensor, particularly for planetary landing mission applications where they are used for altimetry and velocity measurement, Mr. Dennehy says. “In fact, every single spacecraft, robotic or manned, that landed on the Moon or Mars, has used radar. With continued investments in lidar technology development, I can foresee the day when planetary landings will be accomplished with relatively small, low power, high-performance lidar sensors, potentially replacing radars. There is also the possibility of multi-functional lidar sensors for future mission applications that will perform direct relative range and range rate measurements as well as providing the raw data needed for target attitude pose estimation and hazard detection.”

Over the past decade, lidar sensors have become an increasingly intriguing relative navigation option for GNC engineers designing rendezvous missions. Lidar works similarly to radar, but uses laser light pulses instead of radio-frequency electromagnetic pulses to measure the distance from and bearing to a target object, like another spacecraft. The laser return data collected must then be processed in order to reach a relative navigation state. Lidar sensors can not only provide the range and bearing to the target but can also provide the attitude orientation (or “pose”) of the target relative to the chase spacecraft. Several NESC assessments, led by Mr. Dennehy, have focused on lidar technology. “We want to help characterize their performance and the flight software algorithms that process the data.” It is a step toward improving the reliability and lifecycle length needed for prolonged missions in space.
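The ranging principle described above can be illustrated with a minimal sketch. It assumes an idealized pulse with a known round-trip time and a bearing given as azimuth and elevation angles; the function names and the sensor-frame axis convention (x along the boresight) are hypothetical choices made for illustration, not anything taken from a flight system. Real lidar processing also involves calibration, noise filtering, and pose estimation, none of which is shown here.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact by definition)


def tof_range(round_trip_s: float) -> float:
    """Range in meters from a laser pulse's round-trip time of flight.

    The pulse travels to the target and back, so the one-way
    distance is half of the total path length.
    """
    return C * round_trip_s / 2.0


def relative_position(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert a range and bearing into Cartesian coordinates.

    Assumed (hypothetical) sensor frame: x along the boresight,
    azimuth measured in the x-y plane, elevation measured up from it.
    """
    x = range_m * math.cos(elevation_rad) * math.cos(azimuth_rad)
    y = range_m * math.cos(elevation_rad) * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)
```

For a sense of scale, a round-trip time of one microsecond corresponds to a range of roughly 150 m, which is why this kind of sensor needs very precise timing at close range.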

Mr. Dennehy recently led an NESC assessment to evaluate the lidar-based natural feature tracking technology planned for OSIRIS-REx (Origins-Spectral Interpretation-Resource Identification-Security-Regolith Explorer). “It’s an asteroid sample return mission set to launch in September 2016. The spacecraft will rendezvous with an asteroid and through a controlled touch-and-go (TAG) maneuver, will collect a sample and bring it home.” What complicates this mission is the rendezvous target. The motion of small, distant bodies is often not well known or characterized. The target is not another known, cooperative spacecraft, and the spacecraft is not engaging with a well-defined docking port on ISS. “It’s a rendezvous between a spacecraft and a natural object, a primitive body,” he says.

Aside from advances in lidar, “we’ll also need higher degrees of autonomy and operational flexibility,” states Mr. Dennehy, for managing a rendezvous that could be happening millions of miles away from Earth. In a mission like OSIRIS-REx, ground operations will be doing navigation and flight dynamics, but only up to a point. “The time it will take a signal to travel to the spacecraft and back for processing on the ground will be on the order of minutes,” Mr. Dennehy says. “When OSIRIS-REx is doing its TAG maneuver, it will have to do it autonomously since the ground can’t realistically be in the loop.”
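The "order of minutes" figure follows directly from the speed of light. The sketch below is a back-of-the-envelope calculation only: the article does not give the spacecraft's distance from Earth at the time of the TAG maneuver, so the 1 astronomical unit used in the example is an assumed placeholder, and the function name is hypothetical.

```python
C = 299_792_458.0          # speed of light in vacuum, m/s (exact by definition)
AU_M = 149_597_870_700.0   # one astronomical unit in meters (exact by definition)


def round_trip_light_time_s(distance_m: float) -> float:
    """Seconds for a signal to reach the spacecraft and return to Earth.

    Ignores ground processing time and any relay delays, so it is a
    lower bound on the real command-and-response latency.
    """
    return 2.0 * distance_m / C


# Assumed example distance of 1 AU; not a value stated in the article.
delay_min = round_trip_light_time_s(1.0 * AU_M) / 60.0
print(f"Round-trip light time at 1 AU: {delay_min:.1f} minutes")
```

At the assumed 1 AU, the round trip alone takes about 16.6 minutes, far longer than a touch-and-go contact can wait for a ground command.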

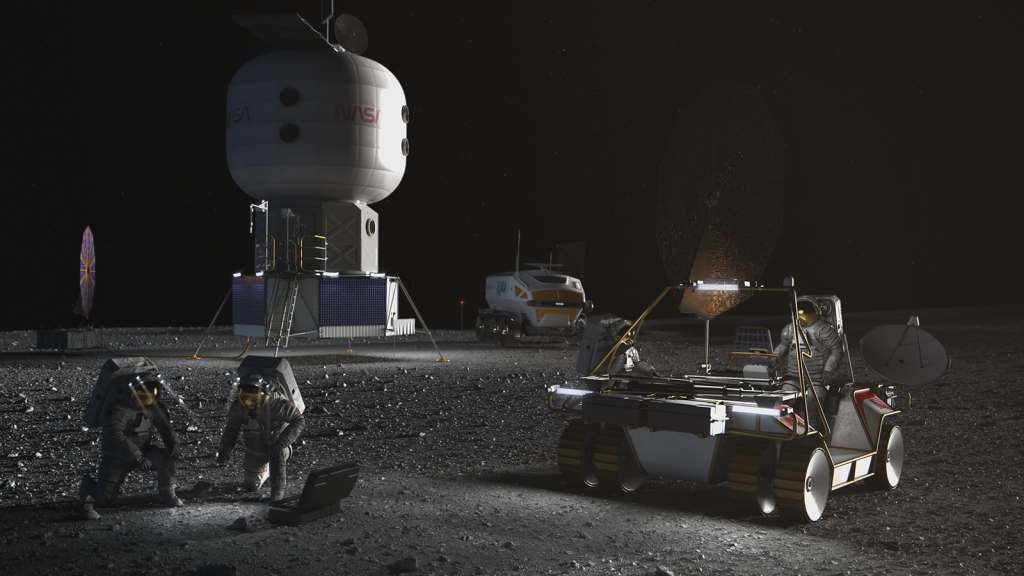

What is learned from OSIRIS-REx can then be applied to another mission — a Mars sample return. “A lander will go down to the surface, gather samples of soil, and launch itself back to orbit and rendezvous with an orbiting craft.” This could mean in-space assembly of assets that would involve rendezvous and docking with platforms and other spacecraft — even further out into the solar system. “Autonomous rendezvous and capture will be an integral element of going to Mars,” he says.

Autonomous rendezvous could also lead to lower operational costs. More autonomy may eventually reduce the workload for on-ground crews or the pilots and crews in the spacecraft itself. “But we’ll have to work up to that very carefully, by advancing this system capability in a careful and well-thought-out manner.”

“We’ve certainly come a long way, but we have a long way to go,” says Mr. Dennehy. That is why building on what has already been accomplished is so important. “The environment has changed and we have to find more affordable ways to do this. We can’t afford the development of entirely new spacecraft-unique rendezvous solutions each time a future mission application arises. As creative engineers, we’d all like to start with that clean sheet of paper and show how innovative we are, but we need to both leverage our existing rendezvous architecture for our future missions and increase the levels of autonomous operations,” he says. “That will get both implementation and operations costs down so that we can fly even more missions, and that’s what we all want to do here at NASA.”