2021 in Review: Highlights from NASA in Silicon Valley

by Rachel Hoover

With 2022 on the horizon, join us as we look back at the highlights of 2021 at NASA’s Ames Research Center in California’s Silicon Valley.

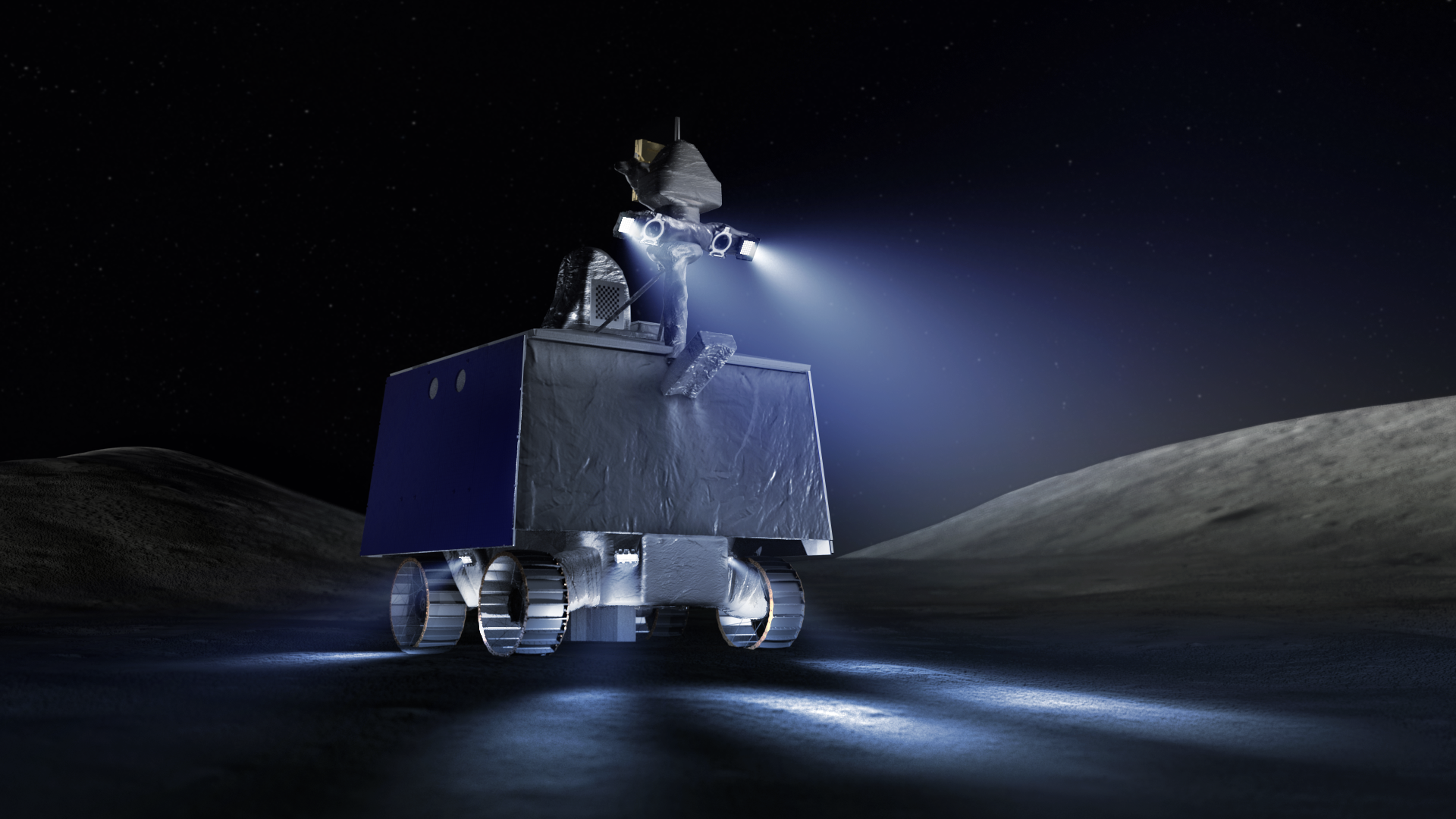

1) NASA’s water-hunting Moon rover, the Volatiles Investigating Polar Exploration Rover, made great strides this year. The VIPER team successfully completed practice runs of the full-scale assembly of the Artemis program’s lunar rover in VIPER’s new clean room. Two rounds of egress testing let rover drivers practice exiting the lander and rolling onto the rocky surface of the Moon. NASA also announced the landing site selected for the robotic rover, which will be delivered to the Nobile region of the Moon’s South Pole in late 2023 as part of the Commercial Lunar Payload Services initiative. NASA also chose eight new VIPER science team members and their proposals to expand and complement VIPER’s already existing science team and planned investigations. This year’s progress contributed to VIPER’s completion of its Critical Design Review, turning the mission’s focus toward construction of the rover beginning in late 2022.

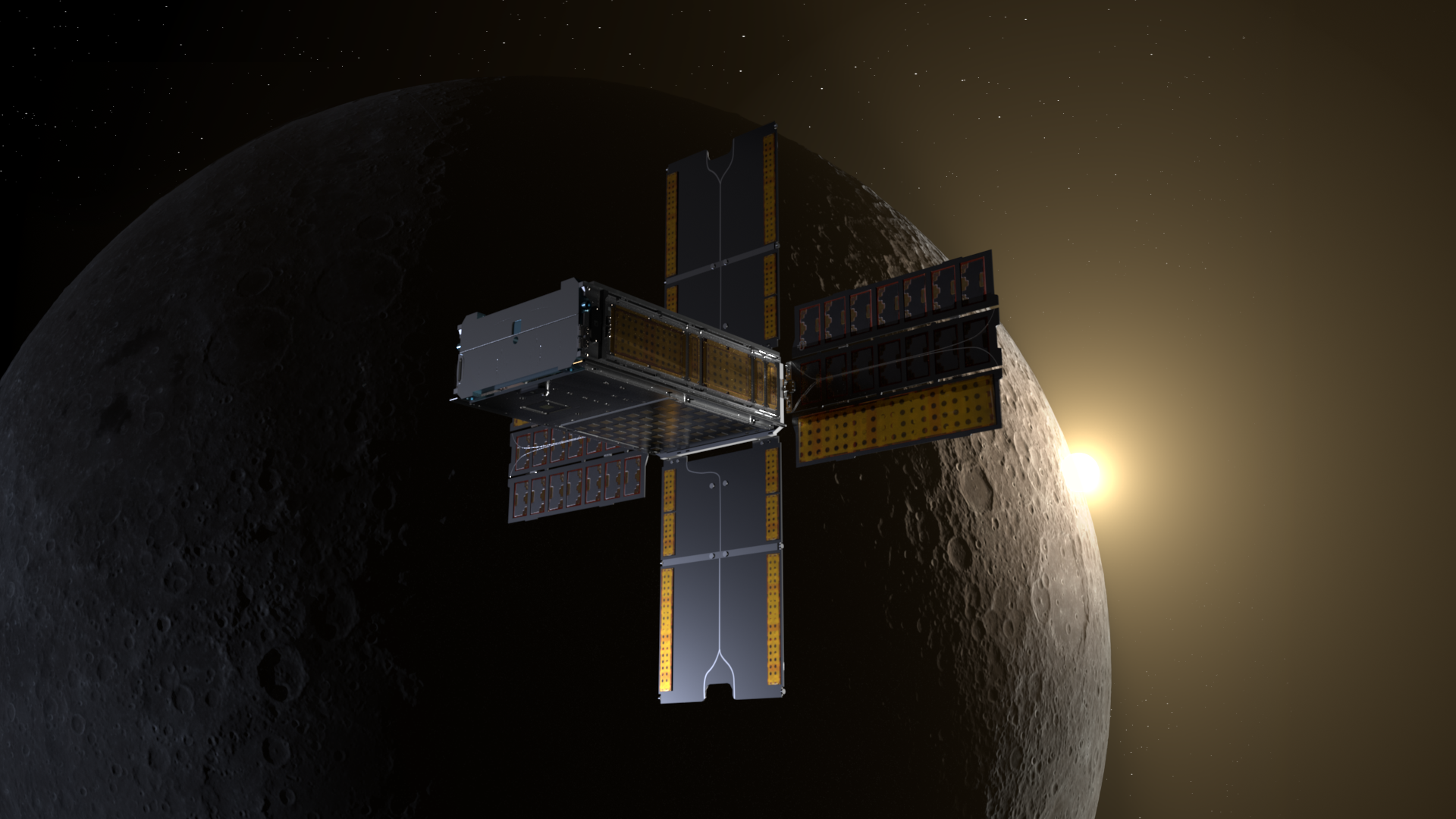

2) Artemis. The CAPSTONE CubeSat readied for its 2022 launch to a never-before-used orbit near the Moon. CAPSTONE is a pathfinder for NASA’s Moon-orbiting Gateway outpost that is part of NASA’s Artemis program. It will help verify the dynamics of a unique orbit and demonstrate innovative navigation technology and communications capabilities.

An integral part of ensuring astronaut safety is Orion’s launch abort system, which can pull the crew module away from the rocket in a split-second if an emergency arises during launch. Researchers at Ames are using cutting-edge computational fluid dynamics software to better understand how different abort scenarios affect vibration levels.

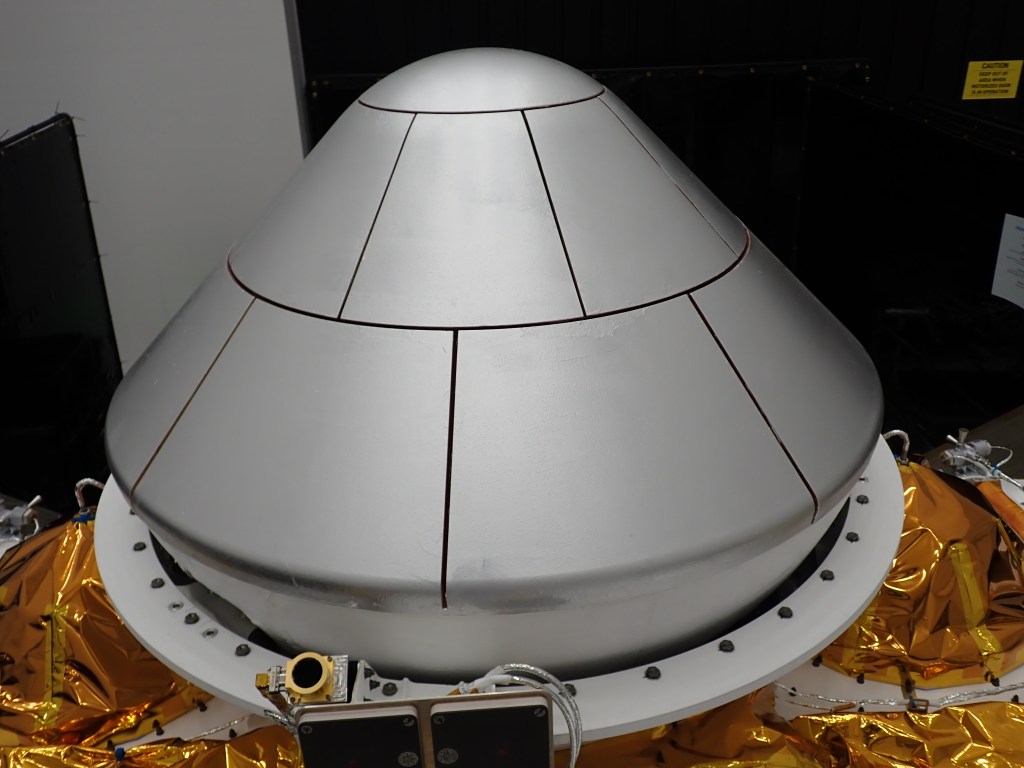

When NASA astronauts return from the Moon during the Artemis missions, their spacecraft – the Orion crew capsule – will blast through Earth’s atmosphere so fast it will trigger two forms of heating. For the first time, Ames tested Orion’s heat shield material with both kinds of heating – the most realistic tests to date. Speaking of heat shields, Ames also tested an “umbrella-like” deployable heat shield design called ADEPT that will make it safer for larger vehicles to pass through the atmospheres of distant destinations, like Mars and beyond.

3) Robotics. NASA astronauts aboard the International Space Station worked with free-flying Astrobee robot Honey to test a technology inspired by gecko feet. The “gecko gripper” allows robots in space to grab onto surfaces – without applying force to stick – and then detach on demand. Engineers also tested Astrobee Bumble’s ability to investigate a simulated anomaly, conduct a survey, and untangle itself using ISAAC, the Integrated System for Autonomous and Adaptive Caretaking software. NASA astronauts unpacked, conducted health checks, and got Astrobee Queen up-and-floating for the first time too. Enhanced robotic assistants and autonomous operations mean crew can spend less time on routine chores and focus more on tasks only humans can do. U.S. Vice President Kamala Harris met robot Honey during a special phone call to NASA astronauts Shannon Walker and Kate Rubins aboard the space station to find out what it’s like to do science in space.

4) Aeronautics: Onwards and upwards! NASA tested, rolled out, and wrapped up a spectrum of new technologies to improve aircraft safety and efficiency while expanding the ability to coordinate air traffic. NASA’s Airspace Technology Demonstration 2, or ATD-2, project developed technologies that predict airport traffic conditions to determine the best time for departing flights to push back from the gate. By September 2021, software developed by ATD-2 had reduced greenhouse gas emissions by saving more than one million gallons of jet fuel. It also spared travelers 933 hours in flight delays, among other concrete benefits.

NASA also officially wrapped up the unmanned aircraft systems traffic management, or UTM, project. UTM invented a totally new way to handle the airspace: an automated, decentralized style of air traffic management where multiple parties, from government to commercial industry, work together to provide services. Since then, several efforts have emerged to apply UTM’s concepts and capabilities to even more realms such as managing traffic for future flying taxis.

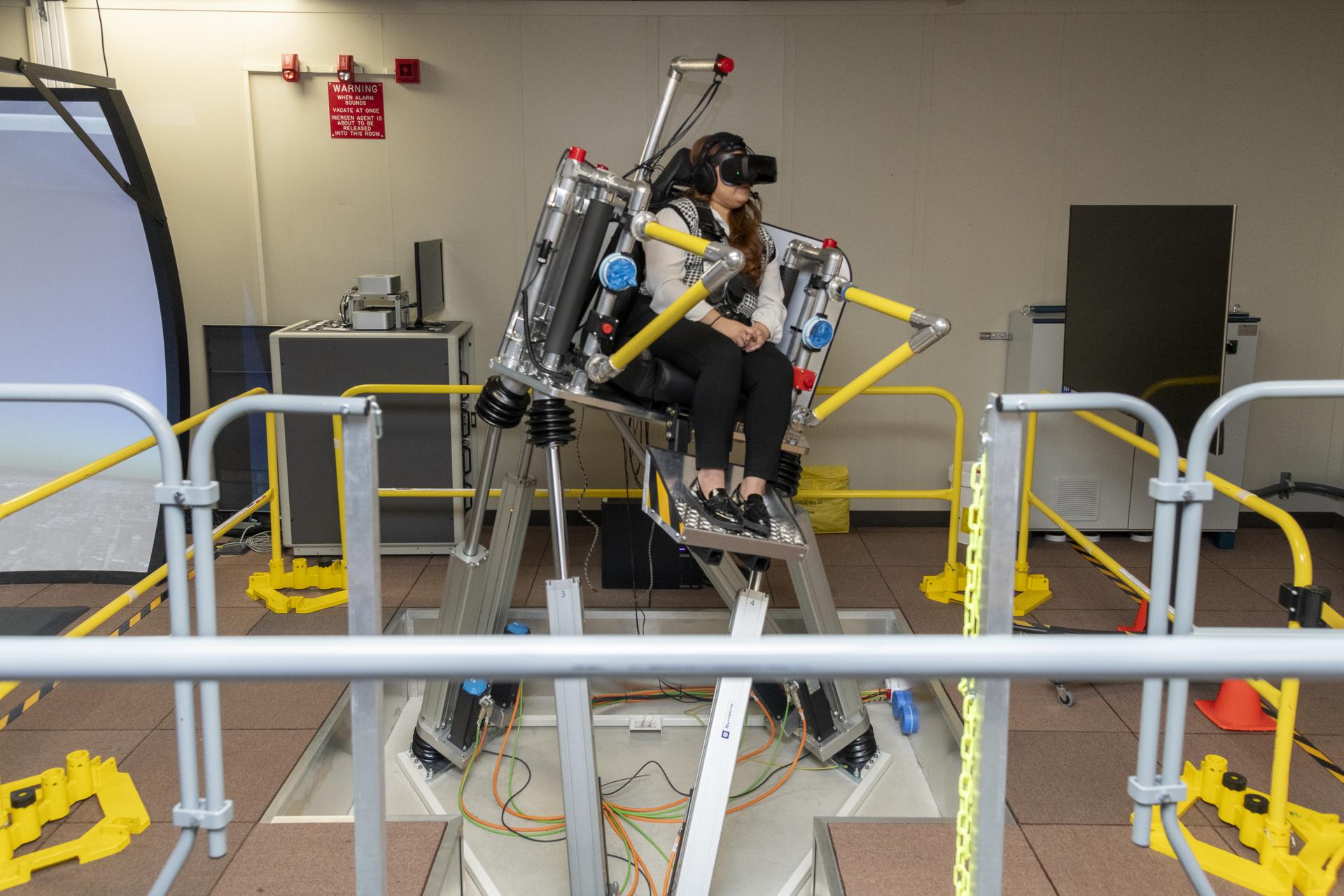

The Air Traffic Management eXploration, or ATM-X, project supported and collected data during NASA’s Advanced Air Mobility National Campaign flight tests and Integrated Dry Run test, including tests of a prototype all-electric vertical takeoff and landing air taxi vehicle. The goal is to understand how new aircraft serving as air taxis will need to operate. To ensure air taxi passengers enjoy a safe and smooth ride, NASA is using high-performance computer simulations to study a system that could suppress wobbles caused by sudden gusts of wind.

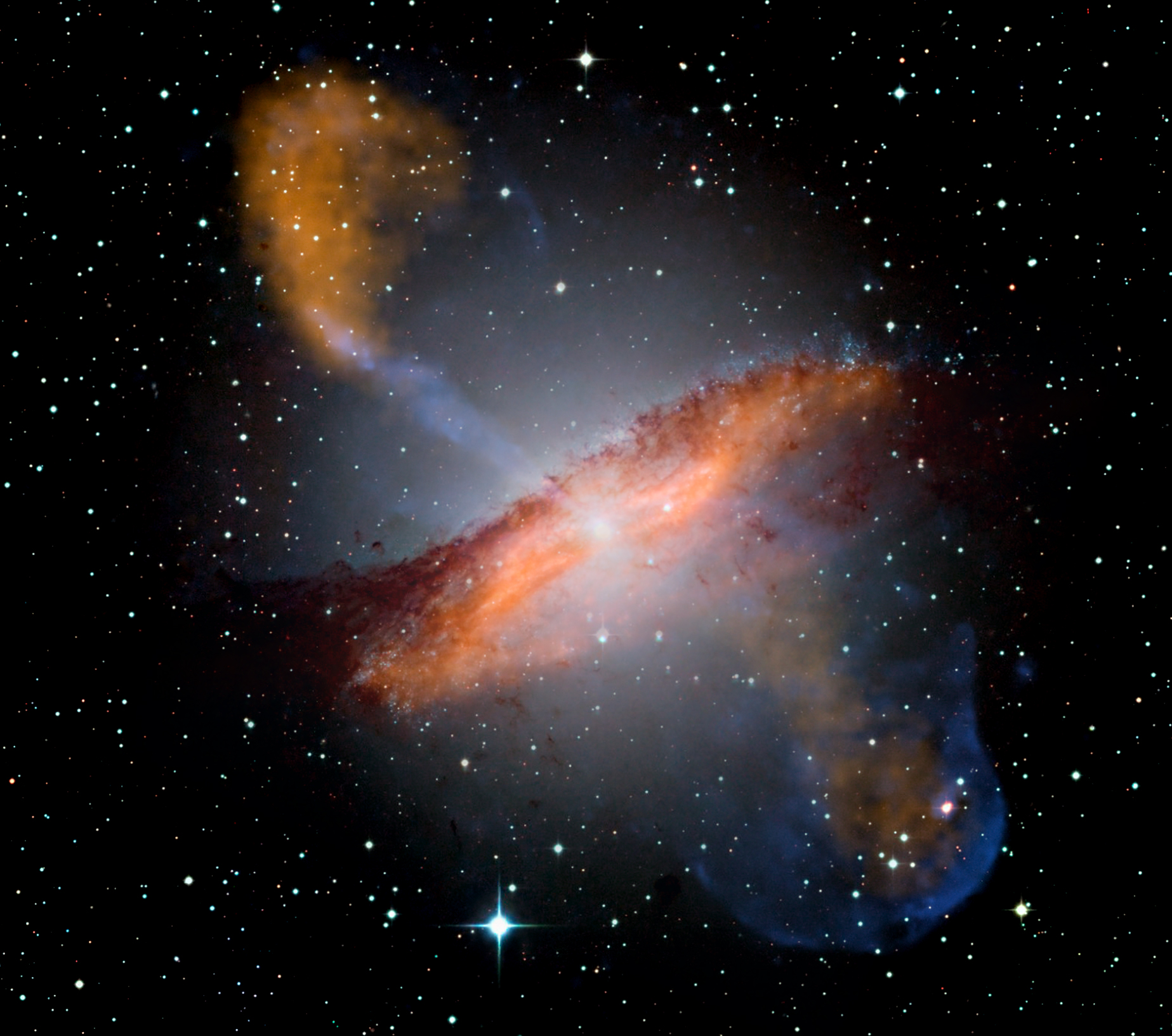

5) Discoveries of Cosmic Proportions. Over the last year, Ames scientists have made several discoveries about the cosmos! Using the data gathered during NASA’s Kepler and follow-on K2 missions, a deep neural network called ExoMiner helped add over 300 newly validated exoplanets – or planets beyond our solar system – to the current list of over 4,000 worlds. ExoMiner uses NASA’s Pleiades supercomputer, which also helps scientists understand the universe by simulating cosmic phenomena. A recent study simulated a galaxy designed to be similar to our own Milky Way colliding with a smaller galaxy, revealing how such collisions create a “fossil record” of information that can help researchers peer into cosmic history.

Galaxies across the universe continue to be observed by the Stratospheric Observatory for Infrared Astronomy, or SOFIA, NASA’s telescope on a plane. Recently, SOFIA studied the formation of stars in our own galaxy’s center, made key observations of never-before-seen components of a spiral galaxy, provided new insights into how the early universe may have been shaped, and enabled the first clear look into a star-forming region in the Milky Way. This research is helping us understand more about some of the most complex cosmic structures we know of. The team also had the opportunity to fly from the Southern Hemisphere by temporarily changing their base of operations to French Polynesia, allowing SOFIA to make observations of atomic oxygen in the Earth’s atmosphere.

6) NASA’s Ingenuity Mars Helicopter successfully demonstrated the first powered controlled flight on another planet. Ingenuity was designed and developed by NASA’s Jet Propulsion Laboratory in Pasadena, California. The expertise of aeronautical engineers at Ames led JPL to partner with the center and NASA’s Revolutionary Vertical Lift Technology project from the moment work on Ingenuity started. Ames engineers provided performance predictions, computational fluid dynamics analysis, and facility installation studies, as well as system identification, development, and validation. Ames also contributed to the system integration testing of a full-scale Mars helicopter prototype, two engineering development models, and the flight model. Ames continues to partner with JPL to consider future Mars rotorcraft designs.

7) Our STEReO project – Scalable Traffic Management for Emergency Response Operations – was busy this fall learning from wildland firefighters how small, autonomous aircraft could help battle blazes. Working in partnership with firefighting experts, earlier this year the STEReO team tested and demonstrated elements of the toolkit it’s designing for pilots flying unmanned aircraft systems – also called UAS or drones – as part of wildfire response efforts. STEReO aims to modernize the response to many kinds of disasters by allowing smart UAS to play a bigger role.

8) S-MODE. After a successful test run, in October NASA deployed aircraft, a research vessel, and several kinds of autonomous ocean robots to study small ocean whirlpools, eddies, and currents. The Sub-Mesoscale Ocean Dynamics Experiment, or S-MODE, team aims to understand the role these ocean processes play in the movement of heat, nutrients, oxygen, and carbon, which travel from the ocean surface to the deeper ocean layers below. This will help scientists better understand how Earth’s oceans slow the impact of global warming and affect Earth’s climate system.

9) Tagging in NASA to Help Track Otters. Sea otters are important mammals in marine ecosystems and are essential to maintaining balance in kelp forests. Understanding how otters and other animals interact with their changing environments is critical for deciphering the impacts of climate change on wildlife and ecosystems.

That’s why the Space Shop, a maker space at Ames, is developing a modern tracking device designed to humanely fit onto the hind flippers of sea otters. This new and improved tracking device is still being tested, but once it’s ready to be deployed, scientists will be able to track sea otter populations with far more precision and frequency than was previously possible. In the future, the same technology could be used to track other wild animals.

10) “Sniffing” Out COVID-19. As COVID-19 continues to be a part of our lives, the more tools that can be developed to manage the virus’ spread in our communities the better. E-Nose is a smartphone-based device derived from technology used to help monitor air quality inside spacecraft, but NASA is advancing it to detect COVID-19 by “sniffing” a person’s breath. E-Nose could help mitigate community spread of the virus in a manner similar to how temperature checks are used to screen individuals before entering shared indoor spaces, such as a local grocery store or restaurant. The device is almost ready for field testing, after which the project will look into next steps for making the technology more widely available.

11) BioSentinel: A Step Closer to Artemis Deep Space Flight. This year the BioSentinel team completed assembly and a battery of tests on their spacecraft before packing it up and shipping it off to NASA’s Kennedy Space Center in Florida. There, the spacecraft was placed in its CubeSat dispenser. This assembly was later installed in the Space Launch System rocket’s Orion stage adaptor where BioSentinel now occupies its CubeSat seat for a ride to deep space aboard Artemis I.

BioSentinel will fly past the Moon and into an orbit around the Sun. It will perform the first long-duration biology experiment in deep space. Its six-month science investigation will study the effects of deep space radiation on a living organism, yeast. Because human cells and yeast cells have many similar biological mechanisms, BioSentinel’s experiments can help us better understand the radiation risks for long-duration deep space human exploration.

12) Good Things Come in Small Packages: Answers to NASA’s Science and Technology Questions. NASA researchers are sending a myriad of small science and technology payloads to orbit or the edge of space to address big science questions and help drive future exploration missions.

NASA is keen to ensure spacecraft don’t accidentally contaminate Mars if we’re ever to discover whether Martian life exists. The Microbes in Atmosphere for Radiation, Survival and Biological Outcomes Experiment, or MARSBOx, tested if any common Earth microorganisms could actually persist on a spacecraft to Mars. MARSBOx flew millions of tiny microbes to the Mars-like environment found 20 miles above the surface of the Earth. Researchers found that two of the four types of microorganisms flown in this experiment could temporarily withstand these harsh conditions.

How do clouds contribute to climate patterns on Earth, as well as other planets like Saturn, Venus, and Mars? This question motivated NASA’s development of a new nephelometer called NephEx – short for Nephelometer Experiment – a sensor that measures details about the interior composition of clouds. The compact device is being tested here on Earth and could one day be deployed to the far reaches of space to advance our understanding of climate patterns across our solar system.

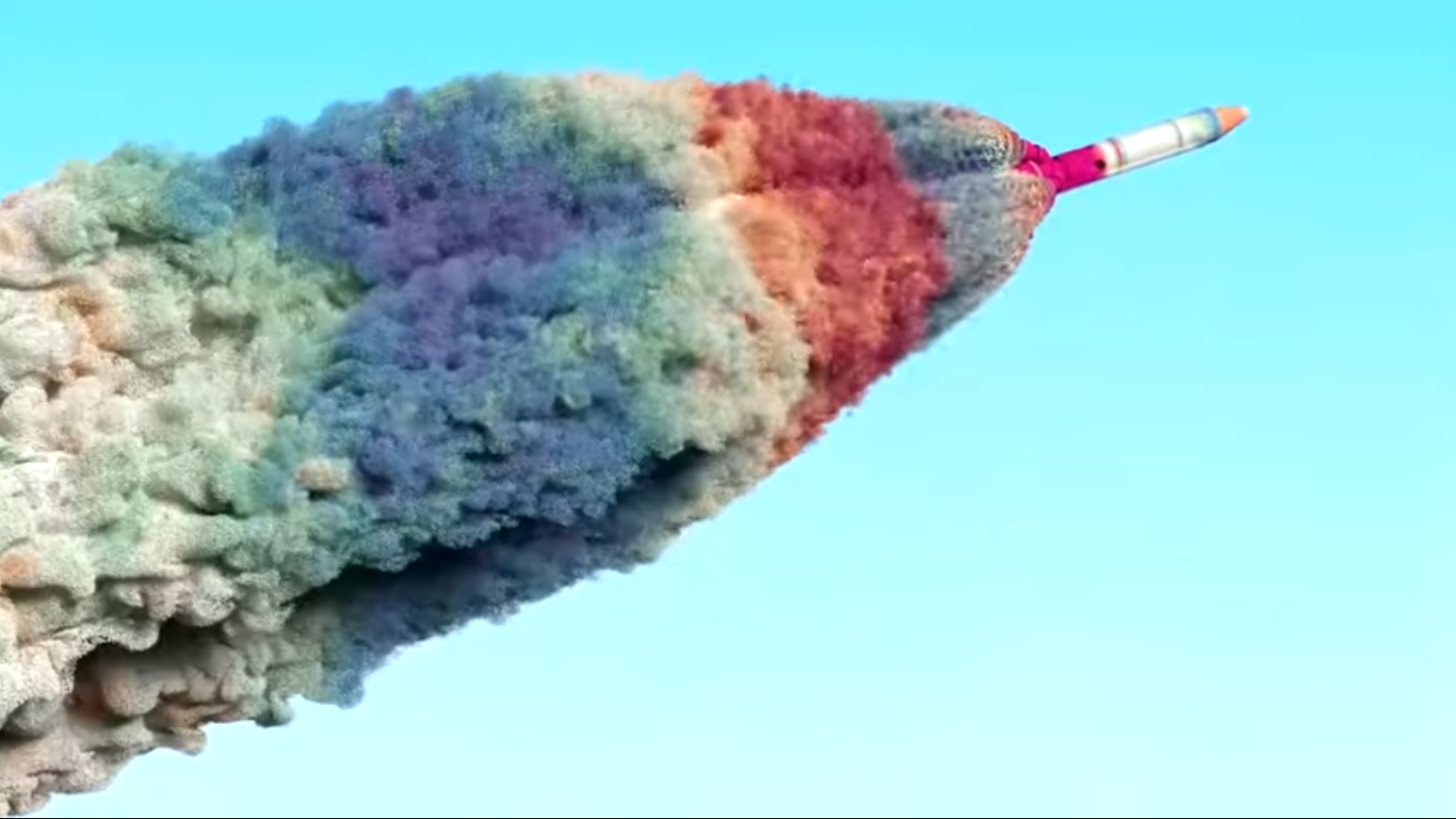

New small spacecraft technologies could transform future deep space mission capabilities and bring down mission costs. NASA’s Pathfinder Technology Demonstrator, or PTD, series of missions demonstrates novel CubeSat technologies in low-Earth orbit. The first mission of the series, PTD-1, launched a 6-unit CubeSat – roughly the size of a shoebox – to demonstrate a new type of propulsion system that uses water as fuel. The system produces gas propellants – a mix of hydrogen and oxygen – from water and burns them in a tiny rocket engine for thrust.
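As a rough illustration of the water-to-propellant idea described above, the sketch below works out how much hydrogen and oxygen gas a given mass of water can yield. It assumes the water is fully split by the standard reaction 2 H2O → 2 H2 + O2; the text does not say how the gases are produced, and the 1 kg figure is a hypothetical input, not a PTD-1 specification.

```python
from typing import Tuple

# Molar masses in g/mol (standard atomic weights, rounded).
M_H = 1.008
M_O = 15.999
M_H2O = 2 * M_H + M_O  # 18.015 g/mol


def split_water(mass_water_g: float) -> Tuple[float, float]:
    """Return (hydrogen_g, oxygen_g) from fully splitting water.

    Assumes the reaction 2 H2O -> 2 H2 + O2 runs to completion,
    which is an idealization made for this sketch.
    """
    moles_water = mass_water_g / M_H2O
    hydrogen_g = moles_water * 2 * M_H  # each H2O carries two H atoms
    oxygen_g = moles_water * M_O        # each H2O carries one O atom
    return hydrogen_g, oxygen_g


# Hypothetical example: 1 kg of water.
h2, o2 = split_water(1000.0)
print(f"{h2:.1f} g H2, {o2:.1f} g O2")  # 111.9 g H2, 888.1 g O2
```

By mass, nearly nine-tenths of the resulting gas is oxygen, even though there are twice as many hydrogen molecules.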

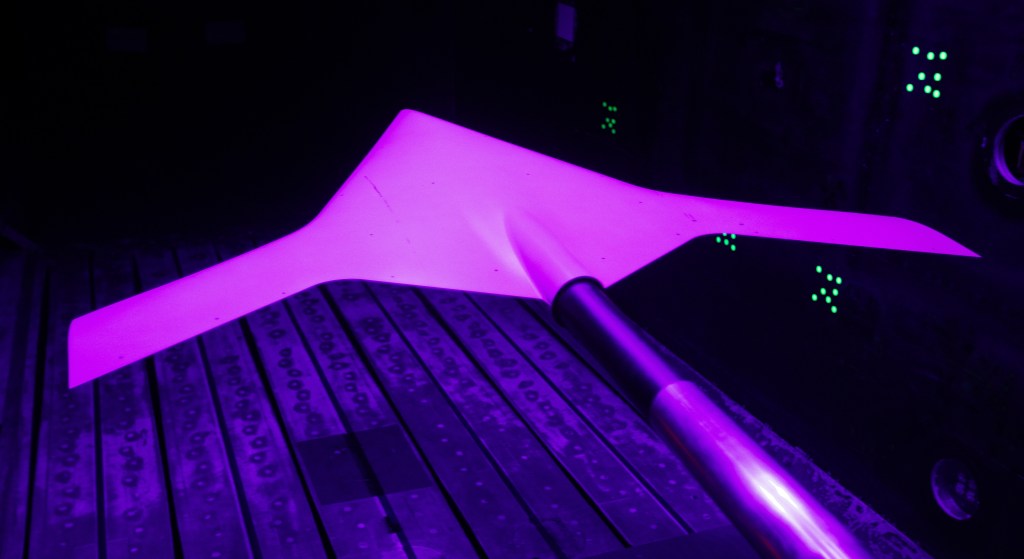

TechEdSat-7 is one of a series of nanosatellite flight missions that helps to teach a new generation of researchers and engineers how to execute flight experiments. These missions test cutting-edge technologies relevant to the overall goal of using cost-effective CubeSats – bringing small payloads from orbit back to Earth or to the surface of other worlds, such as Mars. Technologies packed into TechEdSat-7’s flight include a novel exo-brake, a deployable device that applies drag to the spacecraft to cause deorbit.

NASA’s Payload Accelerator for CubeSat Endeavors, or PACE, initiative is finding ways to speed up the process of getting small spacecraft technologies ready for prime time. NASA needs to ensure new technologies are sufficiently vetted to stand up to the extreme demands of the space environment before implementing them for an exploration mission. Flight tests are key to the vetting process, which culminates with a technology demonstration in space. V-R3x, the first technology with flight tests facilitated by PACE, used a swarm of three CubeSats – each about the size of a coffee mug – to demonstrate new technologies and techniques for radio networking and navigation in low-Earth orbit. In a separate flight test, V-R3x launched a single CubeSat on a high-altitude balloon and deployed four units on the ground to communicate with the satellite at the upper edges of the atmosphere – over 100,000 feet away. This flight enabled the researchers to evaluate V-R3x’s advanced swarm communications by forming a mesh network between multiple spacecraft and ground stations.

13) Biosciences. To keep astronauts healthy during multi-year missions on the Moon or Mars, NASA is testing a concept that uses microorganisms to produce vital nutrients in space that astronauts can drink, as well as an “enhanced” spaceflight diet. Ames also supports experiments aboard the space station to study which genes help tardigrades – also known as water bears – tolerate extreme environments, learn more precisely what causes space-related loss of muscle strength, and monitor for potentially disease-causing bacteria and fungi.

And there’s more in store with the much-anticipated launch of NASA’s James Webb Space Telescope, which will be broadcast live on NASA TV, the NASA app, and the agency’s website. Members of the public also can register to attend the launch virtually. NASA’s virtual guest program for the mission includes curated launch resources, notifications about related opportunities or changes, and a stamp for the NASA virtual guest passport following a successful launch.

Ames Contributions to NASA’s James Webb Space Telescope

by Rachel Hoover

The James Webb Space Telescope is the most complex space science observatory ever built. Its revolutionary science is made possible by key contributions from NASA’s expertise in Silicon Valley, and will allow scientists to explore parts of the universe never seen before.

Webb will peer more than 13.5 billion years back into cosmic history to a time when the first luminous objects were evolving. It’s the first observatory capable of exploring the very earliest galaxies, and could transform our understanding of the universe. Webb will also study the atmospheres of planets orbiting other stars, and observe moons, planets, comets, and other objects within our own solar system. This data will reveal the molecules and elements that exist on distant planets, and could unlock clues to the origins of our planet and life as we know it.

NASA’s Ames Research Center in California’s Silicon Valley made significant contributions to early mission concepts, technology development, and modeling. Ames researchers also will lead and contribute to the mission’s science investigations.

Early Concepts and Detector Technology Development

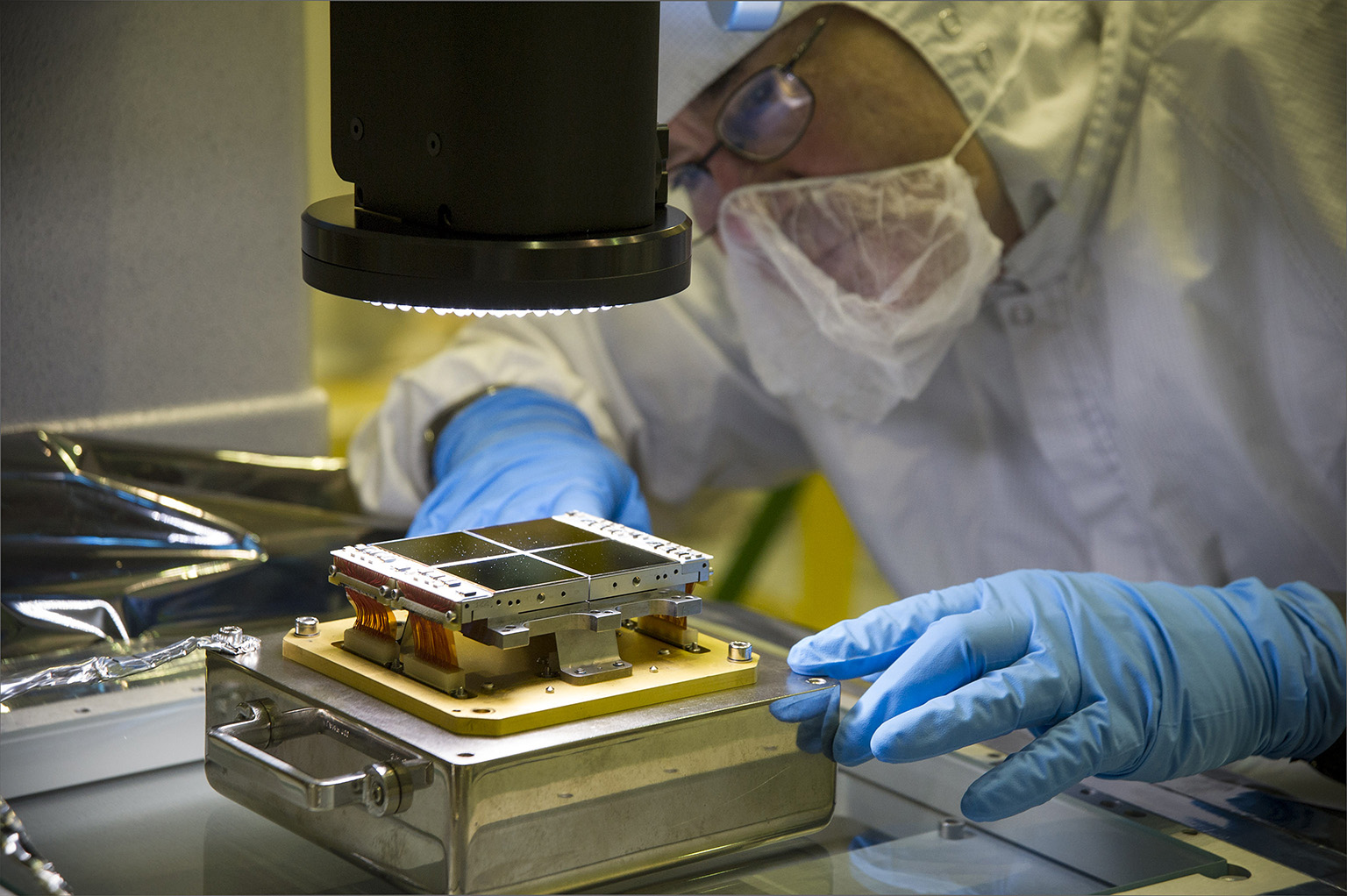

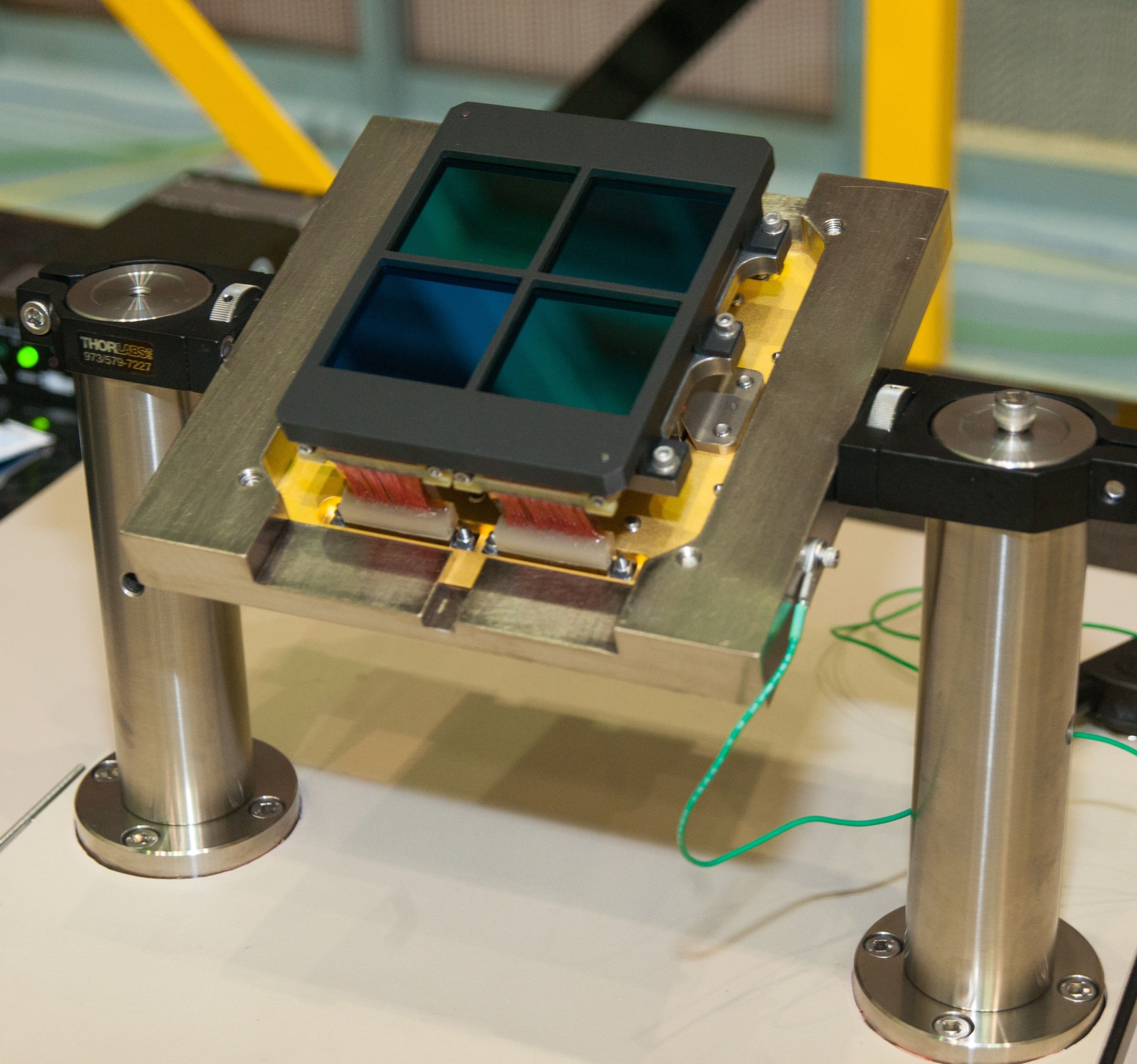

When designing Webb, engineers had to imagine a telescope unlike any built before. In the late 1990s, NASA received a formal recommendation that a telescope to follow the Hubble Space Telescope should operate at infrared wavelengths and be equipped with a mirror larger than four meters. After that, a team of engineers and astronomers at Ames worked together to guide, define, develop, and test Webb’s novel detector technology. All of Webb’s onboard science instruments benefit from Ames’ contributions.

Webb’s mirrors collect light and direct it to the science instruments, which filter that light before focusing it on the detectors. Each of Webb’s four instruments has its own set of detectors, which absorb photons and convert them to electronic voltages that can be measured.

These new detectors need to be extraordinarily sensitive in order to record the feeble light from far-away galaxies, nebulae, stars, and planets. Webb needs large-area arrays of detectors to efficiently survey the sky. Ames has extended the state of the art for infrared detectors by guiding the development of detector arrays that are lower in noise, larger in format, and longer lasting than their predecessors.

These detectors allow Webb to “see” light outside the visible range and show us otherwise hidden regions of space at the near-infrared and mid-infrared wavelengths. With its longer wavelengths, infrared radiation can penetrate dense molecular clouds, whose dust blocks most of the light detectable by the Hubble instruments.

Infrared Detectors

Webb uses two types of detectors in order to sense shorter or longer wavelength light.

Three of Webb’s four science instruments capture near-infrared wavelengths. The Near Infrared Spectrograph, or NIRSpec; Near-Infrared Camera, or NIRCam; and the Fine Guidance System/Near-Infrared Imager and Slitless Spectrograph, or FGS/NIRISS, all use mercury-cadmium-telluride detectors. The Mid-Infrared Instrument, or MIRI, uses arsenic-doped silicon detectors, and is Webb’s only mid-infrared tool.

The Ames Detector Lab performed foundational work to characterize mid-infrared detector performance in a space radiation environment and developed large-area, low-background mid-infrared detectors. The fruits of this multi-decade effort are in MIRI, and they also benefited several Webb detector candidates.

Six Modes to Split Light

In addition to the detector work, Ames scientists contributed to the design, development, and testing of two of Webb’s scientific instruments, NIRCam and MIRI.

All four of Webb’s scientific instruments use spectroscopy to break down light into separate wavelengths – like raindrops create a rainbow – to determine the physical and chemical properties of various forms of cosmic matter. The spectrographs divide the light into its component wavelengths and send them to the detectors, which measure the intensity of each. The intensity of wavelengths, or their absence, can reveal the temperature, density, motion, distance, elements, and molecules of objects.
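One way a spectrum reveals motion is through the shift of a known spectral line away from its laboratory wavelength. The sketch below uses the standard redshift relation z = (observed − rest) / rest and the low-speed approximation v ≈ c·z. These formulas and the example wavelengths are illustrative assumptions, not a description of Webb’s data pipeline.

```python
C_KM_PER_S = 299_792.458  # speed of light in km/s (exact by definition)


def redshift(observed_nm: float, rest_nm: float) -> float:
    """Fractional wavelength shift of a spectral line."""
    return (observed_nm - rest_nm) / rest_nm


def radial_velocity_km_s(z: float) -> float:
    """Approximate line-of-sight speed; only valid when z is much less than 1."""
    return C_KM_PER_S * z


# Hypothetical measurement: the hydrogen-alpha line (rest wavelength
# 656.28 nm) is observed at 656.50 nm.
z = redshift(656.50, 656.28)
v = radial_velocity_km_s(z)
print(f"z = {z:.6f}, receding at about {v:.0f} km/s")
```

A positive shift means the source is moving away from the observer; a negative one means it is approaching. For very distant galaxies the shift is so large that visible light arrives as infrared, and the simple v ≈ c·z approximation no longer applies.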

Webb is equipped with several modes of spectroscopy to address specific scientific questions. A scientist at Ames designed silicon dispersers – which spectrographs use to spread light out – and led the development of the spectroscopic modes of the NIRCam instrument, which are:

- Wide-Field Slitless Spectroscopy: to capture the overall spectrum of a wide field of view – a field of stars, part of a nearby galaxy, or many galaxies – at once.

- Time-Series Spectroscopy: to capture the spectrum of an object or region of space at regular intervals in order to observe how the spectrum changes over time. Time-series spectroscopy is used to study planets as they transit their stars.
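To give a sense of scale for transit observations, the sketch below estimates how much a star dims when a planet crosses in front of it. It uses the common approximation that the fractional dimming equals the square of the planet-to-star radius ratio. The radii are nominal solar system values chosen for illustration, not Webb targets.

```python
R_SUN_KM = 695_700.0      # nominal solar radius
R_JUPITER_KM = 71_492.0   # Jupiter equatorial radius
R_EARTH_KM = 6_378.1      # Earth equatorial radius


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fraction of starlight blocked when the planet is in front of the star.

    Treats both bodies as uniform disks and ignores effects such as
    limb darkening, so this is a first-order estimate only.
    """
    return (planet_radius_km / star_radius_km) ** 2


jupiter_like = transit_depth(R_JUPITER_KM, R_SUN_KM)
earth_like = transit_depth(R_EARTH_KM, R_SUN_KM)
print(f"Jupiter-size planet, Sun-size star: {jupiter_like * 100:.2f}% dimming")
print(f"Earth-size planet, Sun-size star: {earth_like * 1e6:.0f} parts per million")
```

In a time series of spectra, this depth can be measured separately at each wavelength; small differences between wavelengths are what carry information about a planet’s atmosphere.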

Ames’ Contributions to Webb’s Science Program

Astrophysicists and scientists at Ames will use Webb to continue studying brown dwarfs, young stars, evolved stars, nearby galaxies, and worlds beyond our solar system, called exoplanets. They’ll also look for signs of polycyclic aromatic hydrocarbons, or PAHs, a class of large, chicken wire-shaped molecules that scientists believe could have played a role in the origins of life on Earth and elsewhere in the cosmos. Collectively, Ames researchers will lead over 400 hours of observations in the first year of Webb operations using Guaranteed Time, Early Release Science, and General Observer observations.

Learn more:

- NASA release: NASA’s James Webb Space Telescope General Observer Scientific Programs Selected (March 30, 2021)

Bringing Webb into Your Home

Spacecraft like the James Webb Space Telescope travel to far-off destinations, but the NASA mobile app uses the latest in augmented reality, or AR, to bring NASA’s space exploring technologies right into the homes of users. Currently the NASA app includes the Webb telescope and 34 other AR models, plus detailed information about each mission. Download the NASA app today and experience exploration here and beyond.

Collaborators

The James Webb Space Telescope will be the world’s premier space science observatory when it launches in 2021. Webb will solve mysteries in our solar system, look beyond to distant worlds around other stars, and probe the mysterious structures and origins of our universe and our place in it. Webb is an international program led by NASA with its partners, ESA (European Space Agency) and the Canadian Space Agency.

NASA Selects New Members for Artemis Rover Science Team

by Rachel Hoover

When NASA’s Volatiles Investigating Polar Exploration Rover, or VIPER, explores and samples the soils at the Moon’s South Pole, scientists anticipate it will reveal answers to some of the Moon’s enduring mysteries. Where is the water and how much is there? Where did the Moon’s water come from? What other resources are there?

What other questions could VIPER answer? NASA sought ideas and recently chose eight new science team members and their proposals that expand and complement VIPER’s already existing science team and planned investigations.

The selected co-investigators, their institutions, and their project proposals are:

- Kathleen Mandt, Johns Hopkins University in Baltimore, tracing lunar volatile sources based on composition

- Parvathy Prem, Johns Hopkins, modeling active volatile sources and transport during the VIPER mission

- Myriam Lemelin, University of Sherbrooke in Canada, unmixing the spectral variations of the south polar region to retrieve its composition, volatile content, sources and modification processes

- Masatoshi Hirabayashi, Auburn University in Alabama, statistical and thermal approaches to constrain the impact-induced regolith-volatile distribution mechanisms

- Casey Honniball, NASA’s Goddard Space Flight Center in Greenbelt, Maryland, comparing the exchange of water and methane with the lunar surface and exosphere

- Laszlo Kestay, U.S. Geological Survey in Flagstaff, Arizona, ground truth for lunar resource assessments

- Barbara Cohen, NASA Goddard, VIPER measurements of nitrogen and noble gases in polar regolith

- Kevin Lewis, Johns Hopkins, a geophysical traverse at the lunar south pole with the VIPER accelerometers

“These additions to the science team will provide new and fresh perspectives, enhance the expertise in critical areas, and really allow the team to get the maximum science value out of the VIPER mission,” said Sarah Noble, VIPER program scientist in the Planetary Science Division at NASA Headquarters in Washington.

The VIPER mission is managed out of NASA’s Ames Research Center in California’s Silicon Valley, and is scheduled to be delivered to the Moon in late 2023 by Astrobotic’s Griffin lander as part of the Commercial Lunar Payload Services initiative. Construction of the rover will begin in late 2022 at NASA’s Johnson Space Center in Houston, while the rover flight software and navigation system design will take place at Ames. Astrobotic will receive the complete rover with its scientific instruments in mid-2023 in preparation for launch later that year.

CLICK Team Tests Optical Communications Technology Ahead of Small Spacecraft Swarm Demonstration

by Loura Hall

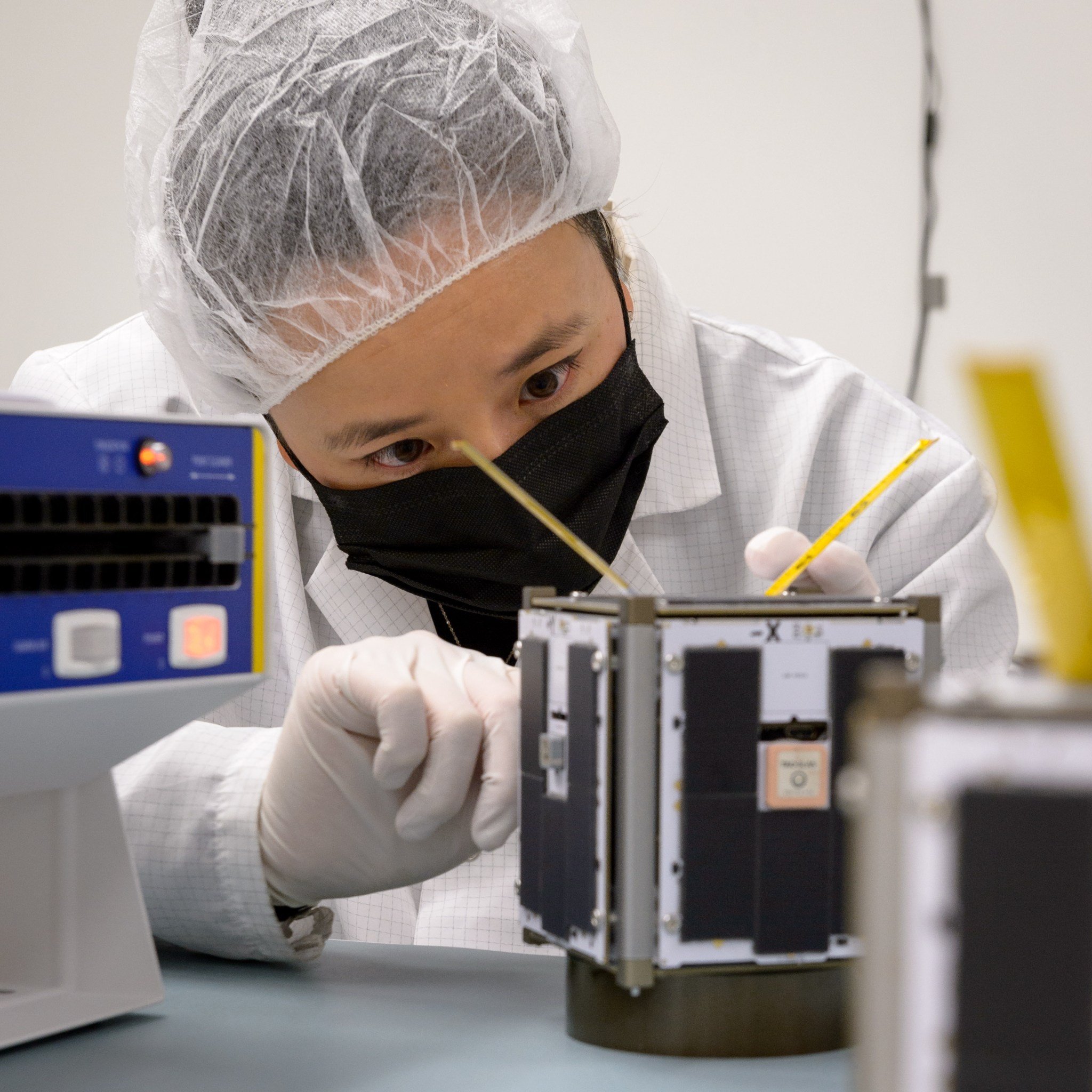

Teams from Massachusetts Institute of Technology (MIT) in Cambridge and University of Florida (UF) in Gainesville are currently testing components of NASA’s CubeSat Laser Infrared CrosslinK (CLICK) B/C demonstration, aiming to validate that the technology can be packaged into a CubeSat and work as expected. CLICK B/C is the second of two sequential missions designed to advance optical communications capabilities for autonomous fleets of CubeSats.

CLICK B/C is anticipated to deploy from the International Space Station in 2023. Its ground testing occurs on an engineering development unit (EDU) at MIT. The EDU is a non-flight test unit that allows the team to assess the technology’s integration and correct any issues with the system’s hardware and software prior to building the actual system that will fly into space. Lessons learned from this testing will inform the flight build of CLICK B/C’s two small spacecraft. Additionally, vibration and thermal vacuum testing of the EDU simulates the launch and space environments, allowing researchers to refine the design’s manufacturability, the system’s optical alignment, and timing between the various system components.

CLICK B/C uses invisible laser beams to demonstrate spacecraft-to-spacecraft optical communications as well as measure the relative positions of the spacecraft, also called precision ranging. Researchers are currently assessing the laser’s performance as well as the electronics’ precise timing measurements, which translate directly to the technology’s ranging capabilities. The first CLICK technology demonstration mission, CLICK A, is planned to launch in May 2022 as a risk-reduction demonstration to help improve CLICK B/C’s chances of success.

Optical technology is promising for CubeSats because it does not use as much power as conventional radio frequency communications at high data rates. Specifically, the CLICK B/C technology is a full-duplex system, meaning it can send and receive data at the same time – an important development from conventional one-way laser communications. Optical communications advancements like these can make constellations of CubeSats less costly and more efficient. The CLICK flight demonstration will build on the technology base established through other NASA missions like the Laser Communications Relay Demonstration (LCRD), which launched on Dec. 7.

In addition to MIT and UF, NASA’s Small Spacecraft Technology program is partnering with small satellite manufacturer Blue Canyon Technologies (BCT) of Boulder. MIT is building and testing the EDU, while UF’s focus is on precision timing, which enables CLICK B/C’s centimeter-level ranging capability. BCT is providing the two spacecraft for the CLICK B/C mission.
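To see why precise timing matters for centimeter-level ranging, the sketch below converts between light travel time and distance. It assumes a simple one-way time-of-flight measurement, which may differ from the scheme CLICK B/C actually uses; the function names and the 500 km separation are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum (exact by definition)


def range_from_travel_time(travel_time_s: float) -> float:
    """Distance covered by a laser pulse in the given one-way travel time."""
    return C_M_PER_S * travel_time_s


def timing_precision_for(range_error_m: float) -> float:
    """Timing uncertainty (seconds) that corresponds to a given range error."""
    return range_error_m / C_M_PER_S


# Timing precision needed to resolve 1 centimeter of range.
needed_s = timing_precision_for(0.01)
print(f"1 cm of range corresponds to about {needed_s * 1e12:.1f} picoseconds")

# Hypothetical spacecraft separation of 500 km, expressed as travel time.
travel_s = 500_000.0 / C_M_PER_S
print(f"500 km corresponds to about {travel_s * 1e3:.2f} milliseconds of travel")
```

Under this assumption, resolving a centimeter means timing light to within tens of picoseconds, which shows how directly timing precision sets ranging capability.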

NASA’s Small Spacecraft Technology program within the agency’s Space Technology Mission Directorate funds CLICK’s demonstration missions. The program is based at NASA’s Ames Research Center in California’s Silicon Valley.

Building Future Air Taxis to See Through the Fog

by Abigail Tabor

While the sun beat down on the New Mexico desert, inside, a dense fog hung in the air. In a special facility outside Albuquerque, a team of NASA researchers was working with the kind of fog that’s so thick you can’t see three feet in front of you.

The ability to perceive things through the fog was the reason for their visit – but not our human ability. Rather, the engineers were testing sensors likely to be used on future air vehicles such as urban air taxis. There won’t be a human pilot on board these small aircraft, and they’ll need new ways of seeing and sensing the environment to help them take off, fly, and land safely.

Instruments such as optical and infrared cameras, radar, and lidar devices will become their “eyes.” Fog presents a major challenge to those sensors, and how well today’s technology can take it in stride is an important question. The answer will drive the next phase of their development and help these aircraft fly autonomously.

For their study, the team from NASA’s Ames Research Center in California’s Silicon Valley needed a special facility that could make fog on demand. The fog chamber at Sandia National Laboratories in Albuquerque, New Mexico, can repeatedly produce, with scientific precision, a fog with the specific density needed.

Fog: Common, Calm, and Extreme

Fog is an extreme environment for perception technology, but it’s weather that commuters and others will still want to fly in. It’s common enough and calm enough: you don’t have turbulence or lightning with fog. Still, our high-tech “eyes” can’t yet see through it the way they’ll need to.

The signals emitted by a lidar device scanning an area for a safe landing spot might reflect off the water droplets in fog, instead of the objects they’re meant to detect. Each of the sensors that might be used on unpiloted passenger aircraft in the future is impacted differently by fog, and designers need to know how.

“Each sensor has its strengths and weaknesses, and they’re affected by fog to different degrees,” said Nick Cramer, a research engineer at Ames. “We don’t know which will end up on these vehicles, so we tested a suite of sensors in the chamber to quantify their pros and cons.”

Vision Tests Through the Fog

Stretching for 180 feet, Sandia’s fog chamber resembles a long concrete corridor lined with plastic sheeting that traps the fog in the test area. A string of incandescent lights along the ceiling looks like something you’d see on a restaurant’s patio, but it provides important consistent lighting across the space.

From the control room, Cramer and his colleagues from the Revolutionary Aviation Mobility group, part of NASA’s Transformational Tools and Technologies project, watched as 64 sprinklers in the ceiling came alive.

“The sprayers release a mixture of water and salt to create fog made of particles of a larger size,” said Jeremy Wright, an optical engineer at Sandia. “It’s actually difficult to get just right. Depending on the conditions, the water droplets want to either condense more water out of the air and grow or give water back to the air as humidity.”

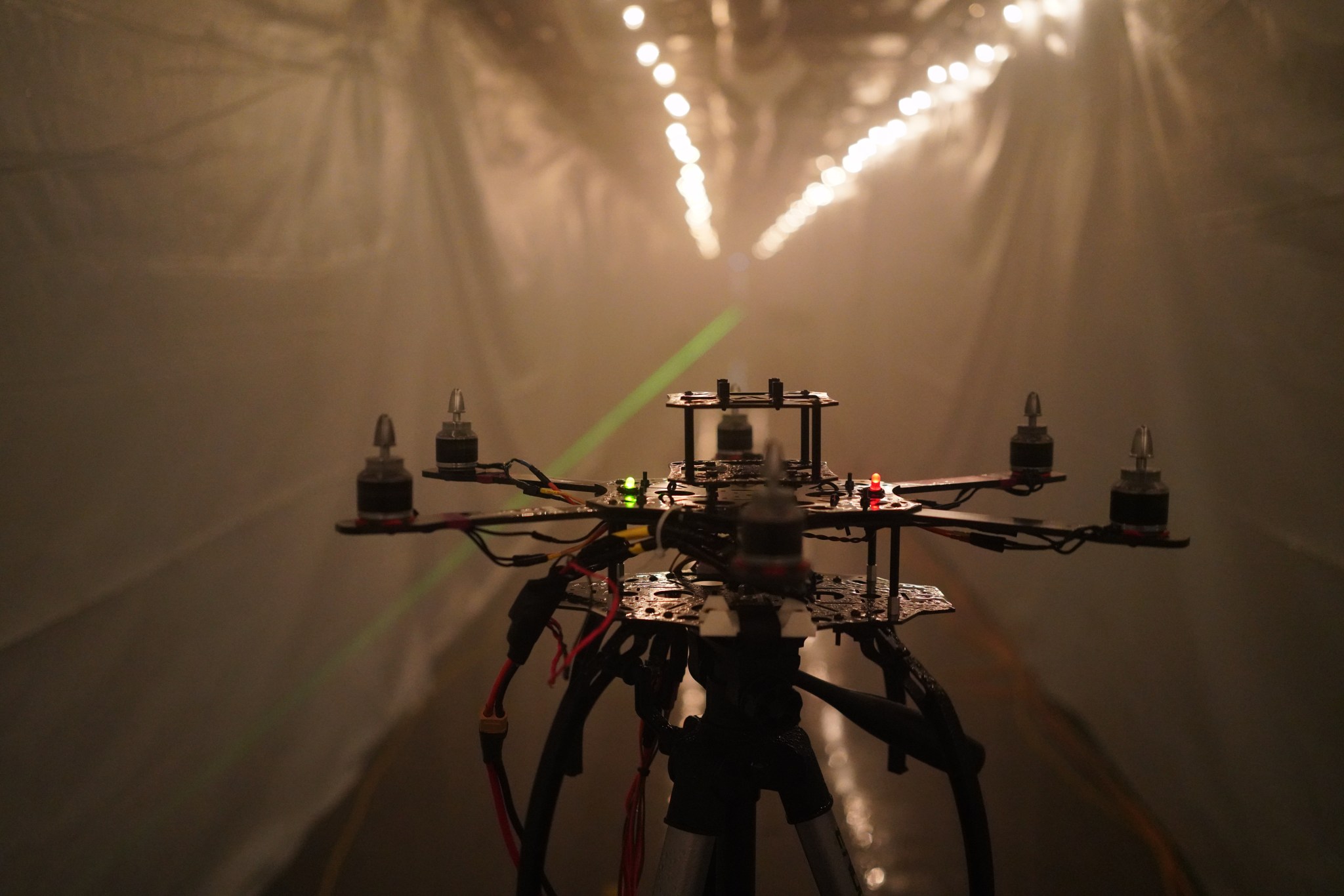

At one end of the chamber, the frame of an unmanned aerial vehicle, commonly known as a drone, stood bolted to a stand. The UAV was a test target for the collection of sensors installed at the opposite end. The researchers measured how well they could detect the UAV – or its warm motors, in the case of an infrared camera – from different distances through different levels of fog.

Future aircraft imagined under NASA’s vision for Advanced Air Mobility will need to detect and avoid other self-flying vehicles this way. And people, too: one team member walked slowly through the foggy chamber to test the sensors’ ability to pick out a pedestrian.

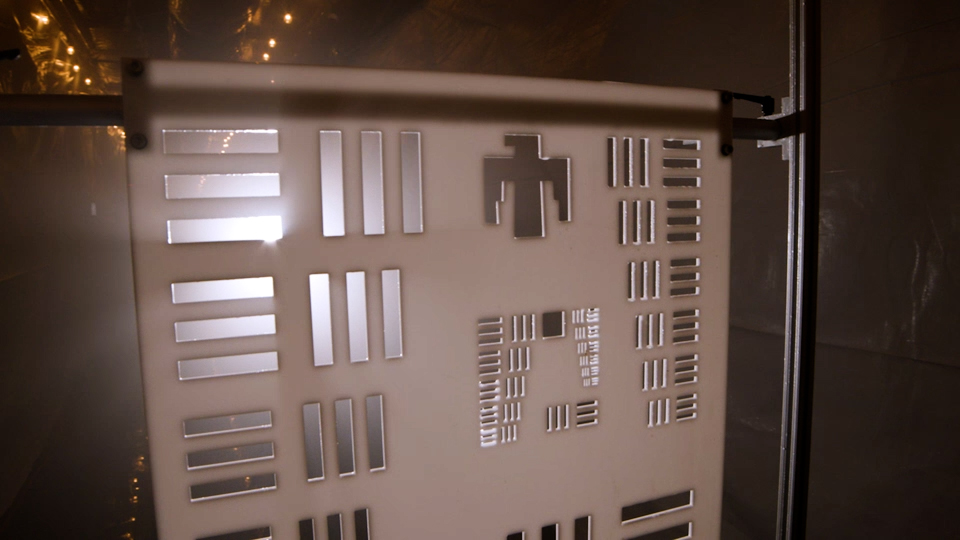

The devices under study also received an eye test. This time, the target was a white panel with multiple sets of horizontal and vertical rectangles cut out of it. The rectangles get smaller as you move from group to group. Essentially an eye chart for cameras, it helps researchers say how much detail a sensor can make out through the fog.

Data for the Next Generation of Sensors

These tests, which study how far and how well today’s sensors can see in foggy weather, will help determine how safe an aircraft relying on them would be. NASA will release the data for use by companies and researchers working to develop information processing techniques and improve sensors for Advanced Air Mobility vehicles. They need this kind of data to build accurate computer simulations, discover new challenges, and validate their technology for flight.

NASA, meanwhile, will continue its fundamental research to understand how best to use these aircraft sensors. With different strengths and weaknesses, optical, radar, lidar, and other systems are complementary. A fusion of different sensors combined in the smartest ways will help make the market opened by Advanced Air Mobility a safe, productive reality.

Microbial Tracking – The Astronaut’s Guide to Microbe Hitchhikers

by Abigail Tabor

On Earth, there is a close connection between microorganisms and humans. Although people often think of microbes as enemies to our health, humans actually depend on their presence to survive. These microorganisms are found on all surfaces of the body, and there are tens of trillions of them that do us no harm. In fact, many are known to protect us, with beneficial roles that include digestion, stimulation of the immune system and protection from diseases.

When humans travel to the International Space Station, they don’t just bring themselves and their cargo – they also bring microbes into the station. Since the construction of the space station began in 1998, there have been more than two hundred missions to the station. With all the movement of people and goods, the station has become an environment of its own with a unique microbial population.

To better understand this environment, the Space Biosciences division at NASA’s Ames Research Center in California’s Silicon Valley is managing the Microbial Tracking research series. Recent SpaceX commercial resupply missions to the station have delivered new sets of microbial sampling kits and brought samples back to Earth for analysis. The returned samples are analyzed to identify the types of microbes found, the interactions between them, the development of microbial communities, and any microbial effects on human health.

By taking multiple samples over time, researchers are able to see how the microbial population is changing. Some samples are collected on board the space station, and others are taken from the SpaceX spacecraft before launch for comparison. The findings from this research will help keep things running smoothly in space by determining the impact microbes have on the crew’s health. With this knowledge, NASA can work to develop ways of minimizing the hazards from microorganisms during long-duration crewed missions.

Microbial Tracking-1

Microbial Tracking-1 was a three-part series characterizing airborne and surface microorganisms from specific locations aboard the space station in 2015 and 2016. Samples were collected on three occasions over a 15-month period, which allowed researchers to study the population living on the station over time.

Learn more:

- NASA video: “Space Station Live: Millions of microbes under study”

- NASA article: “What’s Growing on the Space Station’s Walls? Observing How Microbes Adapt in a Spaceflight Environment”

For researchers:

- NASA Ames Space Biosciences division’s technical experiment page: Microbial Tracking-1 (SpaceX CRS-8)

- International Space Station technical mission page: Microbial Tracking Payload Series (Microbial Observatory-1)

Microbial Tracking-2

Microbial Tracking-2 followed the populations of microbes sharing the space station with the astronauts – including those on the crew themselves. This was the first time humans were studied in the Microbial Tracking investigation, and four crew members volunteered to participate. Samples were collected with a swab before, during and after spaceflight from various surfaces of the astronauts’ bodies, including the mouth, nose, forehead, armpit and navel. Environmental samples were also collected from surface and air locations around the station. For the first time, viruses were studied in the Microbial Tracking experiment series, in addition to bacteria and fungi.

Microbial Tracking-2 studied the microorganisms present on the space station between June 2017 and late 2018.

For researchers:

- NASA Ames Space Biosciences division’s technical experiment page: Microbial Tracking-2 (SpaceX CRS-11)

- International Space Station technical mission page: Microbial Tracking-2

Microbial Tracking-3

Microbial Tracking-3 continues the series’ work monitoring the space station for potentially disease-causing bacteria and fungi. This time, the investigation is looking specifically at genetic changes that occur during spaceflight and may increase the microbes’ ability to cause disease and to resist antibiotics.

The study will analyze data from three sources: existing data in the NASA GeneLab space biology database, microbes collected previously from the space station and held at NASA’s Jet Propulsion Laboratory, and new microbes to be collected from the space station at three time points over approximately one year. Beginning with the launch of sampling kits in June 2021, this work will improve understanding of how microbes change in this unique environment over time and help predict those that may pose a threat to crew health.