David L. Stringer, Director

NASA Armstrong Test Facility

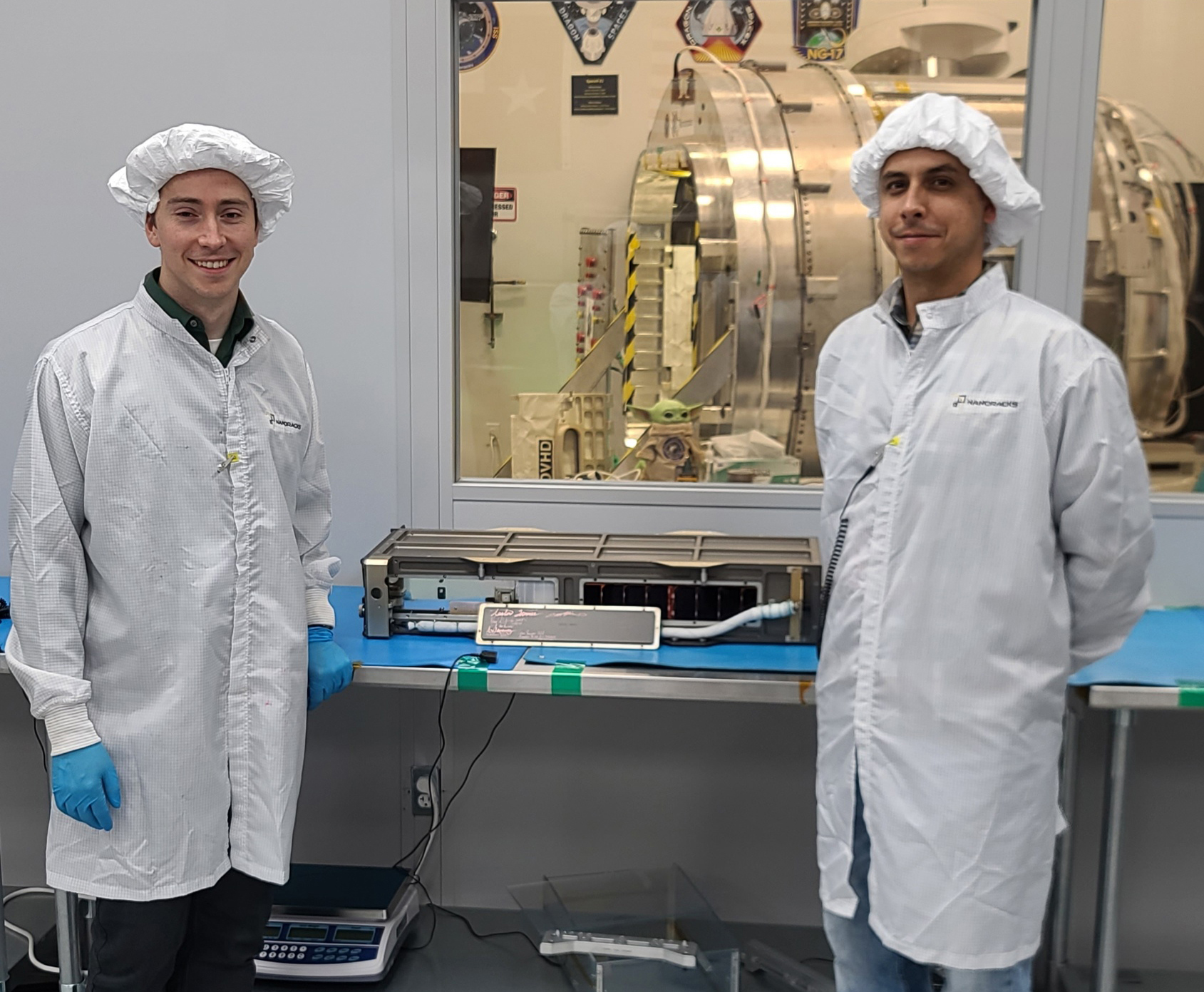

Testing may not often get the kind of attention it deserves, but it is absolutely essential to the success of space exploration and the missions, both uncrewed and crewed, that launch and operate for weeks, months, years, even decades.

Put succinctly, the purpose of testing is knowledge. Testing is essential to space-technology development, risk reduction and flight qualification, and incident response and mitigation. Also key are workforce skills development and advancement, specifically for technicians, engineers, scientists and managers.

The facilities where testing is conducted are likewise crucial. The studies undertaken there reduce risk by identifying problems before use. In other words, we test to avoid failures in ordinary use, as well as outright catastrophes.

According to an Aerospace Corporation study, not testing complex space systems substantially increases the probability of failure. Three notable examples:

- In 2009, lack of proper testing of the Taurus XL rocket payload fairing led to the loss of the first Orbiting Carbon Observatory (OCO-1).

- In 2011, the Glory satellite suffered the same fate, having lifted off on that same rocket. OCO-1 cost $209 million, Glory cost $388 million, and successor OCO-2 in 2014—this time successfully launched on a different vehicle, the Delta II—cost $468 million, for a grand total of more than $1 billion thus far.

- The Hubble Space Telescope program deferred critical tests of its mirror system to save money and time. It took a space shuttle mission, three years and more than $1 billion before the Hubble was made operational.

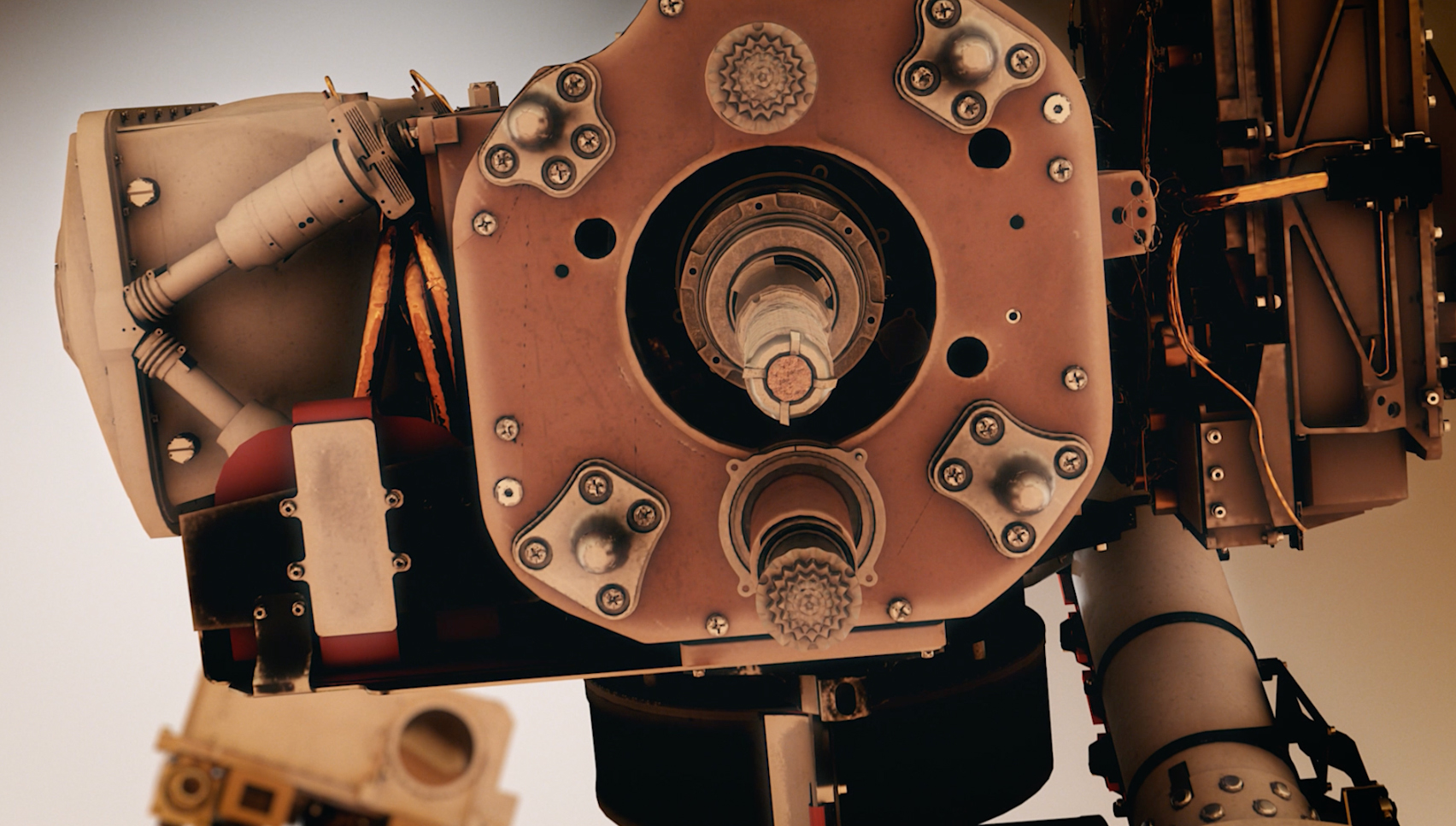

The bottom line is that the more costly, the more exceptional, or the more critical a spacecraft is, the more likely it is to benefit from component, system and full-scale testing in conditions mimicking those it will see in actual use.

Testing’s Value

So, how to quantify the value of testing? A primary metric should be the total cost of the test in dollars and time compared to the cost in dollars and time of both mission failure and the resultant consequences. That’s not to mention the threats posed to crewed missions, where human life is of course the central concern, and safety should always be of paramount importance.
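The dollar side of that comparison can be sketched as a simple ratio of failure cost to test cost. This is only an illustration: the function name `failure_to_test_ratio` and the $20 million test cost are hypothetical and are not figures from any NASA program. The failure costs are the OCO-1 and Glory figures cited earlier.

```python
def failure_to_test_ratio(test_cost_musd, failure_cost_musd):
    """Return how many times larger the failure cost is than the test cost."""
    if test_cost_musd <= 0:
        raise ValueError("test cost must be positive")
    return failure_cost_musd / test_cost_musd


# Mission costs cited earlier in this article, in millions of dollars.
OCO1_COST = 209
GLORY_COST = 388

# Hypothetical test cost, assumed purely for illustration.
ASSUMED_TEST_COST = 20

ratio = failure_to_test_ratio(ASSUMED_TEST_COST, OCO1_COST + GLORY_COST)
print(f"Failure cost is {ratio:.2f}x the assumed test cost")
```

The same ratio could be computed for schedule by substituting months for dollars. It does not capture the consequences of failure that are harder to price, such as risk to crew.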

Failures that result from giving testing too low a priority can and do significantly increase costs for subsequent missions, as remediation measures are studied and implemented to compensate for previous missteps.

Additionally, fixing problems in developmental testing is approximately 10 times cheaper than fixing them in operational testing, and fixing problems in operational testing is approximately 10 times cheaper than fixing them on orbit and/or on a production line.
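As a rough illustration of that rule of thumb, the sketch below applies the approximate factor of 10 at each stage. The stage labels and the function name `relative_fix_costs` are assumptions made for this example, and the baseline of 1.0 is an arbitrary unit rather than a real cost.

```python
# Approximate escalation factor per stage, as stated in the text.
ESCALATION_FACTOR = 10

# Stages in the order a problem might be discovered (labels are illustrative).
STAGES = [
    "developmental testing",
    "operational testing",
    "on orbit or production line",
]


def relative_fix_costs(base_cost=1.0):
    """Map each stage to the relative cost of fixing a problem found there."""
    return {
        stage: base_cost * ESCALATION_FACTOR ** i
        for i, stage in enumerate(STAGES)
    }


costs = relative_fix_costs()
for stage, cost in costs.items():
    print(f"{stage}: {cost:g}x")
```

Under these assumptions, a problem that costs one unit to fix in developmental testing costs roughly 100 units to fix once the system is on orbit or in production.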

Testing’s Cost

There are discrete data points in calculating cost. For the tester, that means the total dollar amount spent on hardware and related costs, as well as the labor time involved.

For NASA specifically, quantifiable considerations have to do with facility availability, suitability and agility, meaning the ability to quickly ramp up as circumstances warrant. Is it worth it to have Agency research capabilities on standby just in case? Should those not in regular use be mothballed, decommissioned or even demolished? Which ones should be kept?

These are the salient points to consider:

- How frequently are facilities booked? Booked backlog appears the most reliable indicator of needed capability.

- Which facilities are essential in avoiding genuine catastrophe for users like NASA, the U.S. Department of Defense, and commercial and international interests?

- Which facilities can perform multiple test roles?

- Which facilities uniquely provide other critical information?

- When there are other options, which facilities provide the best value to the testing customer when considering time, proximity, existing databases, and cost?

Whenever there is a choice of approximately equal capabilities, it’s important to consider both macro costs of operation—salaries, utility costs, etc.—and external availability factors such as power access and noise constraints.

It does no good to have the best test facility with little or no ability to use it, or with test costs so high that they deter potential customers.

Best Practices

Those in the testing arena make it a best practice to know test options and develop relationships among practitioners to optimize capability effectiveness. Correlating similar efforts helps develop a team spirit and makes a stronger community.

It’s best to define the concept of testing by defining the program requirements up front. Both users and acquirers will therefore better understand possible trades among technology, cost and timelines.

Test capabilities (current and qualified people as well as facilities) require time, sometimes extended, to become operational after the initial installation, modification, and/or return from mothballing or abandonment.

Finally, if people don’t know what or where the facilities are, they won’t know enough to test appropriately. Computer modeling only goes so far, and works well only if enough data points can be gathered to make the model valid. Comparisons, like facility capability and price and/or ground test versus flight test (or no test), are always warranted, but ought to be informed by eyes-on facts.

Admittedly, NASA’s history is replete with examples of capabilities and facilities that go through a more or less predictable life cycle: brand new, sustained while needed and, eventually, demolished when outmoded and outdated. To protect the vital research NASA conducts, we must maintain and improve our facilities and capabilities to appropriate levels, and then divest them when they are no longer useful. And we should always be looking at what should or will come next, to prepare ourselves to meet and even exceed future mission requirements.

The United States has tremendous testing capabilities. We diminish or ignore testing at our national peril.