NARRATOR

It takes just a moment to send a message from where I am, at Goddard Space Flight Center in Greenbelt, Maryland, home of NASA’s Near Earth and Space Networks, to the Jet Propulsion Laboratory in Pasadena, California, home of NASA’s Deep Space Network. I can look up a phone number in the NASA directory and call them. I could type an email. I could send an instant message.

Collaboration between these NASA centers, some 2,500 miles apart, has never been easier. Web conferences can be arranged within minutes. Should an in-person meeting be necessary, a five-hour flight could take someone from Goddard to JPL by the close of business.

In the 1800s though, communications between these centers would have been impossibly inefficient. Mail had to be carried by hand to the West Coast. The delivery could take months by ship or as many as 25 days by stagecoach.

There was one exception, though. It wasn’t as fast as an email or a phone call, but this system could carry mail across the continent in an average of 10 days.

The Pony Express, in operation from April of 1860 to October of 1861, had mail carriers on horseback riding at breakneck speeds. Riders would switch mounts every 10 to 15 miles to give the horses a break. Every 75-100 miles, couriers would pass their mochillas, hybrid saddles-slash-mailbags, along to the next rider in just two short minutes.

The Pony Express, though a logistical feat, was never financially solvent. It was simply too expensive to use or operate. But the nail in the coffin for the express wasn’t fiscal failure. It became obsolete. In October of 1861, Western Union completed a transcontinental telegraph line. The Pony Express shut down two days later.

Though the Pony Express was only around for a year and a half, its legacy endures. The Smithsonian Institution’s National Postal Museum in Washington has an exhibit featuring aspects of the Pony Express’ short but fascinating history. Stories and histories of the Old West often use this incredible transformation in the speed of communications to clearly delineate the past from the present, a bygone era from our digital one.

Even so, the Pony Express serves as a simple (if imperfect) metaphor for a bold new networking technology that might just revolutionize space communications, enabling an interplanetary internet: Disruption Tolerant Networking, or DTN.

I’m Danny Baird. This is the Invisible Network.

…

On Monday, May 6, 2019, NASA Goddard played host to a networking luminary: Dr. Vint Cerf, vice president and chief internet evangelist at Google and JPL distinguished visiting scientist. Cerf came to speak to the communications and navigation community in a forum focused on his role in founding the internet and, more recently, the maturation of DTN technologies.

Wearing one of his signature three-piece suits, he outlined the development of IP, or internet protocol, which allows computers on Earth to interact with one another. It’s a foundational technology of the internet with a long history, but one that many are unaware of. What follows are Cerf’s recollections of that effort, from infancy to fruition, but there are some things you need to know before you listen:

First, some acronyms: ARPA, or Advanced Research Projects Agency, later called DARPA, the Defense Advanced Research Projects Agency, was and is an agency of the U.S. Department of Defense responsible for developing emerging technologies with potential military applications. ARPANET was an early network developed by ARPA, which became the foundation for the internet as we know it today.

Second, some technical details: Packet switching is a method of transmitting data over a network by breaking it into small units, called packets, that are routed individually and reassembled at the destination. TCP/IP, or transmission control protocol slash internet protocol, governs the connections between computers and the internet, enabling packet switching. Command and control is a military term that refers to the process by which information is spread through the chain of command and to allied forces.

Third, I’ve edited Cerf’s talk down for the purpose of this podcast, but there’s so much more to this history than what I’ve included. I’d encourage you to search the internet to learn more, but in the meantime, enjoy.

And, if it’s all just a little too technical, feel free to flip forward 20 minutes to catch the end of the episode and learn more about DTN.

VINT CERF

50 years ago, the first four nodes of the ARPANET were put into operation, and I was a graduate student at UCLA [University of California, Los Angeles]. My responsibility was to get this computer hooked up to the Interface Message Processor that formed one of the four packet switches of the original ARPANET. So, that was the very beginning of what became a wide area packet switching experiment — a very, very successful one.

At the time, packet switching was considered to be extremely risky. Most of the traditional communications people said it wouldn’t work — said they didn’t want to have anything to do with this crazy idea — but they’d be happy to sell dedicated circuits to us so we could build our stupid network.

So, we did and they did and it worked.

The Defense Advanced Research Projects Agency funded the ARPANET initially not to deal with nuclear war or anything else. They simply had a dozen universities that were doing research in artificial intelligence and computer science, and every year every single one of the computer science departments said to DARPA, “You need to buy us a world-class computer every year so we can do world-class research,” and even DARPA couldn’t afford to buy another mainframe computer, you know, a dozen of them, one for each university.

So they said, “We’re going to build a network, and you’re going to share.” And everybody hated that idea. But DARPA said, “We’re going to build a network, and you’re going to share.”

So this was a resource sharing experiment initially. It was also an experiment in packet switching.

My good friend Steve Crocker, whom I’d met in 1959, started a document series called Request for Comments (RFCs). Some of you who have had something to do with the Internet Engineering Task Force or other internet work will have encountered these documents; the first one came out in April of 1969, and there are some 8,800 of them now and counting.

Steve led the network working group, which developed the host-to-host protocols for the ARPANET. They’re the early equivalent of the TCP/IP protocols of the internet, somewhat less capable because they relied on the underlying network for reliability. The internet assumed that none of the networks were reliable and we had to do end-to-end recovery (which, by the way, is a feature also of the Delay and Disruption Tolerant Networking bundle protocol).

So Bob Kahn and I did the design of the internet over a six-month period from the spring to the fall of 1973. We wrote a paper, which was published in May of ’74. I started a series of experimental notes separate from the RFC series because we didn’t know whether this was going to work either. But we switched over to the RFC series as we got deeper into the design. So by December of 1974 we had a detailed specification of the TCP/IP protocols (actually, it was only TCP at that point).

By the way, if you’re wondering where the term “internet” came from, it was a short form of “inter-networking,” and it was used for the first time in the title of the December 1974 RFC.

While I was at ARPA, I was advised by my then-boss, Bob Kahn — also my partner in the design of this system — that if I got hit by a bus, I should make sure that somebody else knew how this thing was supposed to work. So we created the Internet Configuration Control Board. The name was selected purposefully to sound as boring as possible so nobody would want to be on it. And then I picked the lead engineers for the various universities that were contributing to the internet design, and they became the Internet Configuration Control Board.

I left DARPA in late 1982 to go into the private sector, and my successor renamed the ICCB the Internet Activities Board, with 10 task forces. Out of that came the Internet Engineering Task Force, which is one of the larger of the task forces, and the Internet Research Task Force. And we required in this standardization process that you had to show multiple implementations designed and built from the spec, not from sitting down with the other guy who had something working. This was to verify that the spec was sufficient to actually make something work.

So in 1977, while I was still at ARPA, I felt it was important to show that this stuff could actually link different kinds of packet-switched nets together, because the whole idea was to allow an arbitrarily large number of distinct, separate and disparate packet switching systems to interwork.

So we had a mobile packet radio network operating out on the Bayshore Freeway, in the San Francisco Bay Area. We had a packet satellite system that we built over the Atlantic using Intelsat IV-A. And then we had the ARPANET, which had been extended to Europe by that time using an internal satellite hop.

Now the model — I should say, the purpose — that drove this particular design was the use of the internet technology for Command and Control. So by this time, we’re saying, “Packet switching works. How do we use it to help us use computers in Command and Control?”

Of course, the immediate, obvious problem is: that means computers in airplanes, computers in ships at sea and computers in mobile vehicles. And, up to that point, all we had were dedicated telephone lines connecting fixed-installation computers. So we needed — you know, you can’t use wires to connect the tanks, because they run over the wires and they break, and airplanes never make it off the tarmac, and the ships get all tangled up.

So we had to use mobile radio and satellite. They had different speeds, different error rates, different latencies, different packet sizes, different addressing structures. And so, we had to figure out how to bind them all together and that’s what TCP/IP did. And so, remarkably, on Nov. 22, 1977, we demonstrated that this stuff actually works in that elaborate environment.

Then comes the spread of the internet. So how did this actually get out there? Up until this point, this was an all-DARPA thing.

So, my good friend Bob Metcalfe, who invented ethernet when he was at Xerox PARC, started a company called 3Com in 1979 to sell commercial ethernets, and he built a TCP/IP implementation for the Unix operating system so that his ethernet would be useful. The National Science Foundation sponsored something called CSNET (Computer Science Network), starting in 1981, again using the TCP/IP protocols. Sun Microsystems got started, and they decided to use open protocols and open designs, so they adopted TCP/IP. Berkeley was paid by DARPA to implement another version of TCP/IP on Unix, and that was Bill Joy’s contribution. Of course, he was one of the founders of Sun Microsystems.

Then comes commercialization in one respect, and that’s equipment. So Cisco Systems and Proteon, and others subsequently, like Juniper, started building routers. What was interesting is that the way you built a router originally was that you found a computer and a graduate student, and you wrapped the graduate student around the computer, and that turned it into a router. The problem we had is that we ran out of graduate students.

So Cisco had its origins at Stanford University. They started building equipment that you could essentially buy so you could build a piece of internet. And as the system began to grow, we realized that the naming system needed to be redesigned.

Originally, all we were doing was sending a file around with a list of all the host names and their IP addresses. That was it. So this file would go out once or twice a day and you’d update all of your tables.

But as the network started to get bigger and bigger, with more networks and more sites involved, we had to develop a richer architecture. So the domain name system, which is a hierarchical, distributed naming system, was developed in 1984 by Paul Mockapetris and Jon Postel.
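To make the contrast concrete, here is a toy Python sketch of the two approaches: one shared host table versus a lookup that walks down a hierarchy of zones. The host names, zone layout and helper names (`lookup_flat`, `lookup_hierarchical`) are hypothetical, the addresses come from the 192.0.2.0/24 range reserved for documentation, and real DNS adds caching, record types and network queries that this sketch leaves out.

```python
# Toy contrast between a flat host table and hierarchical name lookup.
# All names, zones and addresses here are hypothetical (192.0.2.0/24 is
# reserved for documentation).

# Flat approach: one table listing every host, copied out to every site.
HOSTS_TABLE = {
    "cs.ucla.edu": "192.0.2.10",
    "ai.mit.edu": "192.0.2.20",
}

# Hierarchical approach: each zone knows only its own names and which
# zone is responsible for each child.
ZONES = {
    ".": {"edu": ("delegate", "edu.")},
    "edu.": {"ucla": ("delegate", "ucla.edu."), "mit": ("delegate", "mit.edu.")},
    "ucla.edu.": {"cs": ("address", "192.0.2.10")},
    "mit.edu.": {"ai": ("address", "192.0.2.20")},
}


def lookup_flat(name: str) -> str:
    """Every site needs the whole, up-to-date table to answer."""
    return HOSTS_TABLE[name]


def lookup_hierarchical(name: str) -> str:
    """Walk from the root, following delegations one label at a time."""
    # "cs.ucla.edu" is resolved right to left: edu, then ucla, then cs.
    labels = list(reversed(name.rstrip(".").split(".")))
    zone = "."
    for i, label in enumerate(labels):
        kind, value = ZONES[zone][label]
        if kind == "address":
            if i != len(labels) - 1:
                raise KeyError(name)  # address found before the name ended
            return value
        zone = value  # hand the rest of the query to the child zone
    raise KeyError(name)  # name ended on a delegation, not an address


if __name__ == "__main__":
    print(lookup_flat("cs.ucla.edu"))          # 192.0.2.10
    print(lookup_hierarchical("cs.ucla.edu"))  # 192.0.2.10
```

The design point is that no single file has to list every host: each zone can be run by a different organization and updated on its own schedule.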

And then Jon, bless his heart, who was the RFC editor, becomes the Internet Assigned Numbers Authority (IANA). He is personally handing out IP address space — and keeping track of it in a notebook somewhere — and handing out domain names. He does this all himself. One guy: RFC editor, domain names and IP address allocations.

Well, at this point, the National Science Foundation decides that it’s going to build five supercomputers. And it’s thinking, “How are we going to get the 3,000 research universities in the United States connected to the supercomputers? Maybe we should build a network.” Well, this network is going to have to connect 3,000 universities plus the five supercomputer centers.

And then they asked, “What protocols should we use to achieve this objective?”

And a guy from Ireland who had been brought into NSF to run the supercomputer center program decided, in 1985, that they would use the TCP/IP protocols to do this. This caused a gigantic hue and cry, because in 1978, in Europe, the [International Organization for Standardization] had launched a project called Open Systems Interconnection.

And so, we’re now in a pitched battle between TCP/IP and OSI. Except nobody had implemented OSI. They just had stacks of documentation — beautifully documented, fantastic new vocabulary, seven layers, you know, wonderfully documented.

So this Irish guy says, “I need something that works.” So he chooses TCP/IP, and he [received] a lot of incoming as a result of that decision, but he stuck to his guns. And a good friend of mine, David Mills, built the first NSFNET backbone on [56]-kilobit lines, which at the time were, you know, moderately high speed.

It died instantly, overloaded by demand and traffic. And so, the NSF’s response was to design and build a T1 network with the help of MCI, Merit and IBM.

And in the meantime, in 1986, my good friend Dan Lynch starts a company called Interop to show the private sector how TCP/IP works and how all of their equipment could be made to interwork.

So they had a big show-net, the big ethernet — the yellow cable. And, in order to be at Interop, you had to show up and show your stuff worked with everybody else’s stuff. You can’t imagine how much debugging got done at those Interop things, because these guys are out there trying to sell their gear and then buyers are saying, “Show me it works.” So, it was a fabulous arrangement all around because lots and lots of people got to see how it worked and lots of people got their products to work as a consequence of that.

But, I just want to draw attention to one of the things that NSF did that turned out to be super helpful. And, again, I want you to be thinking about this in terms of commercialization and distribution of knowledge and access. In this particular case, NSF said, “Okay, we’re going to build this backbone network, but we need to connect 3,000 universities.” And they thought, “The poor guys who were building this backbone would have 3,000 potential customers, and that would be very distracting.”

So they said, “Hey, this internet thing allows you to build multiple networks and connect them together.” That was the whole idea.

So they said, “Why don’t we build a dozen intermediate level networks and have them be regional — have various universities cooperate with each other to build these regional networks. And then the regional networks would be connected to the internet backbone, the NSFNET backbone.”

Well, there were only a dozen regional networks. So that made for a nice, modest-size customer base for the NSFNET backbone. And in the meantime, the regional networks had, you know, several hundred possible customers spread across all dozen of those. And so the result was a rapid growth in the number of networks that were part of the NSFNET or part of the internet backbone.

I’ve already mentioned the Interop shows. In 1988, I walked into one of [Dan’s] shows at the Moscone Center in San Francisco; 50,000 people attended. I was with Eric Benhamou, who was then the head of 3Com, and the first thing we encountered when we walked in was a two-story Cisco display.

So I stopped. I turned to Eric and I said, “How much do these cost?”

And he said, “About a quarter of a million dollars.”

Remember, this is 1988, when a quarter of a million dollars — you know — was a significant amount of money.

And then he said, “That doesn’t count the cost of the people to man the thing for a week.”

And I just stood there — sort of jaw dropping — thinking, “My God, somebody thinks they’re actually going to make money out of the internet.”

And then I thought, “Wait a minute, how in heck are we ever going to get the general public up on this system?” And I figured the government wasn’t going to pay for everybody’s home use of the internet.

So I said, “How do we build an economic engine under this thing?”

Around that time, around ’88, I realized, “Oh, there’s a rule that says no commercial traffic is allowed on the government-sponsored backbones.”

So I thought, “How am I going to break that rule?”

So I had built MCI Mail — a commercial system for MCI — when I got into the private sector. So I went to the then-Federal Networking Council. And I said, “Would you allow me to perform an experiment to connect the commercial MCI Mail system to the internet to see whether we could make the two protocols work together?”

Of course, the real reason is we wanted to break that rule that said no commercial traffic. So they said, “OK, for a year.”

So in 1989, we got the MCI Mail system up and running on the internet backbone. And two things happened as a result. The first one is that three commercial internet services popped up that year, 1989: UUNET, which eventually ended up at MCI, which is now at Verizon, and PSINet, which was a commercialization of the New York State Education and Research Network, and CERFnet out in San Diego, which was originally supposed to be called S-U-R-F net, because what else would you do in San Diego? They had a whole ad campaign: “surf the internet.” And then they discovered that the guys in the Netherlands had already taken the term S-U-R-F net.

So somebody says, “Well, why don’t we call ourselves the [California Education and Research Federation] network? It sounds the same.”

And then somebody says, “Oh, maybe we should call Vint.”

So, they call me up and they said, “Is it OK if we call our network C-E-R-F net?”

And my first thought was, “Oh, if they screw it up, am I going to be embarrassed?”

And I said, “Wait a minute, people name their kids after other people and if the kids don’t come out right they don’t blame the people they named them after.”

So I said, “Sure, why not.”

So I flew out in July of ’89, and Susan Estrada — who was the executive director at the time — and I got a plastic bottle full of glitter and we smashed it over a Cisco router and launched the CERFnet.

So those were the first three commercial networks. At about the same time, the other commercial email services, like Telemail, OnTyme and CompuServe, heard about MCI Mail being connected to the internet. And they said, “Wait a minute, those MCI guys can’t have this special position. We want to be connected to the internet too!”

And the Federal Networking Council said, “OK.” So they all got hooked up.

And then, surprise, surprise: these networks had been completely independent of each other until they hooked up to the internet. All of a sudden, every one of their customers could talk to every one of their competitors’ customers because they were all using the same internet email protocols. That was a little surprise, because the internet was free at the time, and so that messed up their business model just a little.

In any case, those were the sort of unexpected side effects.

And then along comes Tim Berners-Lee. He’s at CERN. He’s a physicist. And he’s trying to figure out, “How do I help my physics buddies share their papers with each other, you know, their formatted text and imagery and graphs and charts. How do we make it easy for them to find their stuff?”

And he invents the World Wide Web: HTML for formatting and HTTP, the hypertext transfer protocol, for transport. And nobody notices except a couple of guys at the National Center for Supercomputing Applications, Marc Andreessen and Eric Bina, who build the Mosaic browser, which is a graphical interface to the World Wide Web. You know, a million downloads in two weeks; everybody goes nuts. The internet is suddenly a Technicolor magazine. It’s got imagery and formatted text and eventually video and audio and everything else. So that starts to take off like crazy.

Right about that time, NSF said, “We’re not going to fund the secretariat for the Internet Engineering Task Force anymore.”

So I said, “OK, we’ve got to find a nonprofit to go underwrite the cost of running the secretariat.” So Bob Kahn and I started the Internet Society in 1992.

Another reason that I’m going through this is to draw your attention to the fact that, in this evolving internet environment, we would create new institutions as the need arose. We didn’t try to anticipate ahead of time all the different institutional elements that would be needed, but this was one that was needed for funding purposes. And eventually, of course, it has played a significant role in policy development and articulation. In Europe, there was Réseaux IP Européens, or European IP Networks (RIPE). They started implementing TCP/IP, again against the background of “OSI should conquer everything.”

So they took a risk and joined us in that space.

The National Science Foundation paid for connections — international connections — between the European research networks, the American research networks, the Japanese research networks. So NSF, once again, sort of plowed the ground to help make this a global phenomenon.

The Asia Pacific Network Information Center gets started in ’93 and is responsible for allocating IP address space and domain names. The RIPE NCC plays a similar role in Europe.

Netscape Communications gets founded to do [a commercial version of] the Mosaic browser and goes public in 1995. Its IPO goes through the roof, and the dot-com boom is on.

So, just to catch you up to the end of the institutional story, the Internet Corporation for Assigned Names and Numbers (ICANN) gets started in [1998]. So the authority for all of this stuff, the policy for the internet [names and numbers], went from the Department of Defense, to the National Science Foundation, to the Department of Commerce (by way of NTIA), and now, finally, to ICANN, which operates independently.

Every time somebody invented a new networking technology, somebody said, “OK, it’s over for the internet.”

And I used to tell everybody, “You know, IP runs over everything, including you if you don’t get out of the way.” So that’s what happened.

The internet evolved successfully because of its open architecture. It was designed to be evolvable. It was designed to invite new applications, new protocols, new implementations, and we should be thinking about that.

This freedom to create new services and applications is vital.

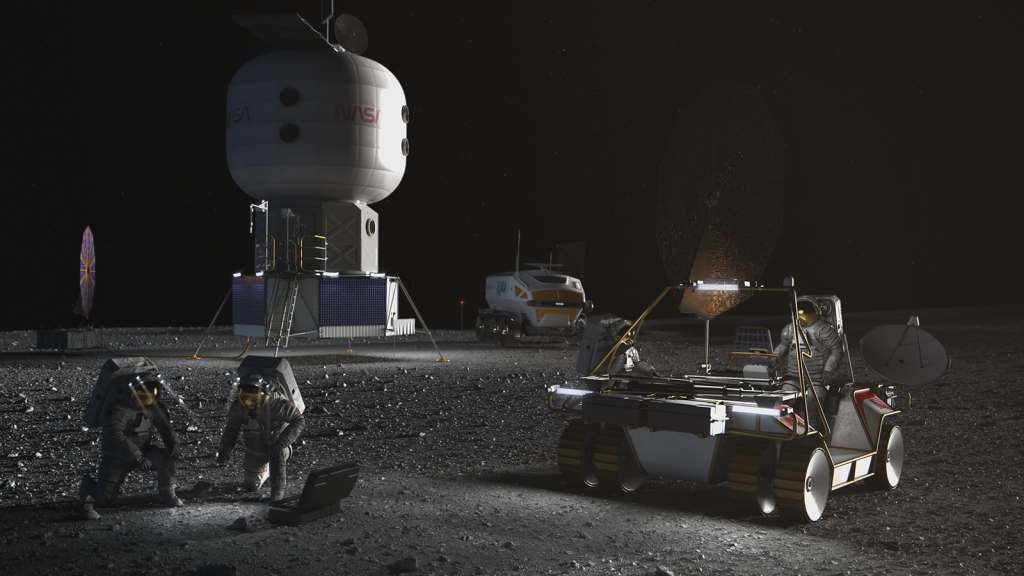

And, as we think about exploration of the rest of the solar system, we’re going to want to have an architecture that will invite new applications and new protocols as the need arises. I think, in the internet world, we had to have incentives for the business world to build and operate and sustain, maintain and grow this stuff.

Final point is, “The future isn’t written yet.” You remember that line from “Back to the Future”?

We get to write it. So that’s your job. We get to write the future of the interplanetary network. We get to write the future of PACE and some of the other missions that are coming up.

That’s not only a big responsibility, but it’s also an absolutely wonderful opportunity. So I join you in looking forward to writing that future. Thank you very much.

…

NARRATOR

While the internet and IP have become ubiquitous, Cerf’s contributions to communications and networking are far from behind him. In the late ’90s, he was on the team that originated the DTN protocols. Today, his work on DTN seeks to revolutionize networking once again, allowing communications engineers to extend the internet deep into space.

What is DTN? What makes it different from standard IP? Goddard communications architect and DTN engineer Dave Israel explains:

DAVE ISRAEL

We’re bringing internet-style operations to locations and scenarios where the internet protocols won’t work, using network-based store-and-forward protocols. The [“D” in] DTN originally stood for delay. So the first issue is the speed-of-light time.

IP, and particularly things like the TCP/IP protocol, involves a lot of back and forth between your computer and whatever computer you’re connected to: quick messages that go back and forth that the user never notices because there’s such a short delay. Once you start going distances where the speed-of-light time matters, there’s nothing you can do about it (we still haven’t worked out travelling or communicating faster than the speed of light), so you get these delays. So, if you wanted to say “Hello” to somebody and they say “Hello” back, and there’s a 10-minute speed-of-light time delay, then that takes 20 minutes for that exchange to happen.

So if you have two end points that need to exchange little things back and forth in order for a protocol to work, then that’s just not going to cut it when you’re far away.
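The arithmetic behind Israel’s example can be sketched in a few lines of Python. This is a simplified model, assuming straight-line propagation at the speed of light and ignoring processing and queuing time; the function names are illustrative and not part of any DTN software.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition


def one_way_delay_s(distance_km: float) -> float:
    """Seconds for a signal to cover a straight-line distance."""
    return distance_km * 1_000 / SPEED_OF_LIGHT_M_PER_S


def chatty_exchange_s(one_way_s: float, round_trips: int) -> float:
    """Time for a protocol that must finish `round_trips` back-and-forth
    exchanges (a message out plus a reply back) before it is done."""
    return 2 * one_way_s * round_trips


if __name__ == "__main__":
    ten_minutes = 10 * 60
    # Israel's example: "Hello" out and "Hello" back, 10 minutes each way.
    print(chatty_exchange_s(ten_minutes, 1) / 60)  # 20.0
    # A hypothetical protocol that needs three round trips to finish.
    print(chatty_exchange_s(ten_minutes, 3) / 60)  # 60.0
    # The average Earth-Moon distance is roughly 384,400 km.
    print(round(one_way_delay_s(384_400), 2))      # 1.28
```

On Earth, each of those round trips takes milliseconds and goes unnoticed; at interplanetary distances, every extra exchange a protocol requires adds the full round-trip delay again.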

And then the other aspect built into that example is the assumption that the two end points of the conversation are connected at the same time. Let’s say there’s something on the far side of the Moon and we want to put a relay up. If we could only communicate during the times when the relay is in view of both the thing on the surface and Earth at the same time, that would really limit the amount of time we’d be able to send data.

But if we could do it in pieces, and have the user on the surface send the data to the relay whenever the relay is in view, and then have the relay send the data back whenever Earth is in view, then you get more opportunities to send more data. And DTN provides automated ways to do that.
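The benefit of storing data at the relay can be illustrated with a toy Python model. It assumes a hypothetical schedule of ten time slots, one unit of data per link per slot, and a surface user that always has data waiting; real contact plans and data rates would differ, and the function names are illustrative only.

```python
def end_to_end_delivered(surface_to_relay: set[int], relay_to_earth: set[int]) -> int:
    """Without storage at the relay, a unit of data gets home only in
    time slots when both links happen to be up at once."""
    return len(surface_to_relay & relay_to_earth)


def store_and_forward_delivered(surface_to_relay: set[int],
                                relay_to_earth: set[int],
                                horizon: int) -> int:
    """With storage at the relay, each link is used whenever it is up."""
    stored = 0     # units held on the relay, waiting for Earth to come into view
    delivered = 0  # units that have reached Earth
    for t in range(horizon):
        if t in surface_to_relay:
            stored += 1          # surface hands one unit to the relay
        if t in relay_to_earth and stored > 0:
            stored -= 1          # relay passes one unit down to Earth
            delivered += 1
    return delivered


if __name__ == "__main__":
    # Hypothetical schedule over ten time slots.
    surface_window = set(range(0, 6))   # relay sees the surface in slots 0-5
    earth_window = set(range(4, 10))    # relay sees Earth in slots 4-9
    print(end_to_end_delivered(surface_window, earth_window))             # 2
    print(store_and_forward_delivered(surface_window, earth_window, 10))  # 6
```

With these assumed windows, requiring both links at once uses only the two overlapping slots, while holding data on the relay lets each link be used for its full window.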

NARRATOR

To simplify, let’s look back at the Pony Express. Each DTN node is like a station where horses or mail carriers can rest before moving on to the next one. If there’s no clear path to the next station, the mail can remain at that station until a path clears. Alternatively, if another path opens up, the mail can move through the network of Pony Express stations, or DTN nodes, without passing through the downed station.

So, unlike the end-to-end, computer-to-computer IP connections used in the modern internet, DTN technologies tolerate the temporary disruptions often experienced by spacecraft far from our planet. Just as the Pony Express riders conquered great distances by breaking the journey into smaller pieces, DTN allows data to travel, piecemeal, through a network composed of many stopping points and alternate routes.
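The station-to-station picture can be sketched as a toy forwarding loop in Python. This is a simplified illustration, not the Bundle Protocol or any NASA routing software: the four-station network, the preference-ordered `ROUTES` table and the `example_link_up` schedule are all hypothetical.

```python
from typing import Callable

Route = dict[str, list[str]]
LinkUp = Callable[[int, str, str], bool]


def forward_bundle(routes: Route, link_up: LinkUp, start: str, dest: str,
                   max_steps: int = 100) -> list[tuple[int, str]]:
    """Move one bundle hop by hop, holding it wherever no onward link is up.

    routes[node] lists candidate next hops toward dest, most preferred first.
    Returns (step, node) each time the bundle arrives somewhere new."""
    node = start
    history = [(0, node)]
    for step in range(1, max_steps + 1):
        if node == dest:
            break
        for nxt in routes.get(node, []):
            if link_up(step, node, nxt):
                node = nxt
                history.append((step, node))
                break
        # If no candidate link was up, the bundle stays stored at `node`.
    return history


# Hypothetical four-station network: A prefers B, but B is down, so the
# bundle detours through C and waits there until the C-to-D leg opens.
ROUTES: Route = {"A": ["B", "C"], "B": ["D"], "C": ["D"]}


def example_link_up(step: int, a: str, b: str) -> bool:
    if (a, b) == ("A", "B"):
        return False       # the downed station is never reachable
    if (a, b) == ("C", "D"):
        return step >= 3   # this leg only clears at step 3
    return True


if __name__ == "__main__":
    print(forward_bundle(ROUTES, example_link_up, "A", "D"))
    # [(0, 'A'), (1, 'C'), (3, 'D')]
```

The bundle skips the downed station, then sits at C for a step rather than being dropped, which is the "wait for the path to clear" behavior in the metaphor.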

I like to think that DTN lets the data get “creative.” Even if the path home isn’t obvious, DTN finds a way.

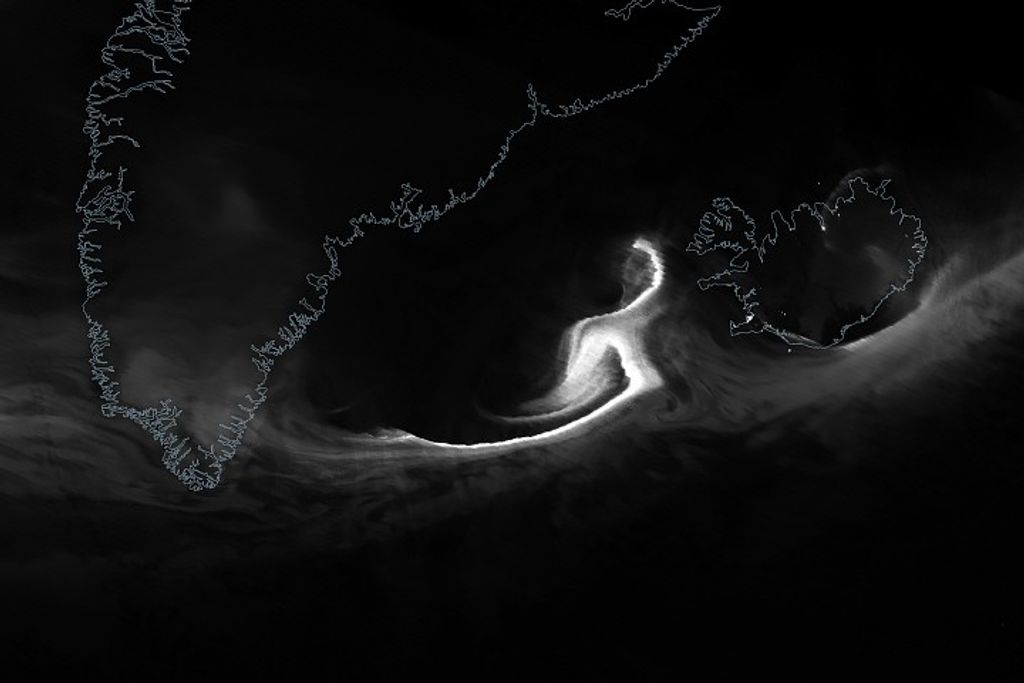

DTN technologies and protocols wouldn’t just benefit spacecraft far from home. Implementing DTN on Earth could improve communications services for remote locations with spotty connectivity, like the rainforest or the Arctic.

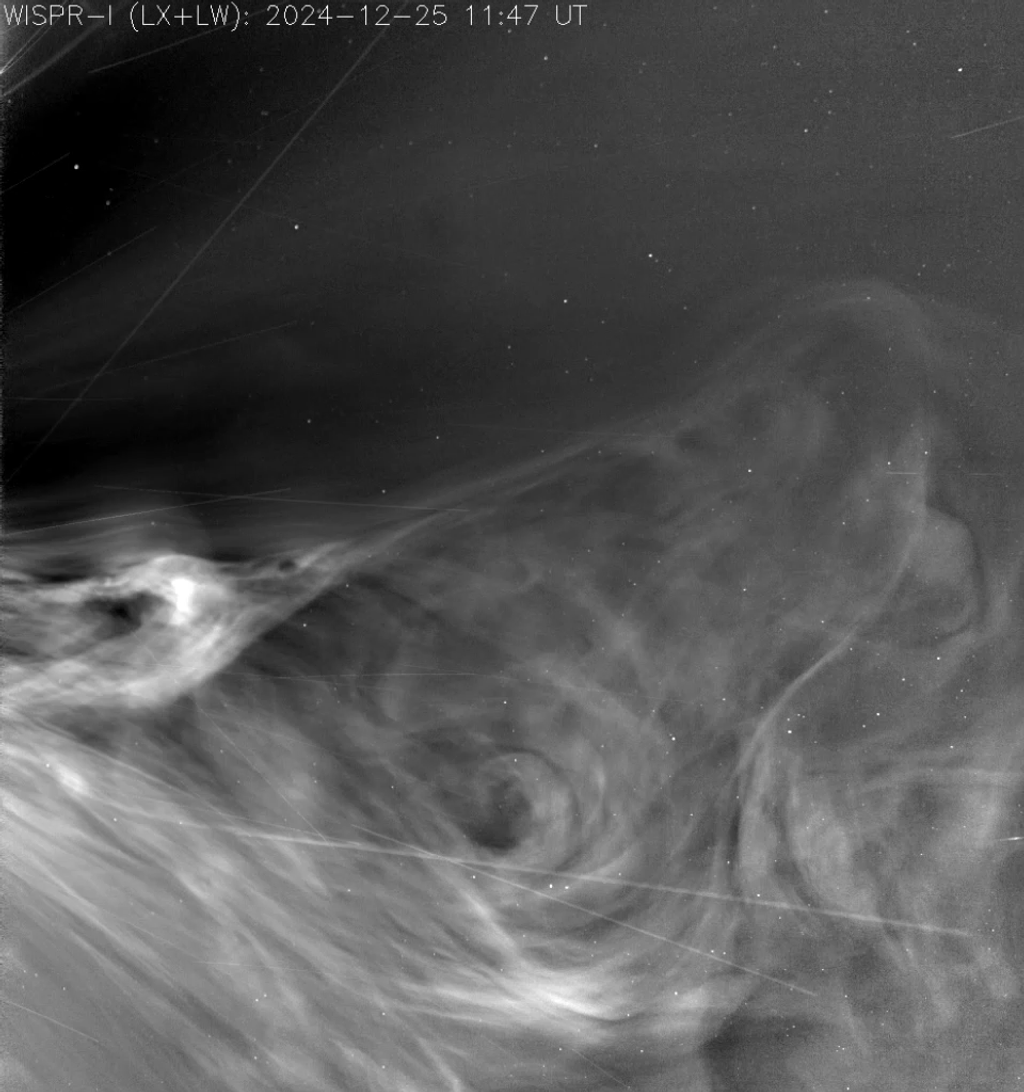

In 2018, NASA used DTN to demonstrate this terrestrial benefit by sending a selfie from the National Science Foundation’s McMurdo Station in Antarctica to the International Space Station, which has been using DTN for science data since 2015.

Starting at McMurdo, DTN software on a mobile phone bundled the selfie data for its journey to the space station. The bundles traveled from the McMurdo ground station to NASA’s White Sands Complex via the Tracking and Data Relay Satellite system, a constellation of relay satellites 22,000 miles overhead. Then, a series of DTN nodes forwarded the bundles to NASA’s Marshall Space Flight Center in Huntsville, Alabama, the access point to the space station’s DTN network. The bundles were then forwarded to the space station via another satellite link. The final DTN node extracted the picture data from the DTN bundles that originated from Antarctica, reassembled the original picture and displayed it on board the station.

Data transmission has always been a challenge for Antarctic researchers. With scant civil infrastructure and very few providers able to serve the geographic South Pole, the data demands of scientists hoping to get their data from Antarctica back to their research institutions far exceed the available capacity. Communication disruptions can have serious consequences for researchers, hampering collaboration between distant scientists.

Though Antarctic researchers are not communicating across interplanetary distances, McMurdo’s remote location and minimal infrastructure make it an ideal candidate to benefit from DTN. If DTN data bundles from the Antarctic fail to transmit all the way through the network, they can simply go into storage until an alternate path off the continent can be found. If the bundles were all part of a larger file, that file can be reassembled at the final destination once all the bundles have found their way through the network.
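Splitting a file into pieces and rebuilding it at the destination can be sketched in Python as follows. The dictionary fields (`file_id`, `index`, `total`) and the helper names are a hypothetical stand-in chosen for readability; the actual Bundle Protocol defines its own fragmentation fields, which this sketch does not reproduce.

```python
import random
from typing import Optional


def make_bundles(data: bytes, payload_size: int, file_id: str) -> list[dict]:
    """Split a file into numbered pieces that can travel independently."""
    total = (len(data) + payload_size - 1) // payload_size
    return [
        {"file_id": file_id, "index": i, "total": total,
         "payload": data[i * payload_size:(i + 1) * payload_size]}
        for i in range(total)
    ]


class Reassembler:
    """Collects pieces at the final destination, in whatever order they arrive."""

    def __init__(self) -> None:
        self.parts: dict[str, dict[int, bytes]] = {}

    def receive(self, bundle: dict) -> Optional[bytes]:
        parts = self.parts.setdefault(bundle["file_id"], {})
        parts[bundle["index"]] = bundle["payload"]  # a duplicate just overwrites
        if len(parts) < bundle["total"]:
            return None  # still waiting on pieces held up elsewhere in the network
        return b"".join(parts[i] for i in range(bundle["total"]))


if __name__ == "__main__":
    selfie = bytes(range(256)) * 4          # stand-in for the picture data
    bundles = make_bundles(selfie, 100, "selfie-001")
    random.Random(0).shuffle(bundles)       # pieces arrive out of order
    rx = Reassembler()
    results = [rx.receive(b) for b in bundles]
    print(len(bundles), results[-1] == selfie)  # 11 True
```

Because each piece carries its own position and the total count, the destination does not care which route a piece took or how long it sat in storage along the way.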

…

Before Vint Cerf spoke with the communications and navigation community at Goddard, he went to visit the team working on the Plankton, Aerosol, Cloud, ocean Ecosystem mission, or PACE. PACE is an early adopter of DTN, and will become the first science mission to implement the technology in space for operations.

The PACE mission will advance scientists’ understanding of our oceans and build on decades of NASA research into ocean systems. The PACE satellite has instruments capable of studying everything from the carbon cycle to blooms of phytoplankton, microscopic algae with an outsized impact on ocean ecosystems. The benefits of PACE research will be felt worldwide, aiding in the assessment of water and air quality, providing insight into Earth’s climate system and monitoring ocean ecology.

Andre Dress, PACE mission manager:

ANDRE DRESS

The communications support we need from them has multiple facets to it, right? So, there’s just the real-time telemetry that gets transmitted down to the ground stations through the NEN [Near Earth Network] here at Goddard so that the people at the mission ops control center can see the data.

And then there’s recorded data. So [it] comes in a couple flavors. So there’s housekeeping data — housekeeping data is like temperatures, engineering units on pointing, stuff like that — and then there’s the science data. So, all that data is captured and stored on the spacecraft and then transmitted back through the NEN back here to Goddard, where it’s processed.

From a DTN perspective, it helps with releasing memory. The faster we can acknowledge that the data is here on the ground, captured and stored, the sooner we can release the memory on the spacecraft.
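Dress’s point about acknowledgments can be pictured with a toy model of onboard storage in Python. The `OnboardStore` class, its byte capacity and the acknowledgment flow are hypothetical simplifications, not PACE flight software; the key behavior is that downlinked data stays in memory until the ground confirms receipt.

```python
class RecorderFull(Exception):
    """Raised when there is no room left to record new data."""


class OnboardStore:
    """Toy model of spacecraft recorder memory that frees data only after
    the ground confirms it has been captured and stored."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.held: dict[int, bytes] = {}
        self.next_id = 0

    def used(self) -> int:
        return sum(len(p) for p in self.held.values())

    def record(self, payload: bytes) -> int:
        if self.used() + len(payload) > self.capacity:
            raise RecorderFull(f"{len(payload)} bytes won't fit")
        bundle_id = self.next_id
        self.next_id += 1
        self.held[bundle_id] = payload
        return bundle_id

    def downlink(self) -> list[tuple[int, bytes]]:
        # Send copies to the ground; nothing is freed yet, because receipt
        # has not been confirmed and the data may need to be resent.
        return list(self.held.items())

    def acknowledge(self, bundle_ids: list[int]) -> None:
        # The ground has the data safely stored, so this memory can be reused.
        for bundle_id in bundle_ids:
            self.held.pop(bundle_id, None)


if __name__ == "__main__":
    store = OnboardStore(capacity_bytes=10)
    hk = store.record(b"temp")    # housekeeping: 4 bytes
    sci = store.record(b"ocean")  # science: 5 bytes
    print(len(store.downlink()), store.used())  # 2 9 (still held after sending)
    store.acknowledge([hk])       # ground confirms the housekeeping data
    print(store.used())           # 5
    store.record(b"bloom")        # now there is room again
    print(store.used())           # 10
```

The sooner acknowledgments arrive, the sooner `acknowledge` can free space, which is the memory benefit Dress describes.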

NARRATOR

As an early adopter of DTN, PACE is proving the technology as an operational capability, encouraging other science missions to use DTN protocols. This will go a long way toward infusing the innovation into space communications networks.

The benefits to the PACE mission and to the DTN development effort could change the way we think about both Earth’s natural systems and NASA’s digital ones. It’s a symbiotic relationship — one of the many ways NASA explores as one.

John Verville works to infuse DTN technologies into new missions.

JOHN VERVILLE

Infusion is where you take a technology that is an opportunity (something that we know has a lot of benefit but that doesn’t yet have a specific mission using it or depending on it), and “infusing it” basically means taking it, developing it and building it so that it’s ready to meet mission needs. So we spend a lot of time looking at the needs from an operations point of view, which in many ways are different from the needs from a pure technologist’s point of view.

And then operationalization is things like, “How is an operator going to work with this technology or this software? How is it going to interact with the other elements within the network or the system? How are we going to control it? How are we going to get status from it? How is it going to interact with other things?” That’s sort of what we call “con ops,” or “concept of operations.”

And so those are the types of things where, working with technologists, we can build up their repertoire and actually operationalize the technology.

…

NARRATOR

Commonly, people refer to something as the “Wild West” to capture a sense of lawlessness. Many describe the early days of the internet as the Wild West, because — at the time internet networking technologies were developed — there were few regulations to guide developers. Pioneers like Vint Cerf had a blank slate to chalk up as they saw fit.

Out of that lawless chaos, our digital age was born.

But I think the phrase “Wild West” also carries tinges of optimism. It’s about wide-open spaces and boundless innovation. It’s about the Pony Express, a logistical marvel that carried mail faster than ever before, connecting two disparate ends of this vast continent. It’s about the internet, a network that connects all of humanity, bridging oceans and forests and even the Arctic.

And now: it’s about DTN — a technology that promises to connect us to each other no matter what planet we might call home.

…

This season of “The Invisible Network” debuted in November of 2019. The podcast is produced by the Space Communications and Navigation program, or SCaN, out of Goddard Space Flight Center in Greenbelt, Maryland. Episodes were written and recorded by me, Danny Baird, with editorial support from Matthew Peters. Our public affairs officers are Peter Jacobs of Goddard’s Office of Communications, Clare Skelly of the Space Technology Mission Directorate and Kathryn Hambleton of the Human Exploration and Operations Mission Directorate.

Special thanks to Barbara Adde, SCaN Policy and Strategic Communications director, Rob Garner, Goddard Web Team lead, Amber Jacobson, communications lead for SCaN at Goddard, and all those who have lent their time, talent and expertise to making “The Invisible Network” a reality. Be sure to rate, review and subscribe to the show wherever you get your podcasts. For transcripts of the episodes, visit NASA.gov/invisible. To learn more about the vital role that space communications plays in NASA’s mission, visit NASA.gov/SCaN.