Innovators at Armstrong are exploring how virtual reality (VR) and augmented reality (AR) can progress from the laboratory to the cockpit to help accomplish research missions, inspire current and next-generation workforces, and publicize our center activities.

VR typically refers to replacing reality with a completely virtual environment, and AR refers to layering virtual components onto a real-world environment. Current and recent activities include:

- Enabling safer and more efficient operation of unmanned aerial systems in the National Airspace System

- Exploring safer and more efficient flight testing at Armstrong

- Helping with aircraft maintenance for our fleet

- Showcasing the exciting research we are conducting to the public

Activities are funded through NASA’s Aeronautics Research Mission Directorate, NASA Center Innovation Fund, and innovation time. This research provides an opportunity to share ideas, collaborate, recruit new members, and showcase center accomplishments. Future plans include a VR/AR Demonstration Day and VR/AR courses as part of the Armstrong University curriculum.

Building Trust

Armstrong researchers are collaborating on an effort to build a basis for certification of autonomous systems by defining metrics for trustworthiness and developing a simulation environment in which these concepts are explored. Part of the Convergent Aeronautics Solutions Project, the Autonomy Teaming and Trajectories for Complex Trusted Operational Reliability (ATTRACTOR) sub-project employs multi-agent team interactions, explainable artificial intelligence (XAI), persistent modeling and simulation, and analyzable trajectories in the context of mission planning and execution. The simulation environment is similar to online gaming environments in which participants interact with each other, affect their environment, and expect the simulation to persist and change.

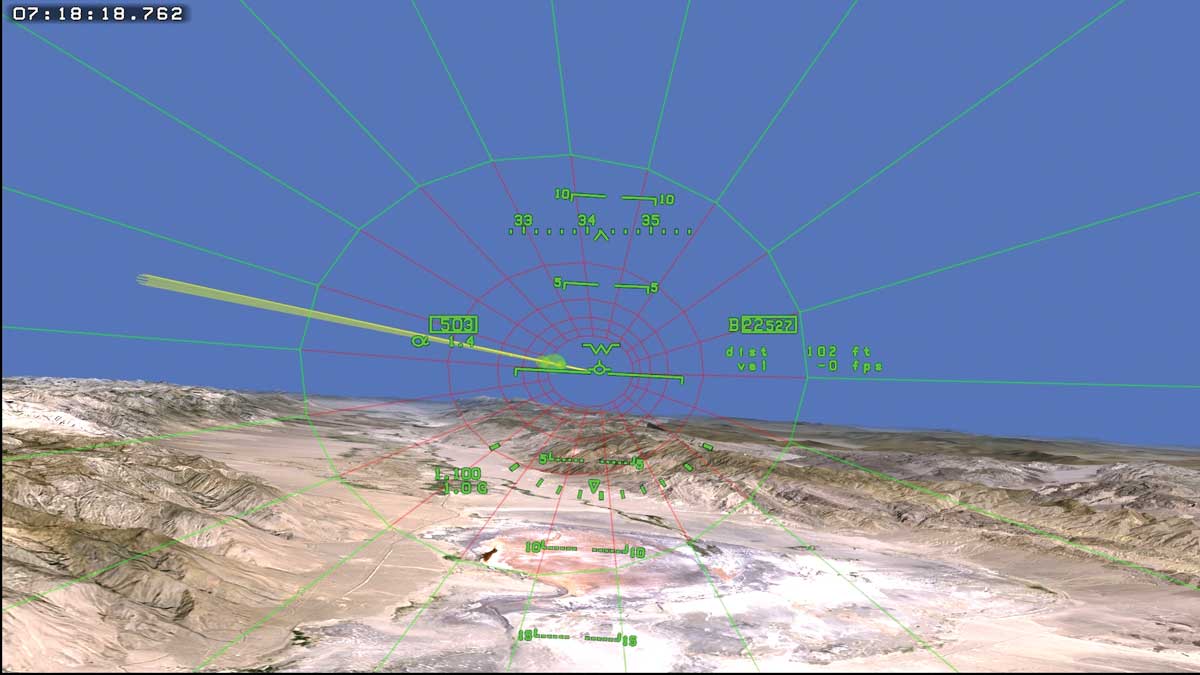

The focus of ATTRACTOR at Armstrong is working with small autonomous unmanned aerial systems (UAS) in a simulation environment to evaluate how virtual and augmented reality systems can be useful during mission planning, real-time execution, and post-test analysis for simulated and real-world environments.

Work to Date

Armstrong researchers are leveraging the built-in gaze, gesture, and voice features of a HoloLens® headset for mission planning and real-time execution tasks.

Looking Ahead

Post-test analysis is the focus of future development. The team is working to import lidar data into the simulation environment so that researchers can view the datasets in 3D.

Partners

NASA’s Langley and Ames Research Centers

Benefits

- Ground-breaking: Paves the way for integrating autonomous systems into aviation

- Advanced: Offers the capability to view, adjust, and augment mission plans from a 3D perspective

- Reliable: Demonstrates transparency in decision making of autonomous systems

Applications

- Simulation and modeling

- Mission planning

PI: Jason Holland | 661-276-3035 | Jason.L.Holland@nasa.gov

HoloLens is a registered trademark of the Microsoft Corporation in the United States and/or other countries.

VR/AR for Flight Research

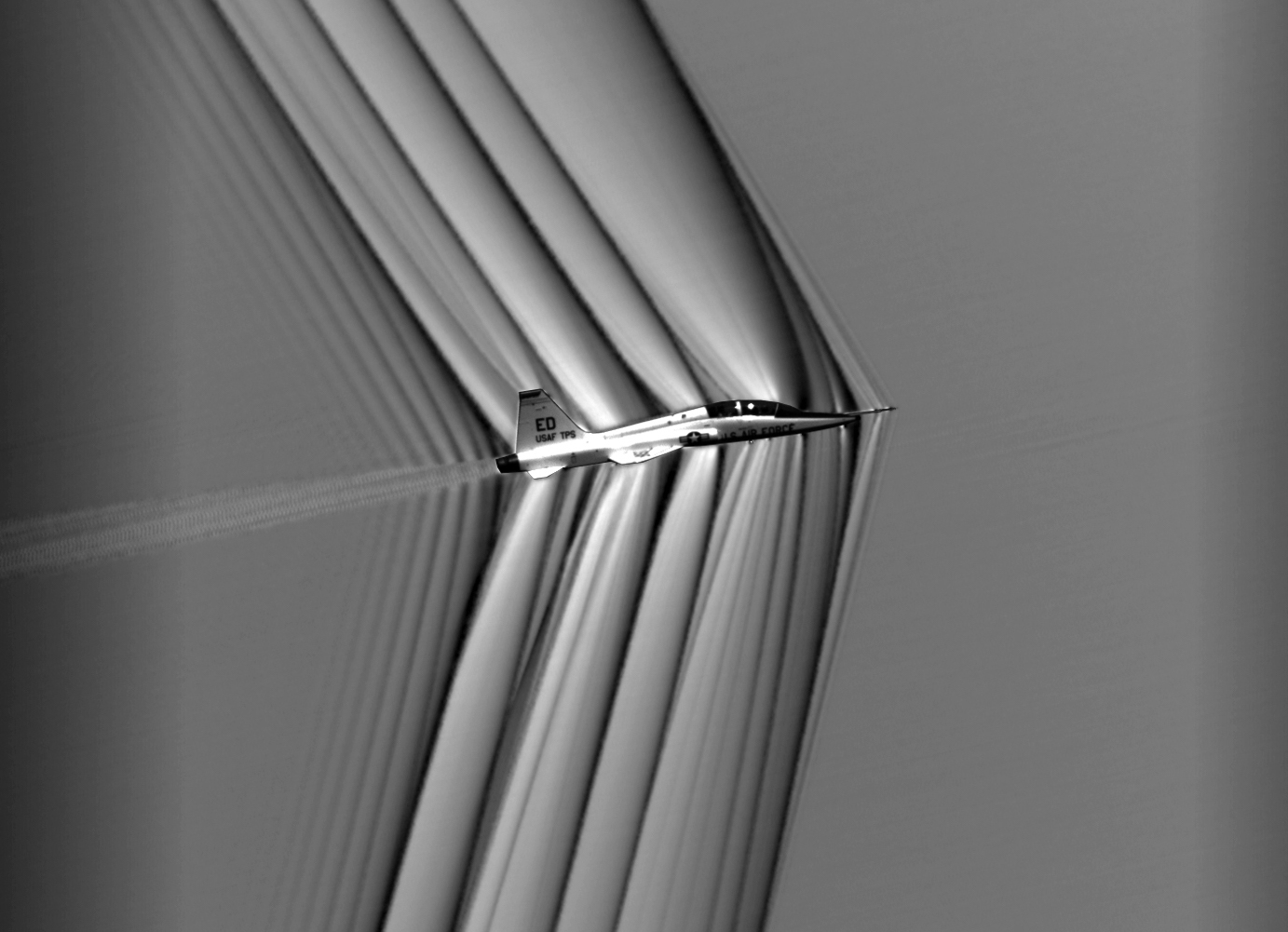

Armstrong researchers are investigating how virtual and augmented reality (VR and AR) systems can make it easier for pilots to receive and visualize critical information during flight testing. Current visualization aids involve 2D displays, requiring pilots to process multiple sources of information while performing flight tasks, such as approaching a runway at a specific speed, altitude, and engine setting. Because VR and AR systems excel at gathering, processing, and digesting large sets of data from multiple sources, they can be useful in providing pilots with intuitive flight information. A team is developing a head-up display system that augments reality with virtual objects to decrease pilot workload and improve test point execution during flight testing.

Work to Date

The team purchased hardware and completed laboratory tests simulating an AR environment in an existing simulator to better understand and compare head-down and head-up displays. While investigating mixed reality systems, researchers learned that most AR systems do not have a wide enough field of view; therefore, they developed a system that uses a VR headset with cameras that bring in a view of the outside world. The current prototype ports simulation position data into a VR headset, enabling a pilot to wear the VR headset but fly a simulation that includes AR graphics.

Looking Ahead

The team is continuing to investigate and refine hardware and simulation data integration. Next steps are to tackle the hurdles of integrating the system into an aircraft for testing.

Benefits

- Decreases pilot workload

- Improves test point navigation

- Enhances data quality

Applications

- Flight testing

- Commercial and general aviation

PIs: Aamod Samuel | 661-276-2155 | Aamod.Samuel

Paul Dees | 661-276-3433 | Paul.M.Dees@nasa.gov

Educating the Public

Armstrong personnel are seeking to increase the visibility of NASA’s aeronautics projects and attract students to science, technology, engineering, and math (STEM) fields. A strong contingent of student interns collaborated with Armstrong researchers and the Office of STEM Engagement to develop an augmented reality (AR) mobile application to educate the public about NASA’s X-planes and aviation research programs. NASA Aeronautics AR showcases NASA’s advancements and their far-reaching impact on the aviation industry.

When the user points a mobile phone’s camera at a flat surface, the free app digitally materializes 3D AR versions of the X-59 Quiet Supersonic Technology (QueSST), X-57 Maxwell all-electric experimental aircraft, and G-III Gulfstream jet aircraft. Users can pinch, zoom, and drag to interact with the models. Some of the model features are animated; for example, users can move the propellers on the X-57 aircraft. While viewing a 3D model, users can read summary information or follow a link to learn more online. The app is available in both Android and iOS versions.

Work to Date

Developed by four student interns working in succession beginning in spring 2018, the app was built with gaming, 3D modeling, and photo editing software. The students imported the actual models NASA engineers used for aircraft flight simulation into the gaming software for animation.

The Android version of this education and outreach tool was released in December 2018 and was developed using Unity® gaming software, C# programming language, and Blender® 3D model editing software. Released in July 2019, the iOS version was developed with Swift™ programming language, the Xcode® developer software, and Blender software.

Looking Ahead

The next step is to integrate more aircraft into the app.

Benefits

- Versatile: Increases visibility of NASA’s aeronautics research

- Fun: Generates excitement for STEM among students

PIs: Rebecca Flick | 661-276-3949 | Rebecca.M.Flick

David Tow | 661-276-3552 | David.Tow-1

Interns: Alex Passofaro, Univ. of Minnesota Duluth; Kendrick Morales, Univ. of Puerto Rico at Rio Piedras; Christopher Morales, Univ. of Puerto Rico at Arecibo; Daniel Williams, Antelope Valley College

Blender is a registered trademark of Blender Foundation. Swift and Xcode are trademarks of Apple Inc. Unity is a registered trademark of Unity Technologies.

Fiber Optic AR System

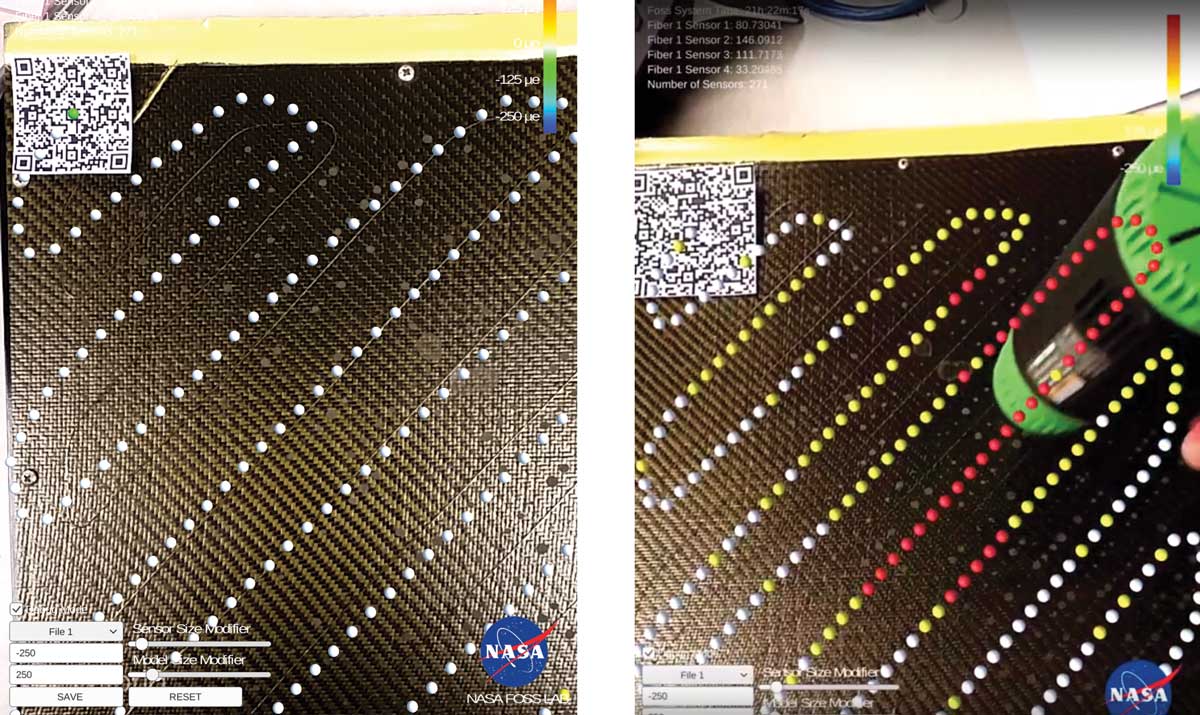

This research effort is working to visualize the individual measurements that Armstrong’s patented Fiber Optic Sensing System (FOSS) provides in a way that helps users understand how to view and analyze the data.

The Fiber Optic Augmented Reality System (FOARS) is a mobile data visualization application that receives FOSS data via a wireless connection and then creates a 3D augmented-reality model, superimposing it over a target image. Currently, users must review and analyze FOSS data through conventional tools such as Excel® spreadsheet software or LabVIEW® programs, which require on-site computers. In contrast, the stand-alone FOARS app can be downloaded onto any mobile device for easy, portable use. The goal is for users to be able to interact with the app without support from the FOSS team.

Work to Date

Work began in 2016. Resources from NASA’s Center Innovation Fund provided a license for 3D gaming software and time to complete the work. The app was completed at the end of 2017 and is ready to be used as an additional feature to FOSS.

The current version reads an Excel file containing the xyz point locations of the sensors, along with the user’s strain input data, to create the virtual model of the sensors (Figure 1). A QR code serves as location guidance for superimposing the model over the image of the actual sensors. The model changes color to display strain or temperature changes (Figure 2). In addition to real-time data visualization and analysis, the technology is useful for pretest assessments to ensure that fibers are in place and functioning correctly.
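The brief does not say how FOARS maps strain readings to colors. As a hypothetical illustration only, the Python sketch below assumes a simple linear blue (lowest reading) to red (highest reading) color ramp, scaled between the minimum and maximum values in the dataset. The names `SensorPoint`, `strain_to_rgb`, and `color_model` are invented for this example and are not part of FOARS, and the sketch takes the xyz and strain values as in-memory lists rather than reading the Excel file.

```python
from dataclasses import dataclass


@dataclass
class SensorPoint:
    """One sensing location along the fiber (hypothetical structure, not the FOARS format)."""
    x: float
    y: float
    z: float
    strain: float  # units (e.g., microstrain) are an assumption


def strain_to_rgb(value: float, vmin: float, vmax: float) -> tuple[int, int, int]:
    """Linearly map a reading onto an assumed blue (low) to red (high) color ramp."""
    if vmax == vmin:
        t = 0.5  # all readings equal: use the midpoint color, avoiding division by zero
    else:
        t = (value - vmin) / (vmax - vmin)
    t = min(max(t, 0.0), 1.0)  # clamp readings that fall outside [vmin, vmax]
    return (round(255 * t), 0, round(255 * (1 - t)))


def color_model(points: list[SensorPoint]) -> list[tuple[tuple[float, float, float], tuple[int, int, int]]]:
    """Pair each sensor's xyz position with a display color scaled to the dataset's range."""
    if not points:
        return []
    strains = [p.strain for p in points]
    vmin, vmax = min(strains), max(strains)
    return [((p.x, p.y, p.z), strain_to_rgb(p.strain, vmin, vmax)) for p in points]


if __name__ == "__main__":
    # Three made-up readings along a straight fiber segment.
    pts = [
        SensorPoint(0.0, 0.0, 0.0, -200.0),
        SensorPoint(0.1, 0.0, 0.0, 0.0),
        SensorPoint(0.2, 0.0, 0.0, 200.0),
    ]
    for xyz, rgb in color_model(pts):
        print(xyz, rgb)
```

Scaling to the dataset's own minimum and maximum keeps the full color range in use for any test; a fixed range (for example, a material's allowable strain limits) would be an equally reasonable design choice if colors need to mean the same thing across tests.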

Excel is a registered trademark of Microsoft Corporation.

LabVIEW is a registered trademark of National Instruments Corporation.

Benefits

- Real-time visualization: Enables users to quickly interpret FOSS data

- Automated: Creates a color-coded 3D augmented model when target image files are uploaded

- Portable: Works on a mobile device, without the need for a computer

Applications

FOARS can be used in all FOSS applications:

- Aerospace

- Energy

- Transportation

- Infrastructure

- Medical

PI: Shideh Naderi | 661-276-3106 | Shideh.Naderi@nasa.gov