Stefanie Tellex

Brown University

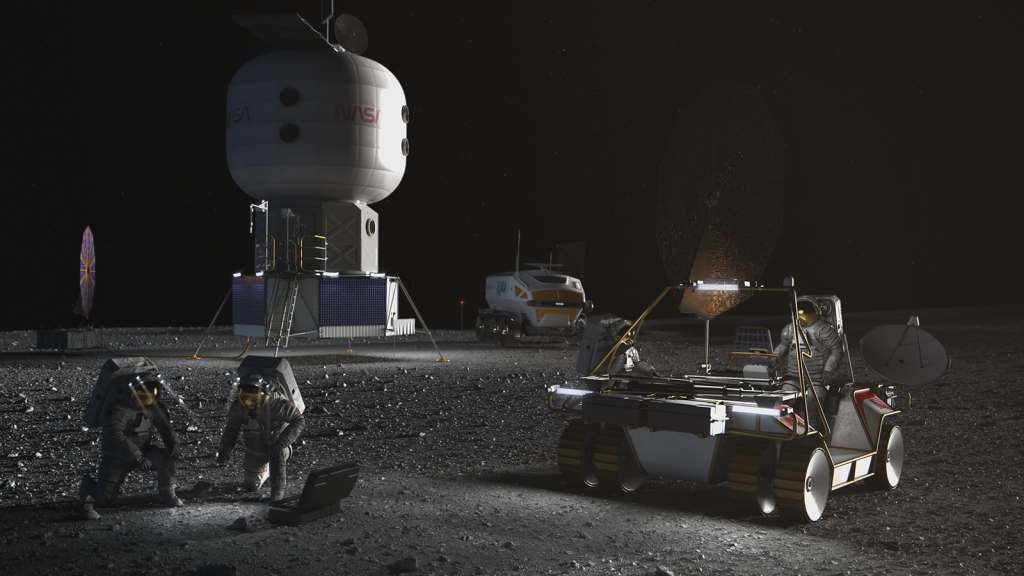

The aim of this proposal is to test the hypothesis that a system can infer human intentions faster and more accurately, and increase the number of robots a single astronaut can supervise, by inferring a person’s mental state from their language utterances and actively asking questions when it is confused. The approach is driven by a formal model for human-robot collaboration that enables a robot to make plans without complete information and to reason about what a person wants. This inference will enable a person to effectively communicate complex requirements and task constraints to the robot at very abstract levels (e.g., “Inspect the ISS”) and at very specific levels (e.g., “Move left six inches”), and will enable the robot to recover from failure by asking targeted questions (e.g., “Do you mean the Phillips screwdriver or the flat head?”). The end goal of this project is to achieve seamless human-robot cooperation on complex tasks, approaching the ease and accuracy of human-human collaboration. Humans communicating with other humans use language to express very abstract goals as well as very low-level concrete commands. This use of language enables high levels of autonomy while also supporting flexible and fluid corrections and replanning. Robots that can use fluid language at multiple levels of abstraction can flexibly respond to a person’s requests. The ultimate impact is a world where robots actively interpret a person’s instructions, asking questions when they are confused and asking for help when they encounter a problem.