A conversation with Brian Gore, Human Systems Integration Division at NASA’s Ames Research Center in Silicon Valley. Download the NASA TLX research tool on the App Store at https://itunes.apple.com/us/app/nasa-tlx/id1168110608?mt=8

Transcript

Matthew Buffington (Host):You are listening to NASA in Silicon Valley, episode 32. We have been doing this podcast for just over half a year now. So here is a short reminder of how it works. We do weekly, episodic podcasts that are simply conversations with a wide range of scientists, engineers, researchers, and all around cool people that work at NASA. Like this very episode, we start with a mini-intro and tell you which episode you are on. Apart from that, we also add audio versions of our written stories from NASA.gov. Basically, we take the stories that are posted online and have the authors record an audio version. In the future, we are looking to add other features like lectures, panel discussions, or even episodes where we take questions live. Feel free to let us know what you think about the podcast by using #NASASiliconValley on Twitter.

In this episode, we welcome Brian Gore from the Human Systems Integration Division at NASA Ames. We discuss his work studying and creating experiments to understand human performance and how humans handle workloads. We also go into the recent release of the NASA TLX app on iOS and how this “task load index” tool can help researchers collect and understand data. So, here is Brian Gore.

[Music]

Host:Tell us a little bit about yourself, Brian. How did you join NASA? How did you get to Silicon Valley?

Brian Gore:That’s a loaded question.

Host:Indeed, it is. For most, it is.

Brian Gore:So I had been working in human factors research at Battelle in Seattle. We were working on automated highway systems and intelligent transportation systems, and coming up with the legislation, or the rules to guide the legislation, that was coming down from the government, NHTSA [National Highway Traffic Safety Administration] and Federal Highway Administration, to mandate to the car manufacturers what should be allowed in a vehicle – so moving maps, intelligent transportation systems, head-up displays, Muth mirrors, all of the things that we see on cars today.

Host:Is your background in engineering, or in computer science and those systems?

Brian Gore:It is. I have an undergrad in human factors psychology. I did my undergrad studying risk appraisal behavior, and developing mathematical models of how people engage in risky behavior.

Host:Oh, really?

Brian Gore:Yeah.

Host:I’m sure insurance companies probably love that kind of stuff.

Brian Gore:Exactly, exactly. And then I went on from there to do my master’s of science at San Jose State University.

Host:Were you always local, or was this happenstance?

Brian Gore:As I said earlier, I was working at Battelle in Seattle, and then I moved down here to do my master’s degree at San Jose State [University]. I started working at NASA while I was doing that. I worked here for – gosh, I can’t remember how many years – maybe about ten years, and then I did my Ph.D. while I was working here.

I completed the Ph.D. – it took a little longer, because I was working full-time while doing it, but I got that completed. That was at the University of Toronto in the mechanical and industrial engineering department.

Host:I imagine growing up you were always very STEM-focused, or always wanting to do that kind of stuff.

Brian Gore:Growing up, academics was always part of it. It never was a question that you would just be continuing in education. As time went on, the employment guided the need for the additional degrees.

Host:As I understand it, you came in through an academic program to get into NASA.

Brian Gore:Exactly.

Host:What were you working on when you first landed over here?

Brian Gore:Oh, training.

Host:Really?

Brian Gore:Yeah. We were looking at air traffic controller training. So, would it be possible to come up with a training program to evaluate air traffic controllers who are training? Is there a criteria level that we can actually identify to measure them? It’s just like you or I get measured when we’re driving a car when you get a driver’s license. Somebody assessed you, and there were certain critical variables that you were assessed on.

Now, air traffic control is incredibly complicated. There wasn’t really anything documented on what does a trainer look for when they evaluate an air traffic controller. So that’s how we started working on that.

Host:Typically, when people think of NASA, they think of rockets, and they think of astronauts, and the first “A” in NASA – it’s “National Aeronautics.”

Brian Gore:Right. And then after that, it was all about flight deck. We were doing the flight deck.

I developed a human performance model of the next-generation in the air traffic management world called “free flight.” They were looking at this concept where they were going to reduce air traffic control of the flight deck, by providing displays and those kinds of pieces of information, so that the pilot could separate themselves from other aircraft.

Host:That’s one of the things I get a kick out of here at Ames, is they have the “FutureFlight Central.” If people can imagine, it’s an air traffic control tower with all of these TV screens around it, and it’s all built in a way to simulate different conditions at different airports. You can make it snow in LA, and you can do all these basic simulations. And so imagine all of that research, and then people can practice and train on how to be air traffic controllers.

Brian Gore:Exactly. Also, with the FutureFlight Central, as I understand it, one of the big things about that capability is that you’re able to actually move towers around. You could say, “I want to change the tower location,” and you can look and see what effect that will have on the air traffic controllers from the tower, because it’s a tower. And so if you move it on the other part of –

Host:If they’re renovating or building a new wing, and they need to temporarily or permanently move the tower, you can actually see what it’s going to look like.

Brian Gore:Exactly. You can test it out beforehand. It’s very smart to be able to do that kind of stuff.

Host:My nephew just joined the Air Force, and he’s doing air traffic control. I showed them FutureFlight Central, and I guess they regularly get trained and practice in very similar situations to learn how to be air traffic controllers.

Brian Gore:Yeah.

Host:So, when you start working on that stuff, how does that roll into what you’re doing now?

Brian Gore:The human performance modeling work, a big part of that is using these computational models. They’re computational representations of a human, so anthropometrically there’s a figure. And what we tried to do is we were trying to drop all of the cognition into the brain, into the head, of that anthropometric character.

We were trying to put in models of visual attention, memory – short-term, long-term, working memory, short-term working memory, all of these different things – as a function of time. As somebody does a set of tasks, if they get interrupted, they might forget this particular task.

Host:Yeah. You get distracted, and you go to something else.

Brian Gore:Exactly. And so what we were trying to do is represent a human computationally that possesses all of those kinds of basic human…

Host:Oh, that’s so funny, because we see all these… We talked to the super computing folks, and all these simulations and models of airplane wings or tilt rotors. But it’s like, “No, you’re working on simulations of the human.”

Brian Gore:Yeah. It’s how the human, if we drop that whole package of things in – like the perception, attention, memory, and the anthropometry, we drop that inside of a cockpit. Can they do it? Can they actually drive or fly a vehicle? Can they drive a car? Can they operate a remote control station? Could they remain on task for a certain amount of time? Do they have perceptual limits? Do we start to lose focus after eight hours on a task?

Maybe we could use our human performance models. And they haven’t been used in this way up until now, but if we could toggle that timeline from left to right, and have us move from time zero through eight hours, and say, “Oh, this is now your maximum performance…”

Host:“Here’s your optimum.”

Brian Gore:Exactly. And can you do these sets of tasks now, or are you going to make mistakes?

Host:I imagine if you’re running those models, then you’re not having to do a lot of human study with a control group and all these different things. You can test it out before you even get to that.

Brian Gore:Exactly. That’s really the ideal time to use a human performance model. You do it before the empirical research, but it’s based off of empirical research, because there are basic, fundamental human performance models that are embedded inside the human, the computational human.

So one of those was workload. That workload piece then brought me down into: Can we predict workload during long-duration missions?

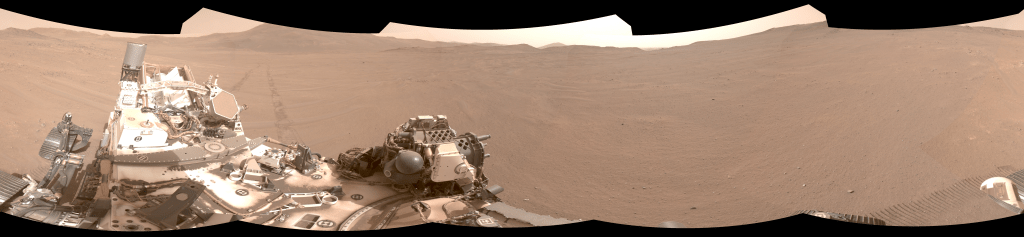

Host:This is like on a journey to Mars?

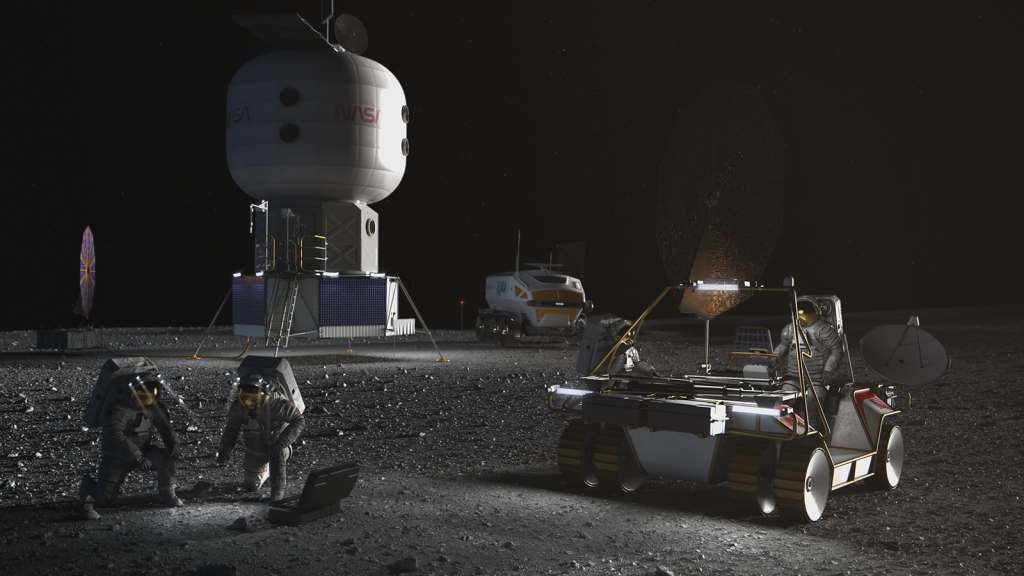

Brian Gore:Bingo. That’s where we’re going with it. I worked a number of years out of the space program, funded by the Human Research Program, in a portfolio called “Space Human Factors Engineering.” After having done that for about three years, working on trying to come up with more of a conceptual model of what parameters need to be considered for a long-duration mission workload as opposed to the short kinds of workload evaluations that we’ve done in the past.

So we were looking at extending that over the eight-hour day, maybe breaking a nine-month mission into chunks and saying, “In the first third of that mission, you’re going to be operating at probably the maximum capability. And then there’s a little period of a doldrum kind of thing in the middle.” And this is rooted in…

Again, there was some research that was saying there is this broken-in-three-phases kind of representation to how humans behave when they’re on a long-duration mission. That happens on a space station. It happens when you’re on a boat or going in a submarine.

Host:Yeah. Anytime when you’re isolated or…

Brian Gore:Exactly, exactly. So that led into trying to develop this workload stuff. I think I ended up needing to generate calls for research before this, and we went and put that out to the academic community. And then we brought it back into NASA, and I was asked to be the deputy for the Space Human Factors Engineering portfolio. And then that transitioned through a number of different programmatic changes, and now it is the Human Factors and Behavioral Performance element.

Host:I know you’re working on this application, the TLX app, which relates to workload. I’m sure that was the natural pivot into – you’re studying workload – “How does this affect humans?” Now here’s an application or a thing to help guide that process. Touch on what exactly is TLX. What does that stand for? What is this app? What does it do?

Brian Gore:NASA TLX stands for the “NASA Task Load Index.” The Task Load Index was developed by Sandy Hart in the mid-to-late 1980s, probably mid-1980s – Hart and Staveland, Lowell Staveland. They created a measure, a subjective measure, of people’s perceived workload.

There had been a number of different scales out there, like the Cooper-Harper – George Cooper from here at NASA Ames. He was a test pilot. He had developed sort of like a manual control, a manual handling, because he was a test pilot – so, “Could I control this aircraft, or was it way too hard to control?”

Sandy built off of that to create a subjective workload scale that said, “Am I going to exceed my perceived cognitive abilities to do these particular tasks?” So there were six dimensions, essentially: mental, physical, temporal, effort, performance and frustration level.

She would assess along those dimensions to see if somebody was going to be in an overload situation. In addition to that, back in the time that she created this, there was something called a “card sorting technique.” The card sort would be you would flip a card up, and a subject would go, “Oh, it’s this one, and it’s not that one,” whatever this or that was. It could be temporal or performance.

What Sandy was saying was, “You’re going to rate and you’re going to weight your estimate on what this particular set of tasks,” what modality it was really channeling. You would do these card sorts to weight it, to actually come up with an overall workload measure at the end of your assessment on those six dimensions.
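As an illustration of the rate-and-weight step described here, the following is a minimal Python sketch, not the app's actual code. It assumes the commonly described NASA-TLX procedure: each of the six dimensions is rated on a 0 to 100 scale, every one of the 15 possible pairs of dimensions is shown once, and a dimension's weight is the number of times it was picked. The function names and data layout are hypothetical.

```python
from itertools import combinations

# The six dimensions, in the order named in the conversation.
DIMENSIONS = ("mental", "physical", "temporal",
              "effort", "performance", "frustration")

# Every unordered pair of dimensions: 15 comparisons in total.
PAIRS = [frozenset(p) for p in combinations(DIMENSIONS, 2)]


def weights_from_pairs(choices):
    """Tally how often each dimension was picked in the pairwise card sort.

    `choices` maps a (dimension_a, dimension_b) tuple to the dimension the
    subject judged more important for the task (hypothetical layout).
    """
    tally = {d: 0 for d in DIMENSIONS}
    seen = set()
    for pair, winner in choices.items():
        key = frozenset(pair)
        if key not in PAIRS or key in seen or winner not in key:
            raise ValueError(f"invalid or repeated comparison: {pair!r}")
        seen.add(key)
        tally[winner] += 1
    if len(seen) != len(PAIRS):
        raise ValueError("all 15 pairwise comparisons are required")
    return tally


def weighted_tlx(ratings, weights):
    """Overall workload: weight-averaged rating across the six dimensions."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("weights must not all be zero")
    return sum(ratings[d] * weights[d] for d in DIMENSIONS) / total


if __name__ == "__main__":
    # Made-up example: the subject always picks the earlier-listed dimension.
    choices = {pair: pair[0] for pair in combinations(DIMENSIONS, 2)}
    ratings = {"mental": 80, "physical": 20, "temporal": 60,
               "effort": 70, "performance": 40, "frustration": 30}
    print(weighted_tlx(ratings, weights_from_pairs(choices)))
```

Because the weights always sum to 15, a dimension the subject never picks drops out of the overall score entirely, while one picked every time counts five times over.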

When she created this, there was a paper and pencil test. It was all done with paper and pencil. There was a little booklet, and you’d go through this booklet. And then you’d have to take all of this data that you collect on paper and pencil, and enter it into a computer, and then you would be able to generate some prediction, some, “This is what the workload was during this condition.”

Host:It’s basically to figure out how productive you are?

Brian Gore:Yeah. It’s a measure, aside from if there’s an experiment that you’re doing, you’re flying an airplane, and you’re measuring the person’s workload as they’re doing a checklist. There’s a little secondary task, like a little light pops up. And you’ve told the subject, “Just note that whenever this light goes, just push a button.” That’s a kind of objective measure of distraction –

Host:Either you hit it or you didn’t.

Brian Gore:Exactly. If they miss it, they miss it. And that means that they’re overloaded. That’s often the way the objective measures were being collected. Well, subjectively, there was always a different piece that you were trying to get at through this objective measure, because oftentimes that kind of subjective impression of workload is building up inside the person. If they start to crack, if they start to fail on tasks, it could be because of this build up of workload.

Host:I notice as you talk about it, you keep saying the “perceived” or the “perception” of it. So this is all about how that subject, how the person, feels. It’s not necessarily what their actual workload is, but it’s their perceived workload.

Brian Gore:Right, because some people can do very well under extremely task-loaded environments. And sometimes that same person will fail in environments that have no task load. So, how do we explain that? That brings into it –

Host:How their perception of that situation…?

Brian Gore:Yeah. And supervisory control theory. There’s a lot of theoretical stuff behind how people operate in these different environments.

Host:That’s so funny. Especially doing this kind of research, the ground level of understanding space exploration, long missions, you have to understand the fundamentals of human psychology, of how can humans cooped up in a capsule for nine months get through this. And not only that, you’re going to ask them to do science experiments on top of it.

Brian Gore:Exactly, exactly. There was a little bit of a push from the community. And I noticed in our division we had a lot of aviation studies being done. We had space studies being done, people in lateral vibration, doing a whole bunch of empirical studies where people are shaking in a seat, to develop a countermeasure for this vibration situation.

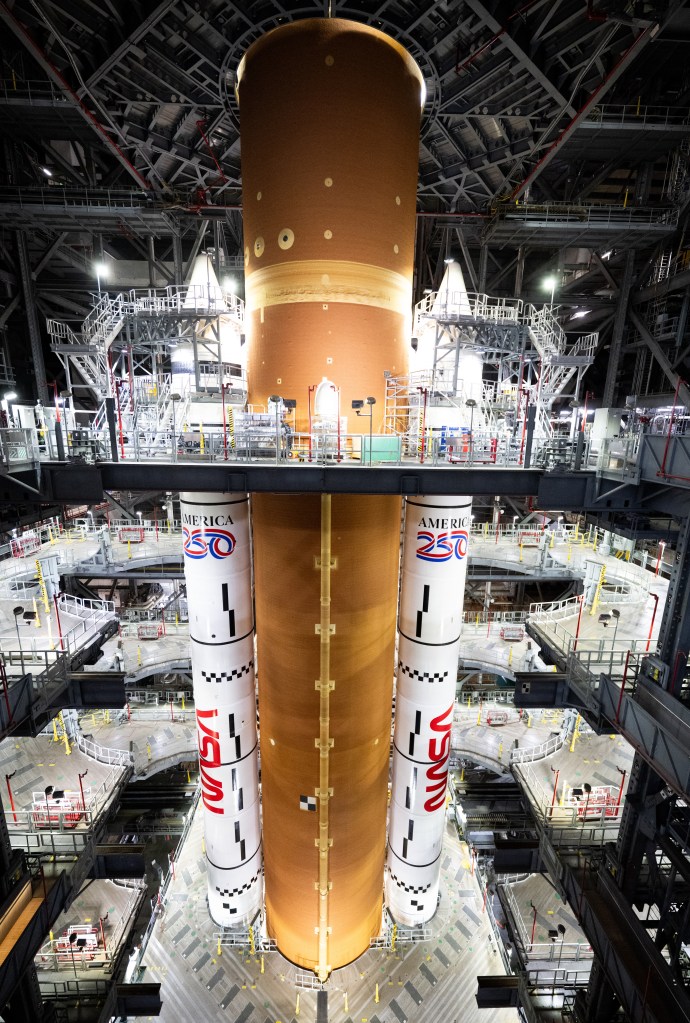

There is a tremendous amount of vibration on the shuttle launch. It was both horizontal, and vertical, and lateral kinds of vibration. He was developing a countermeasure, and that countermeasure was actually in the display. So as the vibration was happening, the display was also vibrating, and the people could actually see it.

Host:You could actually see it?

Brian Gore:Yeah. That’s what he was working on.

Host:It’s like shock absorbers for the display.

Brian Gore:Exactly. This is all part of the space program that we’re doing in our division. If we could actually have somebody doing a workload evaluation, like the subjective workload scale, if you could do it on an iPad, or if you could do it on an iPhone, it would just make it so usable and useful.

Host:I would imagine back in the day, it was just pen and paper, or pencil and paper, then probably like a Scantron or something like that. But now you have spreadsheets, you know, databases.

Brian Gore:That’s exactly what this allows you to do, because once you put in your subject ID and all that kind of stuff – as an experimenter, you put in the subject ID, and then you give it to the pilot. The pilot goes in, and starts flying the aircraft.

Then he or she would be able to evaluate the workload. It could pop up on the iPhone – it’s just a touch screen – and they tap their finger on a timeline for those six dimensions. It actually collects everything. And then you can actually dump it out and shoot it out to a CSV file, and then import it into Excel and do all the processing. It’s really amazing.
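For readers who would rather script that processing step than use a spreadsheet, here is a minimal Python sketch. The app's real export layout is not described in this conversation, so the column names (`subject_id`, `condition`, and one column per dimension) and the sample rows are assumptions made purely for illustration. The sketch computes an unweighted score, the plain mean of the six ratings, for each row and then averages per subject.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical column names; the app's actual CSV headers may differ.
DIMENSIONS = ("mental", "physical", "temporal",
              "effort", "performance", "frustration")


def raw_tlx_by_subject(csv_text):
    """Return {subject_id: mean unweighted workload across that subject's rows}."""
    per_subject = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        ratings = [float(row[d]) for d in DIMENSIONS]
        per_subject[row["subject_id"]].append(mean(ratings))
    return {subject: mean(scores) for subject, scores in per_subject.items()}


# Made-up sample data standing in for an exported file.
SAMPLE = """subject_id,condition,mental,physical,temporal,effort,performance,frustration
S01,A,60,20,40,50,30,40
S01,B,80,40,60,70,50,60
S02,A,10,10,10,10,10,10
"""

if __name__ == "__main__":
    for subject, score in raw_tlx_by_subject(SAMPLE).items():
        print(subject, score)
```

To run it against a real export, read the file's text with `open(path, newline="").read()` and adjust `DIMENSIONS` and the ID column to match the headers actually present.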

Host:It ends up, overall, making it more user-friendly. For this app that you’ve created to replace this pen and paper or even just this database process, was there a researcher involved in it that was crafting what that is going to be? And researchers can use that app on their subjects to get information?

Brian Gore:Inside of our division we’ve got a group of human computer interaction people, individuals who have expertise in human computer interaction. Now, they do a very large amount of development of app systems. And there was a team of researchers who actually helped out on the app development.

We went back in and contacted Sandy, because I can write to her and talk to her. We’re friends. So Sandy, she wrote back and said, “This is what I was trying to do when I developed the scale.” We made the app consistent with the look and feel from what she was trying to capture back in the 1980s. Everything is done to mimic how it would have felt when you were doing it back then.

Host:For people who are listening, you could literally just type in “NASA TLX” in the [App Store], and you can find this app. But I’m also going to assume that the average person would probably download it, look at it, and be like, “What am I doing here?” What is the target audience for this app? Who are you really looking at to take advantage of this?

Brian Gore:The target audience is really the research community. It’s really made for the researcher, or it could be somebody who’s just interested in assessing their own level of workload, because it’s subjective. It’s a subjective measure.

Host:It can be adapted and used…

Brian Gore:Yeah. The one thing that we did spend a lot of effort doing and making sure was the security of the data, because we did not want people to be able to capture this data if they didn’t have rights to it. And so that was a large part of our HCI group’s effort.

They spent a lot of time identifying how we could make sure that the data would be de-identified. If you send it over the airwaves, essentially – through the Bluetooth or something like that – to dump out the CSV file, how best can we secure that data? It gets a little dicey when you start sending stuff over Bluetooth, but we’ve done the best that we can. It is all de-identified, so you can’t really tell. If you have the data file, it wouldn’t really mean anything to you – unless you’re the researcher, and then it would mean something.

It’s really targeted for the research community. The researcher would just go to the App Store, download it. It would then be resident on their iPhone or iPad. Then, they would be able to assess the workload, send it out to a CSV file, and do an analysis on a desktop computer.

Host:If I’m a researcher, if I’m working on some usability study, or something like that – they could basically use this app, take it, customize it. I guess they then use a version of that app to hand to their subject matter people or…?

Brian Gore:It’s all in the app. Everything is in the app also, so that when they download it, there is an instruction set that’s embedded in it. It tells you what you’re supposed to do with a card sort. Or maybe you don’t even want to do the card sort; you just want to get the person’s subjective impression. You don’t even care about the weighted average. That’s perfectly fine. You can do that.

Host:It’s built so that people can customize it to what their research needs are?

Brian Gore:Exactly, yeah. It’s quite a neat tool.

Host:What’s an example of something that you guys are using this in now here at NASA?

Brian Gore:Right now at Ames, we’ve got… Oh, I didn’t bring my data with me, but we’ve got some really good data over the past two weeks, so since it was released. There’s been about 11,000 hits on it, and about 208 active users of the TLX app. For two weeks, that’s amazing that there’s a research community out there that’s –

Host:That’s tapping into –

Brian Gore:Yeah. It’s really amazing. Actually, here’s a quote from one of our test users last year. She was doing a study. It was an aviation study. She said, “The TLX app, the beta version that you guys are developing, is so easy to use, I don’t know why I wouldn’t collect workload on every single study that I do.”

Host:That’s awesome.

Brian Gore:That’s the kind of kudos that the development team really likes to hear. And that’s really what Sandy wanted. She wanted people to use it. And over the past 30 years, this is still the gold standard.

Host:It’s fantastic how it relates back to NASA, of not only doing space exploration for the sake of exploring the universe and understanding, but it’s a matter of, to do science, and to figure out stuff, and better understand the universe, our solar system and beyond – but then working especially at Ames as a research center, helping that research community, making sure all of our tools or results aren’t living in a silo, but sharing that with the research communities so we can all help learn together.

But developing a tool like this, that can help other folks at universities, or other agencies, or other companies even, for that matter. They can use this to better understand how their humans, how their people, how their employees, do workload stuff. It helps everybody.

So, what do you see as the future for this app? What are the next stages? Are you guys looking at different platforms? Are you planning on building different versions?

Brian Gore:Yes. Interestingly, in this first version, we’ve got a couple of capabilities roughed in. One of them is a server capability. A second one is a barcode capability. Those two things are going to be incorporated in the next release of the TLX.

It’s really to assist in when you start up your study, that you could actually take a picture of a barcode, and it will automatically populate your subject ID number and all of the conditions that you’re going to be running the subject under. Then, the server capability will allow you to send your data – so instead of it going to your computer, it will go to a centralized server for secure storage.

We were doing that because a number of years ago, maybe four years ago, I was contacted by a medical doctor who was doing his Ph.D. on the side as he was a resident. He was an ER doctor. He was saying, “I get these pushes, and I want to measure and assess my workload during these pushes. Do you have that kind of capability?” Unfortunately at the time, we didn’t. But I said, “This is a great idea. We’ve got to do this, because it’s something that you need. It’s something that obviously all of our researchers need. Let’s start working on this.”

Host:So for anybody who’s listening, if you are a researcher, a scientist looking to use this, they can just hop on over to the [App] Store, search for “NASA TLX,” and go ahead and download it there.

But also, for anybody who’s listening who has any questions for Brian about how you came up with the app or any other additional information, we are on Twitter @NASAAmes. We are using the hashtag #NASASiliconValley. If any questions come in, we’ll loop you on in and get back to people.

Thanks for coming on. This has been awesome.

Brian Gore:Excellent. Well, thank you very much for having me. I hope people enjoy the app, and give us some comments about it too. We definitely appreciate that. We would also try to modify it for future releases.

Host:Thanks so much.

Brian Gore:Excellent. Thank you.

[End]