Challenges for Future Exploration

May 7-9, 2019

McMurtry Auditorium, Rice University, Houston, TX

The widespread use and increasing complexity of mission-critical and safety-critical systems at NASA and in the aerospace industry require advanced techniques that address these systems’ specification, design, verification, validation, and certification requirements. The NASA Formal Methods Symposium (NFM) is a forum to foster collaboration between theoreticians and practitioners from NASA, academia, and industry. NFM’s goals are to identify challenges and to provide solutions for achieving assurance for such critical systems.

New developments and emerging applications like autonomous software for uncrewed deep space human habitats, caretaker robotics, Unmanned Aerial Systems (UAS), UAS Traffic Management (UTM), and the need for system-wide fault detection, diagnosis, and prognostics provide new challenges for system specification, development, and verification approaches. The focus of these symposiums are on formal techniques and other approaches for software assurance, including their theory, current capabilities and limitations, as well as their potential application to aerospace, robotics, and other NASA-relevant safety-critical systems during all stages of the software life-cycle.

The NASA Formal Methods Symposium is an annual event organized by the NASA Formal Methods (NFM) Steering Committee, comprised of researchers spanning several NASA centers. NFM 2019 is being co-organized by Rice University and NASA- Johnson Space Center in Houston, TX.

The meeting will be comprised of invited talks by leading researchers and practitioners, a panel discussion on the challenges of future exploration that formal methods could address , and more specialized talks based on contributed papers.

- Formal verification, including theorem proving, model checking, and static analysis

- Advances in automated theorem proving including SAT and SMT solving

- Use of formal methods in software and system testing

- Run-time verification

- Techniques and algorithms for scaling formal methods, such as abstraction and symbolic methods, compositional techniques, as well as parallel and/or distributed techniques

- Code generation from formally verified models

- Safety cases and system safety

- Formal approaches to fault tolerance

- Theoretical advances and empirical evaluations of formal methods techniques for safety-critical systems, including hybrid and embedded systems

- Formal methods in systems engineering and model-based development

- Correct-by-design controller synthesis

- Formal assurance methods to handle adaptive systems

Time-Limited Conference Proceedings Access available here (Updated July 22, 2019)

Topics of Interest

We encourage submissions on cross-cutting approaches that bring together formal methods and techniques from other domains such as probabilistic reasoning, machine learning, control theory, robotics, and quantum computing among others.

NFM 2019 Accepted Papers

| Optimising a Verified SAT Solver Authors: Mathias Fleury Abstract: In previous work, I verified a SAT solver with dedicated imperative data structures, including the two-watched-literal scheme. In this paper, I extend this formalization with four additional optimizations. The approach is still based on refining an abstract calculus to a deterministic program. In turn, an imperative version is synthesized from it, which is then exported to Standard ML. The first optimization is the extension with blocking literals. Then, the memory management is improved in order to implement the heuristics necessary to implement search restart and forget, which were subsequently implemented. This required changes to the abstract calculus. Finally, the solver uses machine words until they overflow before switching to unbounded integers. Performance has improved and is now closer to MiniSAT. |

| Extracting and optimizing formally verified code for Systems programming Authors: Eleftherios Ioannidis, Frans Kaashoek and Nickolai Zeldovich Abstract: MCQC is a compiler for extracting verified systems programs to low-level assembly, with no runtime or garbage collection requirements and an emphasis on performance. MCQC targets a subset of the Gallina functional language used in the Coq proof assistant. MCQC translates pure and recursive functions into C++17, while compiling monadic side-effects to imperative system calls. With a series of memory and performance optimizations, MCQC combines verifiability with memory and runtime performance. By handling effectful and pure functions separately MCQC can generate executable code directly from Gallina, reducing the effort of implementing and executing verified systems. |

| Learning-based Testing of an Industrial Measurement Device Authors: Bernhard Aichernig, Christian Burghard and Robert Korosec. Abstract: Active automata learning algorithms have gained increasing importance in the field of model-based system verification. For some classes of systems – especially deterministic systems, like Mealy machines, a variety of learning algorithm implementations is readily available. In this paper, we apply this technique to a measurement device from the automotive industry in order to systematically test its behaviour. However, our system under learning shows sparse non-deterministic behaviour, preventing the direct application of the available learning tools. We propose an implementation of the active automata learning framework which masks this non-determinism. We repeat a previous model-based testing experiment with faulty devices and show that we can detect all injected faults. Most importantly, our technique was also able to find unknown bugs. |

| Clausal Proofs of Mutilated Chessboards Authors: Marijn Heule, Benjamin Kiesl and Armin Biere. Abstract: Mutilated chessboard problems have been called a “tough nut to crack” for automated reasoning. They are, for instance, hard for resolution, resulting in exponential runtime of current SAT solvers. Although there exists a well-known short argument for solving mutilated chessboard problems, this argument is based on an abstraction that is challenging to discover by automated-reasoning techniques. In this paper, we present another short argument that is much easier to compute and that can be expressed within the recent (clausal) PR proof system for propositional logic. We construct short clausal proofs of mutilated chessboard problems using this new argument and validate them using a formally-verified proof checker. |

| Towards a Two-layer Framework for Verifying Autonomous Vehicles Authors: Rong Gu, Raluca Marinescu, Cristina Seceleanu and Kristina Lundqvist. Abstract: Autonomous vehicles rely heavily on intelligent algorithms for path planning and collision avoidance, and their functionality and dependability could be ensured through formal verification. To facilitate the verification, it is beneficial to decouple the static high-level planning from the dynamic functions like collision avoidance. In this paper, we propose a conceptual two-layer framework for verifying autonomous vehicles, which consists of a static layer and a dynamic layer. We focus concretely on modeling and verifying the dynamic layer using hybrid automata and UPPAAL SMC, where a continuous movement of the vehicle as well as collision avoidance via a dipole flow field algorithm are considered. This framework achieves decoupling by separating the verification of the vehicle’s autonomous path planning from that of the vehicle autonomous operation in a continuous dynamic environment. To simplify the modeling process, we propose a pattern-based design method, where patterns are expressed as hybrid automata. We demonstrate the applicability of the dynamic layer of our framework on an industrial prototype of an autonomous wheel loader. |

| Transaction Protocol Verification with Labeled Synchronization Logic Authors: Mohsen Lesani. Abstract: Synchronization algorithms that provide the transaction interface are intricate. We present an algorithm description language that explicitly captures the type of the used synchronization objects and associates labels to method calls to explicitly capture their intra-thread order. We use the language to capture architecture independent representations of transactional memory algorithms. We present a novel logic that enables reasoning about synchronization algorithms that are described in the language. The logic quantifies over program labels and provides specific predicates and intuitive inference rules to reason about the inter-thread execution and linearization orders of labeled method calls. In particular, the logic assertions can directly capture orders that are fundamental to the correctness of transactions. We present a denotational semantics for the language and prove the soundness of the logic. We have formalized the logic in the PVS proof assistant and mechanically constructed the challenging correctness proof of the TL2 transactional memory algorithm. |

| Using Binary Analysis Frameworks: The Case for BAP and angr Authors: Chris Casinghino, Michael Dixon, Jt Paasch, Cody Roux, John Altidor and Dustin Jamner. Abstract: Binary analysis frameworks are critical tools for analyzing software and assessing its security. How easy is it for a non-expert to use these tools? This paper compares two popular open-source binary analysis libraries: BAP and angr, which were used by two of the top three teams at the DARPA Cyber Grand Challenge. We describe a number of experiments to evaluate the capabilities of the two tools. We have implemented a value-set analysis and a call graph comparison algorithm with each tool, and report on their performance, usability, and extensibility for real-world applications. |

| Model Checking of Verilog RTL using IC3 with Syntax-guided Abstraction Authors: Aman Goel and Karem Sakallah. Abstract: While bit-level IC3-based algorithms for hardware model checking represent a major advance over prior approaches, their reliance on propositional clause learning poses scalability issues for RTL designs with wide datapaths and complex word-level operations. In this paper we present a novel technique that combines IC3 with syntax-guided abstraction (SA) to allow scalable word-level model checking using SMT solvers. SA defines the abstraction implicitly from the syntax of the input problem, has high granularity and an abstract state-space size completely independent of the bit widths of the design’s registers. We show how to integrate IC3 with SA efficiently, prove the correctness of our procedure, and demonstrate its effectiveness on a suite of open-source and industrial Verilog RTL designs. Additionally, SA aligns easily with data abstraction using uninterpreted functions. We demonstrate how IC3+SA with data abstraction allows reasoning that is completely independent of the bit-width of variables, and allows scalability irrespective of the state-space size or complexity of operations. |

| Design and Runtime verification side-by-side in eTrice Authors: Sudeep Kanav, Levi Lucio, Christian Hilden and Thomas Schuetz. Abstract: eTrice is a mature commercial model-based software engineering tool, based on the ROOM methodology. It is currently used in the industry for the development of high-end power tools. eTrice natively incorporates mechanisms for runtime verification, providing an excellent platform for the application of model-checking in practice. At the request of the developers of eTrice, we have incorporated model checking in their toolchain, by partly reusing the existing runtime architecture. We report on the implementation of the tool, experiments that we conducted, and lessons learned regarding the synergies between the two verification techniques. |

| Generation of Signals under Temporal Constraints for CPS Testing Authors: Benoit Barbot, Nicolas Basset and Thao Dang. Abstract: This work is concerned with validation of cyber-physical systems (CPS) via simulation based on sampling of the input signal space. Such a space is infinite and in general too difficult to treat symbolically meaning that the only reasonable option is to sample a finite subset of it and simulate the corresponding system behaviours. It is thus of great interest to choose a finite sample so that it best “covers” the whole space of input signals. We use timed automata to model temporal constraints, in order to avoid spurious bugs coming from unrealistic inputs and this can also reduce the input space to explore. We propose a method for low discrepancy generation of signals with temporal constraints recognised by timed automata. The discrepancy notion reflects how uniform the input signal space is sampled and additionally allows deriving validation and performance guarantees. We also show how this notion can be used to measure the discrepancy of a given set of input signals. We describe a prototype tool chain and demonstrate the proposed methods on a Kinetic Battery Model (KiBaM) and a Sigma Delta modulator. |

| Symbolic Model Checking of Weighted PCTL using Dependency Graphs Authors: Anders Mariegaard, Mathias Claus Jensen and Kim Guldstrand Larsen. Abstract: We present a global and local algorithm for model checking a weighted variant of PCTL with upper-bound weight constraints, on probabilistic weighted Kripke structures where the weights are vectors with non-zero magnitude. Both algorithms under- and over approximate a fixed-point over a symbolic dependency graph, until sufficient evidence to prove or disprove the given formula is found. Fixed-point computations are carried out in the domain of (multidimensional) probabilistic step functions, encoded as interval decision diagrams. The global algorithm works similarly to classic value iteration for PCTL in that it evaluates all nodes of the dependency graph iteratively, while the local algorithm performs a search-like evaluation of the given dependency graph in an attempt to find enough evidence locally to prove/disprove a given formula, without having to evaluate all nodes. Both algorithms are evaluated on several experiments and we show that the local algorithm generally outperforms the global algorithm. |

| Using Standard Typing Algorithms Incrementally Authors: Matteo Busi, Pierpaolo Degano and Letterio Galletta. Abstract: Modern languages are equipped with static type checking/inference that helps programmers to keep a clean programming style and to reduce errors. However, the ever-growing size of programs and their continuous evolution require building fast and efficient analysers. A promising solution is incrementality, aiming at only re-typing the diffs, i.e. those parts of the program that change or are inserted, rather than the entire codebase. We propose an algorithmic schema that drives an incremental usage of existing, standard typing algorithms with no changes. Ours is a grey-box approach: just the shape of the input, that of the results and some domain-specific knowledge are needed to instantiate our schema. Here, we present the foundations of our approach and the conditions for its correctmess. We show it at work to derive two different incremental typing algorithms. The first type checks an imperative language to detect information flow and non-interference, and the second infers types for a functional language. We assessed our proposal on a prototypical implementation of an incremental type checker. Our experiments show that using the type checker incrementally is (almost) always rewarding. |

| Local Reasoning for Paramaterized First Order Protocols Authors: Rylo Ashmore, Arie Gurfinkel and Richard Trefler. Abstract: First Order Logic (FOL) is a powerful reasoning tool for program verification. Recent work on Ivy shows that FOL is well suited for verification of parameterized distributed systems. However, specifying many natural objects, such as a ring topology, in FOL is unexpectedly inconvenient. We present a framework based on FOL for specifying distributed multi-process protocols in a process-local manner together with an implicit network topology. In the specification framework, we provide an analysis technique to reason about the protocols locally, in a process-modular way. Our goal is to mirror the way designers often describe and reason about protocols. By hiding the topology behind the FOL structure, we simplify the modelling, but complicate the reasoning. To deal with that, we use an oracle for the topology to develop a sound and relatively complete proof rule that reduces reasoning about the implicit topology back to pure FOL. This completely avoids the need to axiomatize the topology. Using the rule, we establish a property that reduces verification to a fixed number of processes bounded by the size of local neighbourhoods. We show how to use the framework on two examples. |

| Formal Methods Assisted Training of Safe Reinforcement Learning Agents Authors: Anitha Murugesan, Mohammad Moghadamfalahi and Arunabh Chattopadhyay. Abstract: Reinforcement learning (RL) is emerging as a powerful paradigm to automatically develop autonomous components or agents for sophisticated safety-critical systems such as self-driving cars, unmanned aircraft, etc. RL-based agents learn by exploring possible actions and receiving feedback from the environment over time. However, the approaches fundamentally lack the notion of rigorous and thorough safety considerations. Also, their behaviors are completely based on the training situations that are far limited in time and geographical horizons in comparison to their actual operating environment. Hence, guaranteeing their safe operation — an imperative in the safety-critical domain — remains a challenge. To that end, we present a safety-driven training approach in which RL is augmented with (a) advanced simulators to provide a rich set of training situations, and (b) formal verification tool (SMT-solvers) to rigorously ensure safe properties are upheld during training. This approach guides the RL agent to learn behaviors within the pre-defined zone of safety, over a wide range of scenarios. In fact, it also helps assess the adequacy of pre-defined safety properties and training situations. In this paper, we explain this approach and illustrate it using a case example. |

| Traffic Management for Urban Air Mobility Applications Authors: Sudarshanan Bharadwaj, Steven Carr, Natasha Neogi, Hasan Poonawala, Alejandro Barberia Chueca and Ufuk Topcu. Abstract: Urban Air Mobility (UAM) is a term for an on-demand and automated passenger or cargo-carrying air transportation service within an urban area. Traffic management in UAM systems is challenging as it requires dealing with a dense network of vehicles inhabiting a complex, dynamic environment, with each agent possessing independent and possibly competing requirements. We seek to perform mission planning for vehicles in a fleet, while guaranteeing system safety requirements such as maintaining separation between vehicles. For such fleet systems operating over a large scale, centralized planning is infeasible. In this paper, we present a localized hierarchical planning procedure for the traffic management problem of a fleet of (potentially autonomous) UAM vehicles. We apply decentralized Markov decision process (MDP) policy synthesis on individual vehicles for route planning. We then use reactive synthesis to generate local runtime monitors or shields that handle conflict management and guarantee safety requirements in local sectors of operation. Shields re-route vehicles at runtime when safety violations are about to occur in their respective sector. We present a correct-by-design synthesis procedure that generates a local shield for each sector that must also satisfy assume-guarantee contracts with its neighbours. We show that satisfying these contracts guarantees the entire network of shields is correct with respect to the safety specifications with each shield only able to act in its local sector of operation. |

| Structured Synthesis for Probabilistic Systems Authors: Nils Jansen, Laura Humphrey, Jana Tumova and Ufuk Topcu. Abstract: We introduce the concept of structured synthesis for Markov decision processes. A structure is induced from finitely many pre-specified options for a system configuration. We define the structured synthesis problem as a nonlinear programming problem (NLP) with integer vari- ables. As solving NLPs is not feasible, we present an alternative ap- proach. A transformation of models specified in the PRISM probabilistic programming language creates models that account for all possible sys- tem configurations by nondeterministic choices. Together with a control module that ensures consistent configurations throughout the system, this transformation enables the use of optimized tools for model check- ing in a black-box fashion. While this transformation increases the size of a model, experiments with standard benchmarks show that the method provides a feasible approach for structured synthesis. We motivate and demonstrate the usefulness of the approach along a realistic case study involving surveillance by unmanned aerial vehicles in a shipping facility. |

| Formalizing CNF SAT Symmetry Breaking in PVS Authors: David Narváez. Abstract: The Boolean satisfiability problem (SAT) remains a central problem to theoretical as well as practical computer science. Recently, the need to trust the results obtained by SAT solvers has led to research in formalizing these. Nevertheless, tools in the ecosystem of SAT problems (such as preprocessors, model counters, etc.) would need to be verified as well in order for the results to be trusted. In this paper we explore a step towards a formalized symmetry breaking tool for SAT: formalizing SAT symmetry breaking for formulas in conjunctive normal form (CNF) using PVS. We believe this is an interesting undertaking because it requires us to go beyond the mere formalization of CNF formulas and transformations at the formula level. We need to jump out of the realm of CNF formulas into the language of graphs and then back to CNF formulas. We show how to exploit the PVS type system in order to express these transformations while avoiding notation bloat. |

| Online Parametric Timed Pattern Matching with Automata-Based Skipping Authors: Masaki Waga and Étienne André. Abstract: Timed pattern matching has strong connections with emph{monitoring} real-time systems. Given a log and a specification containing timing parameters (that can capture uncertain or unknown constants), emph{parametric} timed pattern matching aims at exhibiting for which start and end dates, as well as which parameter valuations, a specification holds on that log. This problem is notably close to robustness. We propose here a new framework for parametric timed pattern matching. Not only we dramatically improve the efficiency when compared to a previous method based on parametric timed model checking, but we further propose optimizations based on skipping. Our algorithm is suitable for online monitoring, and experiments show that it is fast enough to be applied at runtime. |

| Practical Causal Models for Cyber-Physical~Systems Authors: Amjad Ibrahim, Severin Kacianka, Alexander Pretschner, Charles Hartsell and Gabor Karsai. Abstract: Unlike faults in classical systems, faults in Cyber-Physical Systems will often be caused by the system’s interaction with its physical environment and social context, making them much harder to diagnose. To further complicate matters, knowledge about the behavior and failure modes of a system are often collected in different models. We show how three of those models, namely attack trees, fault trees, and Timed Failure Propagation Graphs can be converted into Halpern-Pearl causal models, combined into a single holistic causal model, and use actual causality reasoning to detect and explain unwanted events. Halpern-Pearl models have several advantages over their source models, particularly that they allow for modeling preemption, consider the non-occurrence of events and can incorporate additional domain knowledge. Furthermore, the holistic model allows us to reason across model boundaries and detect and explain events that are beyond a single model. Our contribution is a semi-automatic process to (1) convert different models into Halpern-Pearl causal models, (2) combine these models into a single holistic model, and (3) reason about system failures. We illustrate our approach with the help of an Unmanned Aerial Vehicle case study. |

| MLν: A Distributed Real-Time Modal Logic Authors: Moussa Amrani, James Ortiz and Pierre-Yves Schobbens. Abstract: Distributed Real-Time Systems(DRTS) can be characterized by several communicating components whose behavior depends on a large number of timing constraints and such components can basically be located at several computers spread over a communication network. Extensions of Timed Modal Logics(TML) such as, Timed Propositional Modal Logic(TPML), Timed Modal μ-calculus and Lν have been proposed to capture timed and temporal properties in real-time systems. However, these logics rely on a so-called mono-timed semantics for the underlying Timed Labelled Transition Systems(TLTS). This semantics does not capture complex interactions between components with their associated local clocks, thus missing possible action sequences. Based on Multi-Timed Labelled Transition Systems(MLTS), which are an extension of TLTS in order to cope with the notion of distributed clocks, we propose MLν, an extension of Lν that relies on a distributed semantics for Timed Automata (TA) instead of considering uniform clocks over the distributed systems, we let time vary independently in each TA. We define the syntax and the semantics of MLν over executions of MLTS with such a semantics and we show that its model checking problem against MLν is EXPTIME-complete. |

| Composing Symmetry Propagation and Effective Symmetry Breaking for SAT Solving Authors: Hakan Metin, Souheib Baarir and Fabrice Kordon. Abstract: SAT solving is an active research area aiming at finding solutions to a large variety of problems. Propositional satisfiability problems often exhibit symmetry properties, and exploiting them extends the class of SAT problems that state-of-the-art solvers can handle. Two classes of approaches have been developed to take benefits of these symmetries: Static and Dynamic Symmetry Breaking based approaches. They bring benefits for complementary classes of problems. However, and to the best of our knowledge, no tentative has been made to combine them.In this paper, we study the theoretical and practical aspects of the composition of two of these approaches, namely Symmetry Propagation and Effective Symmetry Breaking. Extensive experiments conducted on symmetric problems extracted from the last seven editions of the SAT contest show the effectiveness of such a composition on many symmetrical problems.

|

| Data Independence for Software Transactional Memory Authors: Jürgen König and Heike Wehrheim. Abstract: Software Transactional Memory (STM) algorithms provide programmers with a synchronisation mechanism for concurrent access to shared variables. Basically, programmers can specify transactions (reading from and writing to shared state) which then execute in a “seeming” atomicity. This property is captured in a correctness criterion called opacity. For model checking the opacity of an STM algorithm, we — in principle — need to check opacity for all possible combinations of transactions with all possible values to be written. This leads to several sources of infinity during model checking: infinitely many unique data values, infinitely many possible accesses in transactions, and infinitely many transactions being executed. In this paper, we propose a technique for avoiding the first source of infinity: infinitely many unique data values. To this end, we employ a notion of data independence and provide two results. First, we prove that opacity as a correctness criterion is data independent.Second, we develop conditions for checking data independence of STM algorithms and show their soundness. Together, these results allow to reduce model checking (of data independent STMs) to transactions with a single choice for values written.

|

| A Mixed Real and Floating-Point Solver Authors: Rocco Salvia, Laura Titolo, Marco Antonio Feliu Gabaldon, Mariano Moscato, Cesar Munoz and Zvonimir Rakamaric. Abstract: Reasoning about mixed real and floating-point constraints is essential for developing accurate analysis tools for floating-point programs. This paper presents FPRoCK, a prototype tool for solving mixed real and floating-point formulas. FPRoCK transforms a mixed formula into an equi-satisfiable one over the reals. This transformed formula is then input to different off-the-shelf SMT solvers for resolution. The proposed tool is integrated inside the static analyzer PRECiSA, which computes a sound estimation of the round-off error of a floating-point program. FPRoCK is used to detect unfeasible computational paths, improving the accuracy of PRECiSA. |

| Automated Backend Selection for ProB using Deep Learning Authors: Jannik Dunkelau, Sebastian Krings and Joshua Schmidt. Abstract: Employing formal methods for software development usually involves using a multitude of tools such as model checkers and provers. Most of them again feature different backends and configuration options. Selecting an appropriate configuration for a successful employment becomes increasingly hard. In this article, we use machine learning methods to automatise the backend selection for the ProB model checker. In particular, we explore different approaches to deep learning and outline how we apply them to find a suitable backend relying on given input constraints.

|

| Towards Full Proof Automation in Frama-C using Auto-Active Verification Authors: Allan Blanchard, Frederic Loulergue and Nikolai Kosmatov. Abstract: While deductive verification is increasingly used on real-life code, making it fully automatic remains difficult. The development of powerful SMT solvers has improved the situation, but some proofs still require interactive theorem provers in order to achieve full formal verification. Auto-active verification relies on additional guiding annotations (assertions, ghost code, lemma functions, etc.) and provides an important step towards a greater automation of the proof. However, the support of this methodology often remains partial and depends on the verification tool. In this paper, we report on an experiment made with the C software analysis platform Frama-C and its deductive verification plugin WP. We performed a complete functional verification of several C programs from the literature and real-life code using auto-active verification, focusing on the use of automatic solvers to verify properties that are classically verified with interactive provers. We discuss the benefits of this methodology and the current limitations of the tool, as well as proposals of new features to overcome them. |

| Fly-by-Logic: A Tool for multi-drone fleet planning using Temporal Logic Objectives Authors: Yash Vardhan Pant, Rhudii Quaye, Houssam Abbas, Akarsh Varre and Rahul Mangharam. Abstract: Safe planning for fleets of unmanned aerial systems (UAS) performing complex missions in urban environments has typically been a challenging problem. In the United States of America, the National Aeronautics and Space Association (NASA) and the Federal Aviation Authority (FAA) have been studying the regulation of the airspace when multiple such fleets of autonomous UAS share the same airspace, outlined in the Concept of Operations document (ConOps). While the focus is on the infrastructure and management of the airspace, the Unmanned Aerial Systems (UAS) Trac Management (UTM) ConOps also outline a potential airspace reservation based system for operation where operators reserve a volume of the airspace for a given time interval to operate in, but it makes clear that the safety (separation from other aircraft, terrain, and other hazards) is a responsibility of the drone fleet operators. In this work, we present a tool that allows an operator to plan out missions for fleets of multi-rotor UAS, performing complex time-bound missions. The tool builds upon the correct-by-construction planning guarantees of our previous work by translating missions to Signal Temporal Logic (STL). Along with a simple user interface, it also has fast and scalable mission planning abilities. We demonstrate our tool for one such mission through an example. |

Panelists

Joe Caram

NASA (JSC), US

Title: Chief, Exploration Mission Planning Office

Education:

Bachelor of Science, Texas A&M University (1986)

Master of Science, Texas A&M University (1989)

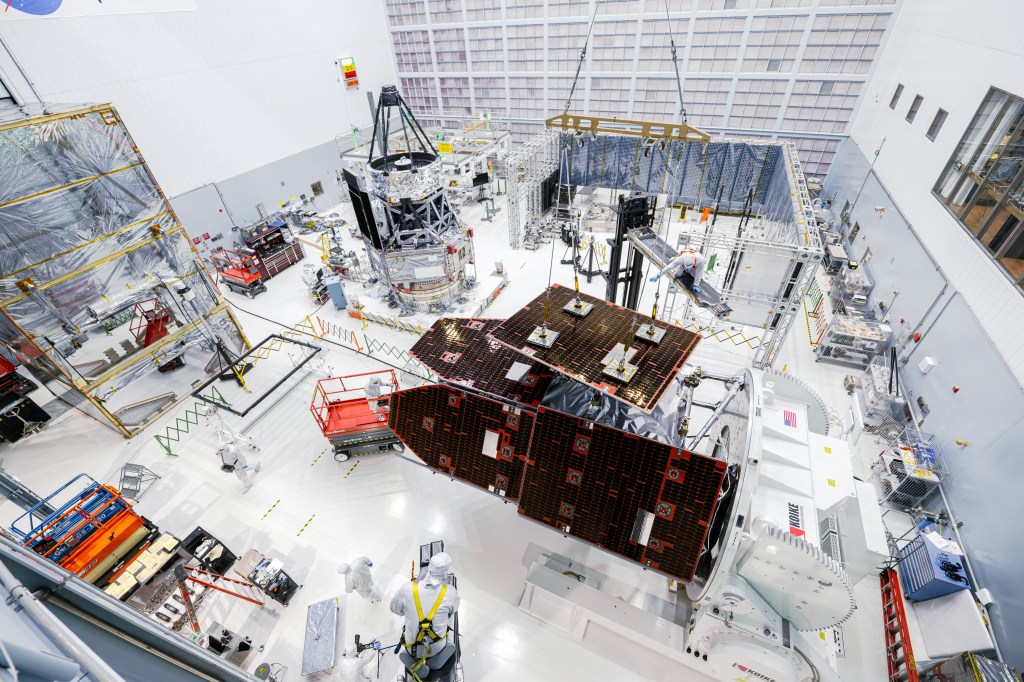

Mr. Joe Caram leads the Systems Engineering and Integration Team for concept maturation of the cislunar spacecraft – Gateway. His agency wide team is responsible for refining the overall concepts for the Gateway. His work includes defining the integrated system requirements, concept of operations, and element functional allocations that make up the Gateway spacecraft.

Prior to his current assignment, Joe has held key leadership roles in various projects, programs, and organizations including the lead Flight Dynamics Officer for the X-38 Project, Aerothermodynamics Team lead for the Columbia Accident Investigation, the Systems Engineering and Integration Chief Engineer for the Space Shuttle Return to Flight, Manager of the Integrated Systems Performance Office in Constellation SE&I Office, held Deputy Manager positions in both the Systems Architecture and Integration Office and the Technical Integration Office in the JSC Engineering Directorate, and was the manager of the Exploration Mission Planning Office of the JSC Exploration Integration and Science Directorate. He is the author or co-author of 24 technical papers.

Robert Ambrose

NASA (JSC), US

Title: Division Chief: Software, Robotics and Simulation

Education:

Ph.D. in Mechanical Engineering, University of Texas

Master of Science, Washington University

Bachelor of Science, Washington University

Dr. Robert Ambrose co-chairs the HX sub-committee on robotics and autonomous systems with Dr. Joy Kelly of Jacobs. Additionally, he co-chairs NASA’s Robotics and Autonomous Systems roadmap team.

Dr. Ambrose is the robotics lead for NASA’s human spaceflight architecture studies and is NASA’s POC for the National Robotics Initiative (NRI). Dr. Ambrose serves as the Division Chief of the Software, Robotics and Simulation Division at NASA’s Johnson Space Center in Houston Texas. He is responsible for flight spacecraft software, space robotics and system simulations for human spaceflight missions.

He received his Ph.D. from the University of Texas at Austin in Mechanical Engineering and his M.S. and B. S. degrees from Washington University in St. Louis. He is married to Dr. Catherine G. Ambrose and lives in the Houston’s Clearlake area.

Emily Nelson

NASA (JSC), US

Title:

Flight Director, Flight Operations Directorate

Acting Deputy Chief, Flight Director Office

Education:

B.S. in Mechanical Engineering, University of Texas (1998)

Honors and Awards:

NASA Outstanding Leadership Medal

NASA Exceptional Service Medal

NASA Exceptional Achievement Medal

Experience:

Emily came to JSC as an employee of United Space Alliance (USA) in September of 1998 as an International Space Station (ISS) Thermal Operations and Resources Flight Controller (ThOR). She supported on-orbit operations in ISS Expeditions 0-15, and supported ISS assembly missions ISS 2A.2A (STS-101), ISS 4A (STS-97), ISS 5A (STS-98), ISS 6A (STS-100), ISS 7A.1 (STS-105) and ISS 11A (STS-113). Emily served as lead ThOR for ISS Expeditions 3, 5, 7 and 8 and the ISS 9A (STS-112) and ISS 12A.1 (STS-116) assembly flights. In 2004 she was hired by NASA and continued to support the ISS program as a ThOR and the Constellation program as a leader in information architecture development until May, 2007.

In May of 2007, Emily was selected as a Flight Director and began ISS support with Expedition 16 in December 2007. Emily served as an ISS Flight Director in Houston’s Mission Control during the ISS 1J (STS-124), ISS ULF2 (STS-126) and ISS ULF3 (STS-129) missions of the Space Shuttle to ISS. She also supported the ISS 1JA (STS-123) and ISS 2JA (STS-127) missions as an International Partner Liaison Flight Director from the Japanese Space Agency’s SSIPC Control Center in Tsukuba, Japan. Emily served as lead Flight Director for ISS Expeditions 18, 27, 33, 46 and 49 and the lead ISS Flight Director for STS-132/ISS ULF4 and the third mission of the Orbital-ATK Cygnus vehicle (the OA-2 mission).

Emily is currently serving as Deputy Chief of the Flight Director Office, is also the lead Flight Director for a series of spacewalks to repair the Alpha Magnetic Spectrometer research platform 2019, and continues to support continuous ISS operations in Mission Control Houston.

Team Name:

Each NASA Flight Director chooses a symbol/color to represent his or her team. Ms. Nelson has chosen Peridot as the symbol for her flight control team because in addition to being a lovely stone, it’s a gemstone known to be found in meteorites. This “space stone” represents all of the extraordinary things, familiar and unfamiliar, we’re bound to find as we pursue exploration further and further from our beautiful blue planet.

Bill Othon

NASA (JSC), US

Title:

Gateway Verification and Validation Lead

Education:

Bachelors in Aerospace Engineering, University of Texas at Austin

Masters in Computer Science, University of Houston Clear Lake

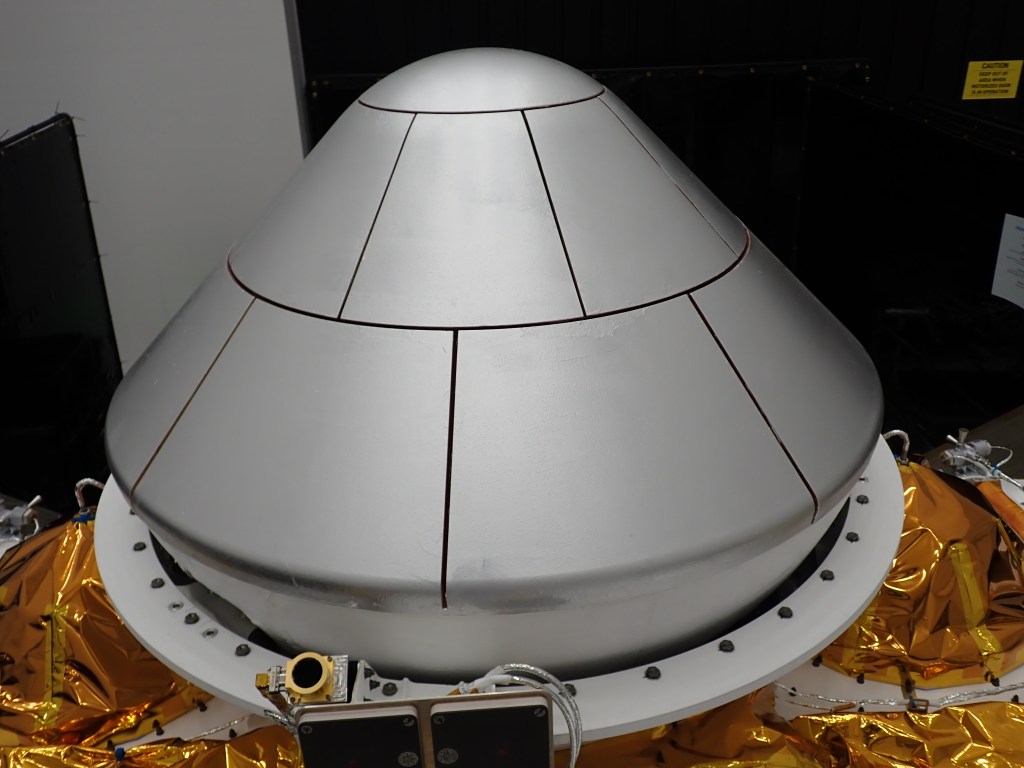

Bill Othon is the acting lead of Verification and Validation for the Gateway Program. Bill’s team is responsible for verifying the performance of the integrated Gateway vehicle, assembled in cis-lunar space over a number of missions and with contributions from US and international partners.

Bill is also the lead for Ground Testing for the NextSTEP cis-lunar habitat activity in the AES program. The team will conduct evaluations on a number of ground habitat prototypes developed by US Industry partners, in preparation for exploration missions in the Proving Ground of cis-lunar space.

Bill has been at JSC for over 30 years, and involved in both spacecraft operations and technology development projects. Bill has a Bachelors in Aerospace Engineering from the University of Texas at Austin and a Masters in Computer Science from the University of Houston Clear Lake.