Brian Okorn

Carnegie Mellon University

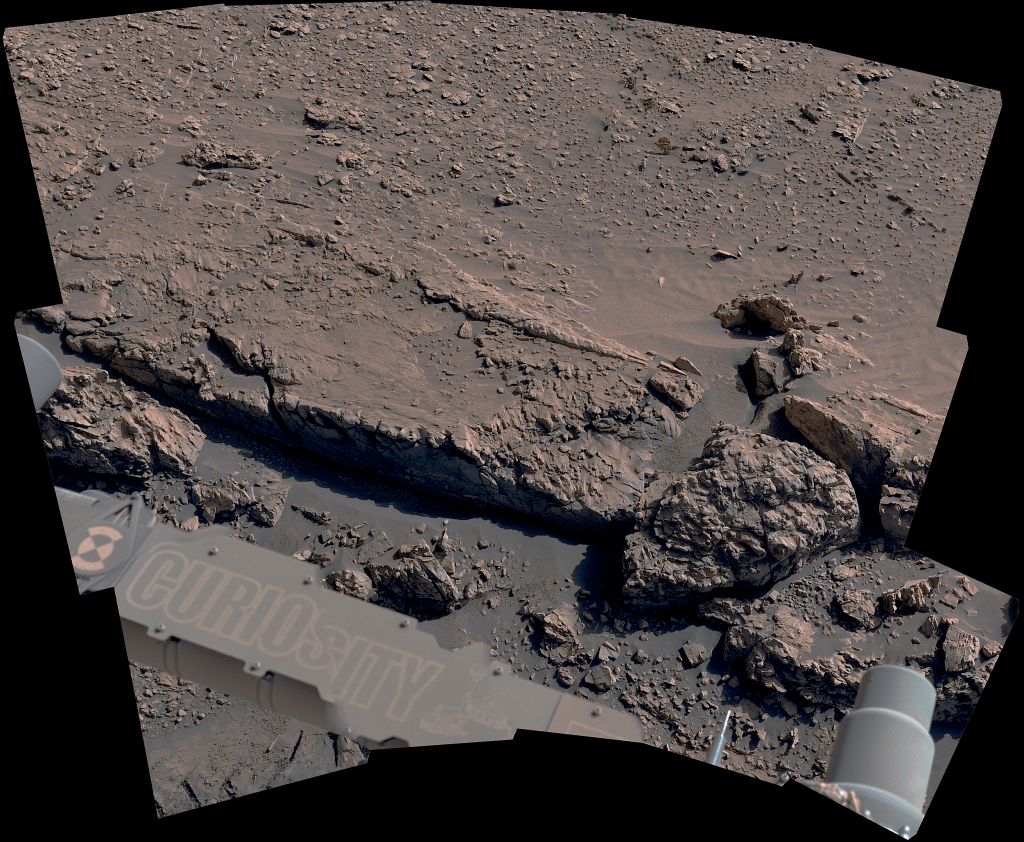

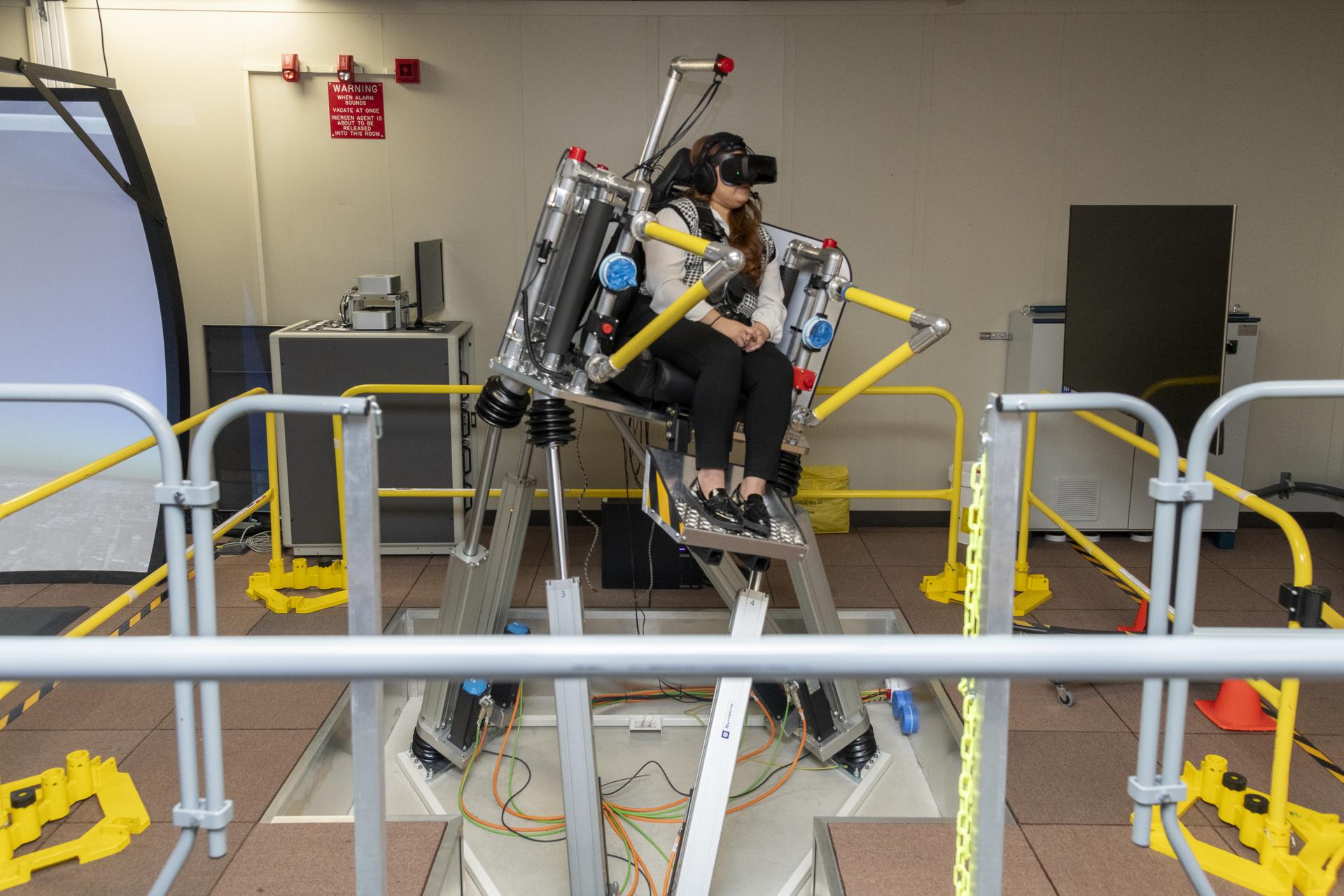

As robotic manipulation tasks (TA4.3), and dexterous manipulation tasks in particular (TA4.3.2), become more common, robots will need more advanced computer vision algorithms to interact autonomously with their environment. Until now, teleoperation has been the dominant strategy, but it is not effective for extraplanetary robotics. One of the major advantages of robotic manipulation is its role as a force multiplier: one astronaut can supervise and manage multiple robots, or a robot such as the humanoid Robonaut 2 can assist a human astronaut in completing what would otherwise be a multi-person task. Without autonomy, most robots require at least one, and sometimes several, dedicated operators to complete a task. Additionally, long-range teleoperation from ground control is not viable for dynamic tasks because of the high latency and potential communication dropouts associated with extraplanetary missions. With teleoperation no longer an option, autonomous manipulation becomes a requirement. To this end, there have been major advances in planning and manipulation, but most of these systems require objects tagged with fiducial markers or work only on a small set of accurately modeled objects. Without an accurate, real-time vision system in the loop to continually reduce uncertainty, these manipulation systems lack the robustness required for actual field work.

We propose a visual world model that is consistent with the known properties of the environment, such as static stability and non-intersection of objects, as well as with the physics of the sensors. The model has three major components: the generative model, the physics filter, and the exploration action planner. Tactile and visual data are processed by the generative model to produce candidate object models and poses. The physics filter tests these candidates in a physics simulator for static stability and interpenetration. The results of these tests are fed back into the generative model to improve the next prediction. Data about regions of high uncertainty are passed to the exploration action planner so that it can recommend uncertainty-reducing actions.
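To make the data flow between the three components concrete, the following is a minimal sketch and not the proposed implementation. It assumes a toy 2D world of axis-aligned boxes. The names `Box`, `physics_filter`, and `most_uncertain_object` are hypothetical. The generative model is replaced by a hand-written list of candidate scenes, the feedback step is reduced to discarding rejected candidates, and the exploration planner is reduced to picking the object whose position varies most across the surviving candidates.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import pvariance

EPS = 1e-6  # tolerance for contact and overlap tests


@dataclass(frozen=True)
class Box:
    """Axis-aligned 2D box (hypothetical stand-in for an object model and pose)."""
    name: str
    x: float  # horizontal center
    z: float  # height of the bottom face above the ground
    w: float  # width
    h: float  # height


def overlaps(a: Box, b: Box) -> bool:
    """True if the interiors of the two boxes interpenetrate."""
    x_overlap = abs(a.x - b.x) < (a.w + b.w) / 2 - EPS
    z_overlap = a.z < b.z + b.h - EPS and b.z < a.z + a.h - EPS
    return x_overlap and z_overlap


def supported(box: Box, scene: list[Box]) -> bool:
    """Crude static-stability test (an assumption of this sketch): the box
    rests on the ground, or its center lies over a box it is resting on."""
    if abs(box.z) < EPS:
        return True
    for other in scene:
        if other is box:
            continue
        touching = abs(other.z + other.h - box.z) < EPS
        centered = abs(box.x - other.x) <= other.w / 2
        if touching and centered:
            return True
    return False


def physics_filter(scene: list[Box]) -> bool:
    """Accept a candidate scene only if no boxes interpenetrate and all are supported."""
    no_overlap = all(
        not overlaps(a, b) for i, a in enumerate(scene) for b in scene[i + 1:]
    )
    return no_overlap and all(supported(b, scene) for b in scene)


def most_uncertain_object(scenes: list[list[Box]]) -> str:
    """Name of the object whose x position varies most across the given scenes."""
    def spread(name: str) -> float:
        return pvariance([b.x for s in scenes for b in s if b.name == name])
    return max((b.name for b in scenes[0]), key=spread)


# Hand-written candidates standing in for the generative model's output.
base = Box("base", x=0.0, z=0.0, w=2.0, h=1.0)
candidates = [
    [base, Box("top", x=0.5, z=1.0, w=1.0, h=1.0)],   # resting on the base
    [base, Box("top", x=0.2, z=0.5, w=1.0, h=1.0)],   # sunk into the base
    [base, Box("top", x=1.8, z=1.0, w=1.0, h=1.0)],   # center past the base edge
    [base, Box("top", x=-0.4, z=1.0, w=1.0, h=1.0)],  # resting on the base
]

plausible = [scene for scene in candidates if physics_filter(scene)]
target = most_uncertain_object(plausible)  # object to explore next
```

In this toy setup the second candidate is rejected for interpenetration and the third for instability, leaving two plausible scenes that disagree only on where the upper box sits. A real system would replace the support test with a physics simulation and would use the rejections to update the generative model rather than simply dropping candidates.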