Transcript

Host (Matthew Buffington): You are listening to the NASA in Silicon Valley podcast, episode 62. Joining me for the intro is Frank Tavares. You’ll recognize his voice from a recent story he did on Pluto, so Frank, tell us a little about that.

Frank Tavares: Hey, sure, Matt! Yeah, so during the New Horizons flyby mission in 2015, we got some great imagery of this really strange terrain on Pluto. We found these giant ice formations that look like spikes or ridges rising at high elevations. The official name for it is bladed terrain, because it kind of looks like knife blades sticking up, like if you opened your kitchen drawer and the blades were all pointed up. So the big mystery was that we didn’t really know how these things got there. But recently, a science team at Ames led a research investigation that found that these formations are actually made of methane ice and worked out the whole history of how they came to be, and it has actually taught us a lot about Pluto’s own climate and geological history, and how it became the object it is today.

Host: Cool! And you can catch that story on NASA.gov; there are also a couple of videos up on YouTube, Facebook, and Twitter. And obviously, there’s the audio version that Frank recorded for the podcast. But switching over to today, our guest is Ved Chirayath. Ved is a research scientist and lead for the Laboratory for Advanced Sensing at NASA Ames. And Frank, I believe you heard Ved speak at a couple of events recently?

Frank Tavares: Yeah! I listened to a talk he gave recently announcing the start of his new NeMO-Net project, which focuses on mapping coral here on Earth. The imagery was amazing, but what stuck out for me was when Ved said that there’s only one species on Earth that can be seen from outer space, and that’s coral. And we’re killing it at an incredibly high rate. That really gave me a sense of the stakes in understanding coral and what we’re doing to it. So if aliens out there are doing all the same stuff we are to look for life in the universe, what they would be able to see on Earth from outer space would be coral, not humans. And that’s a form of life that’s going extinct. So yeah, Ved’s a really incredible scientist doing really important work, and he actually recently won the Ames Early Career Research Award here at NASA’s center in Silicon Valley.

Host: Excellent. Before we jump in, a couple of quick reminders for folks listening: you can call us at (650) 604-1400 and leave a message or a question for the podcast. We’re also using the hashtag #NASASiliconValley, if you prefer digital. You can find us on iTunes, Google Play Music, SoundCloud, and through a normal RSS feed that works with nearly all podcast apps. And recently, we’ve started adding an audio version to YouTube as well, which Frank has actually been working on. If you like what you hear, do us a favor and leave us a review; that really helps others find the podcast. And of course, we are a NASA podcast, but we are not the only NASA podcast, so don’t forget to check out This Week at NASA, NASA Casts, and Houston, We Have A Podcast. But for now, let’s listen to our conversation with Ved Chirayath.

[Music]

Host: Tell us a little bit about yourself. How did you end up in Silicon Valley? How did you end up at NASA to begin with?

Ved Chirayath: This was a very concerted effort since I was about five years old.

Host: You were determined. Five-year-old Ved was like, “I’m going to get a job there.”

Ved Chirayath: I knew I wanted to work at NASA. I saw the Mars Pathfinder landing, with the Sojourner rover, in ’97. We saw the images coming in live at the JPL open house. And I just knew then and there that’s what I wanted to do.

Host: Really? So then is that your whole — high school, college was all centrally focused on “This is where I’m going to be”?

Ved Chirayath: It was. I actually created a master plan to become an astronaut. When I was around seven, I decided to study astrophysics and particle physics and then go to school in Russia and then try to come back to the United States to do school at Stanford. All those things actually worked out, fortunately. But there were some complications along the way.

Host: This is as a seven-year-old?

Ved Chirayath: Yeah, I was a very determined seven-year-old. I got into amateur astronomy and started doing a lot of backyard astronomy. Around that time, a lot of exoplanets were being detected using the transit method, which the Kepler mission also uses. I wanted to be the first kid on the block to find an exoplanet with that transit method, but using amateur equipment. I went to some telescope companies in the Los Angeles area and begged and pleaded to get a larger telescope.

Host: I was going to say, I’m imagining your parents thinking like, “Really? We’re going to buy a $10,000 telescope?”

Ved Chirayath: Yeah, they weren’t. That’s why there were a lot of science fairs, and then I got a three-inch telescope that we saved up for. And then I went to these telescope companies and said, “Hey, if we were able to make this discovery, it would be on your instruments. So wouldn’t it be a great idea to sponsor me as a high school student?”

Host: Wow, that’s hilarious.

Ved Chirayath: So we got two of them competing, and then one of them finally gave us a nice instrument. I had a mentor at USC, and I went out to Mount Pinos, north of LA. I spent about a year there just taking observations. I built my own camera system and eventually discovered a planet. That was one of the first. It was really large, so by today’s standards it was an easy detection. But at the time, it was the first detection on an amateur instrument.
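The transit method mentioned here can be illustrated with a toy calculation. This is a minimal sketch, not the pipeline Ved or the Kepler mission used: it assumes a uniformly bright stellar disk and a planet fully in front of it, so the fractional dimming is roughly the square of the planet-to-star radius ratio. The function names and the noise-free, box-shaped light curve are hypothetical simplifications.

```python
# Toy transit-method sketch: a planet crossing its star blocks a fraction of
# the light roughly equal to (R_planet / R_star) ** 2. Illustrative only.


def transit_depth(r_planet: float, r_star: float) -> float:
    """Fractional dimming for a planet fully in front of a uniformly bright disk.

    Ignores limb darkening and grazing transits (simplifying assumptions).
    """
    return (r_planet / r_star) ** 2


def synthetic_light_curve(n_points: int, depth: float, start: int, duration: int) -> list[float]:
    """Noise-free, box-shaped light curve: 1.0 outside transit, 1 - depth inside."""
    return [1.0 - depth if start <= i < start + duration else 1.0 for i in range(n_points)]


def measured_depth(flux: list[float], start: int, duration: int) -> float:
    """Estimate depth as mean out-of-transit flux minus mean in-transit flux."""
    in_transit = flux[start:start + duration]
    out_of_transit = flux[:start] + flux[start + duration:]
    return sum(out_of_transit) / len(out_of_transit) - sum(in_transit) / len(in_transit)


if __name__ == "__main__":
    # Radius ratio of about 0.1: roughly a Jupiter-sized planet around a Sun-like star.
    depth = transit_depth(0.1, 1.0)
    flux = synthetic_light_curve(n_points=500, depth=depth, start=200, duration=50)
    print(f"expected depth {depth:.4f}, recovered depth {measured_depth(flux, 200, 50):.4f}")
```

For a radius ratio of 0.1 the star dims by about 1%, which suggests why a very large planet was the easier target for a small amateur telescope. Real light curves add noise, limb darkening, and unknown transit timing, which this sketch leaves out.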

Host: That was college?

Ved Chirayath: That was high school.

Host: Really? So then where did you end up going to school? What were you studying? How did you do that?

Ved Chirayath: As part of that project, I got to go to the International Science Fair, and one of the awards you get when you win the top places is a lunch with astronauts. So I got to meet Pinky Nelson and gave him my master plan and said, “Hey, I’ve been meaning to meet an astronaut one day. So what do you think of this idea? I want to be an astronaut. I want to potentially go study in Russia to learn Russian and learn physics.” And he said, “That’s a great idea.” So I went with that: I graduated from high school early and went to Moscow State University for four and a half years.

Host: How’s your Russian by now? Did you figure it out?

Ved Chirayath: Now it’s great because four and a half years of intensive education in Russian will do that to you.

Host: That’s also very smart just for the sake of NASA. One of the cool things is we don’t have to do this alone. We have international partners. We have private partners. And obviously teaming up with Russia on the Space Station and things is a crucial part of what NASA does.

Ved Chirayath: Absolutely, and I’ve always admired the ability of immigrants coming into the United States to speak English fluently and almost natively. We almost expect it of everybody. Yet when our astronauts speak Russian, no offense to our astronauts, the accents are wrong and the cases are wrong, and Russian as a language is so rich and exacting. You really have to get it right to come across correctly.

Host: I always think college is hard enough, especially a master’s program, even in your own language, so huge props to anybody who can study in a different language. The content is already hard enough, but the language barrier, man, that’s rough.

Ved Chirayath: Yeah, I realized that the first year. I bit off more than I could chew. But after a couple of years of intensive training, it got better. People do this all the time when they come to the United States from a different country and they just have to, not only learn the language, but the academic system — how things get done, how research is done. It’s a mind shift for sure, but incredibly useful.

Host: It gets mentally exhausting switching between languages. But it’s an exercise, and it stretches you. Were you studying astrophysics at that point, and did you then land in a master’s program back here later on? Or how did that work?

Ved Chirayath: So my plan was to finish my bachelor’s degree, or master’s degree equivalent, in Russia and then come here for graduate school. This was also partly motivated by finances, because United States universities are very expensive, and the scholarship I got would cover one year of an Ivy League school here but my entire education in Russia. I went with that option. It saved a lot of money, and then I actually had to leave Russia early due to an uptick in violent attacks, neo-Nazi attacks. I got attacked a few times, and I just said, “It’s not worth spending the last couple of months here.” I applied to Stanford as a transfer student and said, “My goal was to apply here as a graduate student, but I’m in this predicament.” And they actually took me as a transfer student and gave me a path to a PhD and full funding. That was really tremendous.

I spent four and a half years in Moscow and then in 2009 transferred to Stanford to finish my bachelor’s in physics with a concentration on astrophysics. And then I went on.

Host: You also grew up in LA, and now you’re in Northern California, in the Bay Area. So you’ve basically spent most of your time in California.

Ved Chirayath: Yes, and I really like the Bay Area.

Host: Then coming to NASA, was it like an internship, a contractor thing? How did you end up making that leap on over?

Ved Chirayath: I had started graduate school at Stanford after finishing my undergrad and I’d started picking up the research that I’d started actually when I was in high school and looking with amateur telescopes again. I went back to the same companies and I said, “Hey, I’m back again. I need a larger instrument.”

Host: “Remember me? Now I speak Russian.”

Ved Chirayath: It was actually really generous of them, because I drove down in this very questionable-looking van. I made a presentation and said I was interested in doing high-resolution imaging through the Earth’s atmosphere. One of the issues we have looking at objects in space is that the atmosphere blurs everything; stars twinkle for that reason. So I was developing a technique at the time called atmospheric lensing that would use the atmosphere as a lens to look at objects at high resolution. But I needed big telescopes to do it, and big telescope time costs a lot of money. My graduate advisor said, “Sure, go for it.” So I went, and I was actually very successful: I came back with three 20-inch telescopes. We set them up on our department building, and I started testing this out again.

It was actually an image of the sun that got me an invitation to Ames to give a talk to a group interested in this work. I’d finally reached the theoretical limit of the telescope’s resolution, effectively imaging through the atmosphere’s disturbances very well. I imaged the Venus transit in 2012, and I presented that work here. The algorithm was still very basic at the time, and I didn’t really understand why so many things were working the way they were. But we had essentially achieved the equivalent of a space instrument with a very small ground-based instrument. It was at that talk that the center director at the time and a lot of other scientists said, “Have you considered working with us?” So I was brought in as an intern, and now I’m a civil servant here.

Host: Did you get pulled into — they call it the Pathways Program or whatever its predecessor was?

Ved Chirayath: At the time it was USRA and then it went into a Pathways fellowship in 2013, so a year after that.

Host: Cool. Where are you sitting right now? What kind of stuff are you working on?

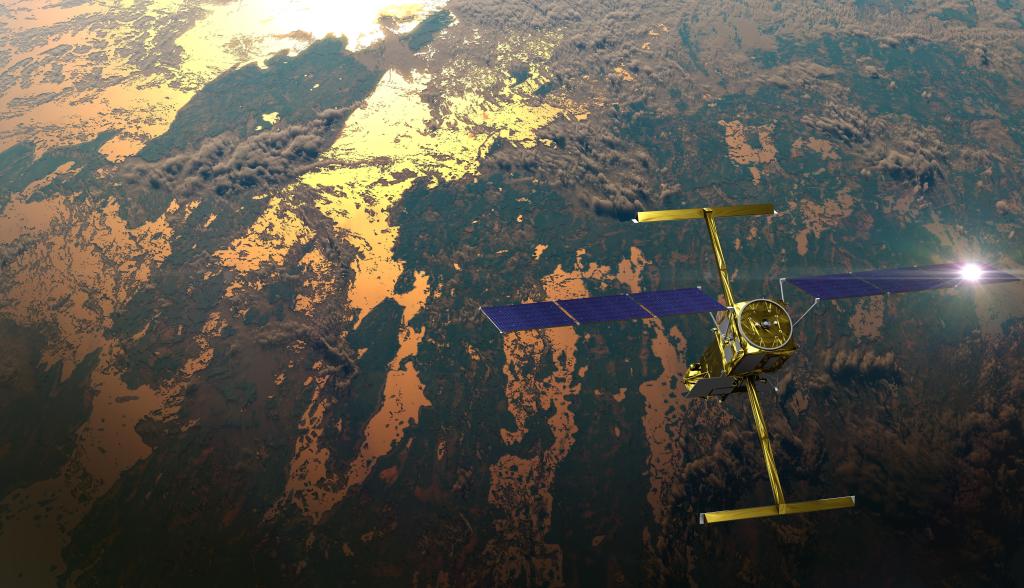

Ved Chirayath: Now I’ve been pulled over to the blue side. I first looked at celestial objects through the Earth’s atmosphere, and then I started imaging satellites, and perhaps things that I should not have been imaging at high resolution. One of my colleagues, actually the partner of one of my colleagues, in marine biology, said, “Have you looked at the ocean’s surface and doing this through ocean waves? Because right now, we have no way of looking through the refractive distortions coming from the surface of water.” I said, “No, I haven’t done that. But that actually sounds like a much easier problem than the atmosphere, because it’s just one surface that causes the distortion.” I’d been working a lot with UAVs at the time, small Unmanned Aerial Vehicles or drones, so I put together a project. We deployed my technique on a coral reef to look through the ocean’s surface, and it worked remarkably well.

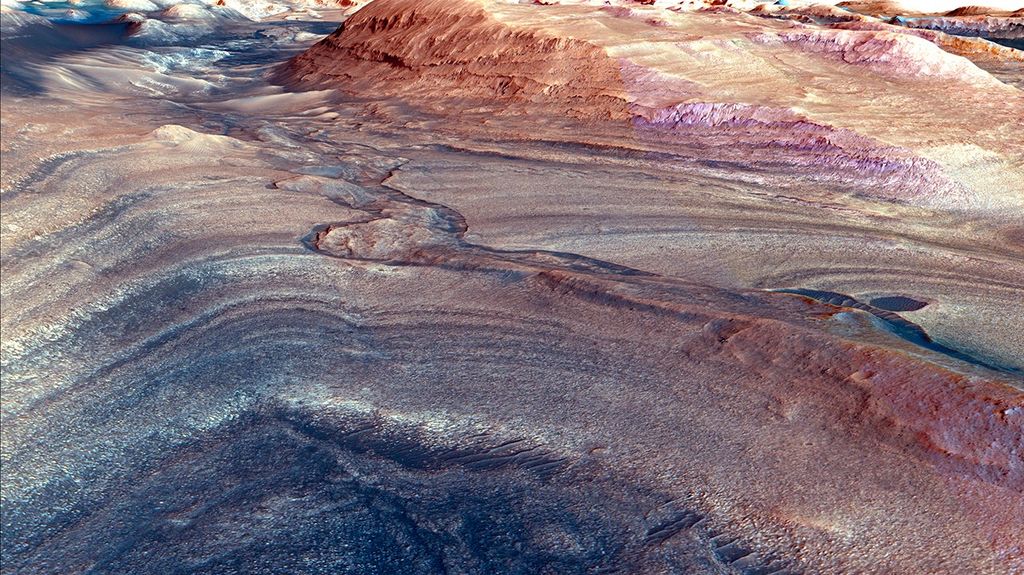

I got completely shifted to looking through the air-water interface and trying to figure out how to look into the ocean. I was really surprised to learn that we’ve mapped more of Mars and the moon combined than we have of our own ocean floor, which is startling because no one lives in those places. But our lives really depend on the ocean, and my mentors here at NASA Ames convinced me that my place was in Earth Science, developing instruments to figure out how to protect our own spaceship first, and then go off and try to do something out in space. That’s how I got pulled over into Earth Science.

Host: Well, then there’s an obvious parallel as we try to find exoplanets, or even look at celestial bodies in our own solar system. As we mentioned before we started, there’s Europa or Enceladus, some of these places that have water underneath and a lot of ice. What we’ve learned looking at our own planet can be tweaked to look at other planets as well.

Ved Chirayath: Absolutely. And a lot of the technologies we develop for space science come out of Earth science instruments that we test and validate on, frankly, the coolest planet we know of: the only one that has life, a huge diversity of life, and multiple environments. As I looked up to do space science, I realized I was already sitting on a gold mine. I found a planet that has oxygen, that has large organisms, that has incredible diversity. I think I could spend the rest of my life studying planet Earth and not get bored.

Host: You were talking about the lensing and looking through liquid, is that also like visual spectrum kind of stuff? Because I know if you’re looking through the ocean, at a certain point, the light just doesn’t get down that far. Were you working on that stuff as well? How do you correct that or look further than where light gets?

Ved Chirayath: The ocean causes a lot of problems for how we view things. Humans have been really good at exploring the electromagnetic spectrum since we first discovered it, figuring out all the different frequency regimes we can look at. But when light interacts with water, you’re really stuck with just a few frequencies that transmit, and they happen to be the optical frequencies that we naturally see. Blue light in clear tropical waters has a very deep penetration depth: usually around a hundred meters before it’s mostly attenuated. Beneath that it’s dark. Once you get —

Host: You’re not seeing anything after that.

Ved Chirayath: Right. Even under the best circumstances in the open ocean, past a hundred meters, three hundred meters, you’re out of what we call the photic zone, which is where visible light penetrates. And then it’s just dark. What’s interesting is the ocean’s average depth is four kilometers so most of our understanding of the ocean is this tiny little layer at the top. That’s not actually where all the life may be. It may be in different areas. One problem is optical absorption. The second problem then comes from just the interface of air and water at the surface. There’s a refractive index jump between the two, which means that light going across that boundary will get bent.

Host: And I’m sure that bubbles and air pockets and other —

Ved Chirayath: We’ll call those higher order defects or floating —

Host: Too far down. Let’s go back to the basics.

Ved Chirayath: But for the most part, if you’re trying to look at a target underwater, and you can see this at a swimming pool when you look down at your friend underwater, you see the blurring effect of waves first. The second effect you’ll see is that as he gets deeper, you see less and less color in him. You start seeing just one wavelength coming through, and that’s because water molecules absorb certain frequencies, and the light is also scattered.
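The two effects Ved describes, bending at the air-water boundary and color loss with depth, can be sketched with Snell’s law and Beer-Lambert attenuation. This is a minimal sketch under stated assumptions: a flat surface, a refractive index of about 1.333 for water, and approximate pure-water absorption coefficients. Real seawater also scatters light and carries dissolved and suspended material, so the constants and function names here are illustrative, not measured values from the episode.

```python
import math

N_AIR = 1.000
N_WATER = 1.333  # approximate refractive index of water for visible light

# Assumed absorption coefficients (1/m), roughly those of pure water.
# Scattering and dissolved or suspended material are ignored.
ABSORPTION_PER_M = {
    "blue (~450 nm)": 0.01,
    "green (~550 nm)": 0.06,
    "red (~650 nm)": 0.34,
}


def refraction_angle_deg(incidence_deg: float, n1: float = N_AIR, n2: float = N_WATER) -> float:
    """Snell's law n1*sin(t1) = n2*sin(t2); angles measured from the surface normal."""
    s = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(s) > 1.0:
        raise ValueError("total internal reflection: no transmitted ray")
    return math.degrees(math.asin(s))


def remaining_fraction(coeff_per_m: float, depth_m: float) -> float:
    """Beer-Lambert attenuation along a vertical path: I(z) / I0 = exp(-k * z)."""
    return math.exp(-coeff_per_m * depth_m)


if __name__ == "__main__":
    print(f"30 deg in air -> {refraction_angle_deg(30.0):.1f} deg in water")
    for depth in (1.0, 10.0, 30.0):
        fractions = ", ".join(
            f"{band}: {remaining_fraction(k, depth):.1%}" for band, k in ABSORPTION_PER_M.items()
        )
        print(f"{depth:>4.0f} m  {fractions}")
```

With these assumed coefficients, a ray entering at 30 degrees continues at about 22 degrees below the surface, and after 10 m roughly 90% of the blue light survives absorption while only a few percent of the red does, which is why a swimmer’s colors fade toward blue.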

The technology I had to develop had to address two issues. One was this distortion from ocean waves. The second is this incredible absorption with depth, which is a fundamental physical limit on how much you can see. So the algorithm I developed, fluid lensing, exploits something called caustics. When you look at a pool on a sunny day, you’ll see these dancing, bright bands of light at the bottom of the pool. This really intrigued me. I did a lot of research into how they were formed, and that became the basis of the algorithm we eventually ended up using as part of this instrument. These caustics form because light essentially gets focused, or magnified, by a wave.

Host: Almost like a magnifying glass or those glass pyramids or prisms, where it looks and you can isolate to have a dot.

Ved Chirayath: Absolutely. It’s actually a question that has intrigued people for a long time. Airy in 1838, I believe, first asked this question: what are these bright bands of light, and how bright do they get? If you figured out the properties of that band of light, you could actually figure out a lot about what the ocean surface is doing, because you know what shape it has. Just as with a magnifying glass, if you focus the light, you’d notice, “Okay, this is a dot of high intensity, enough to fry something, and it comes from this lens shape.”

I started off trying to do this experimentally. It was very difficult because it’s a complicated system and it moves. Finally, on the supercomputer at Ames, we just simulated the entire structure. If you look at a Pixar film or some other computer-generated film, you’ll notice they can simulate the interactions of light very accurately. There are a lot of advanced rendering techniques for that, and we ran them on a supercomputer. I basically set up a swimming pool in the supercomputer, created ocean waves, and then looked at the patterns of light. Sure enough, caustics emerged, and we saw these bright bands moving around. Then, if you look at the intensity of those caustics, you notice something no one had ever figured out before: they can be almost a hundred times as bright as sunlight above the water. This is a really interesting result, because it means that everything that evolved underwater has figured out a way to deal with bands of light hitting it that are a hundred times the intensity of normal sunlight. That’s something terrestrial organisms may not have had to evolve with, but fish with very sensitive eyes somehow manage to cope with this incredible intensity.

Host: And not go blind.

Ved Chirayath: Yes. I have a theory about some of our suntan response underwater. I’ve noticed, just diving a lot, that you tan very quickly even though you’re not above the surface.

Host: That makes sense. You’re in a magnifying glass.

Ved Chirayath: And although the average intensity may be much lower, the instantaneous intensity from one of these bands hitting you can be incredibly high. A lot of organisms respond to that with either a skin pigmentation change or some other feature. So the first main result is that these caustics are bright, and when they form, the traditional concept of optical absorption with depth goes away, because you now have this bright band of light that can penetrate much, much deeper than the normal sunlight coming through a diffuse surface. That was the first part of this puzzle.
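The focusing that produces caustics can be reproduced qualitatively in a toy two-dimensional ray trace: vertical sunlight refracts through a sinusoidal water surface, and the landing points on a flat bottom are histogrammed. This is a hedged sketch, not the fluid lensing algorithm or the Ames supercomputer simulation; the function name, wave amplitude, wavelength, and depth are assumptions chosen so a caustic forms near the bottom.

```python
import math


def caustic_intensity_profile(amplitude_m: float = 0.05, wavelength_m: float = 1.0,
                              depth_m: float = 2.0, n_water: float = 1.333,
                              n_rays: int = 20000, n_bins: int = 200) -> list[float]:
    """Toy 2D ray trace of vertical sunlight through h(x) = A*cos(kx) onto a flat bottom.

    Returns light intensity per bottom bin relative to a flat surface (1.0 = no focusing).
    """
    k = 2.0 * math.pi / wavelength_m
    counts = [0] * n_bins
    for i in range(n_rays):
        # Rays enter evenly spaced over one wave period, with a crest at x = 0.
        x = -wavelength_m / 2.0 + (i + 0.5) * wavelength_m / n_rays
        height = amplitude_m * math.cos(k * x)
        slope = -amplitude_m * k * math.sin(k * x)        # dh/dx
        theta_i = math.atan(abs(slope))                    # incidence angle from local normal
        theta_t = math.asin(math.sin(theta_i) / n_water)   # Snell's law, air -> water
        tilt = theta_i - theta_t                           # refracted ray's tilt from vertical
        # The refracted ray leans uphill (the sign of the slope), toward the
        # nearest crest, so rays converge beneath crests.
        x_bottom = x + math.copysign(math.tan(tilt), slope) * (depth_m + height)
        # The surface repeats, so wrap into one period and bin the landing point.
        x_wrapped = (x_bottom + wavelength_m / 2.0) % wavelength_m
        b = min(int(x_wrapped / wavelength_m * n_bins), n_bins - 1)
        counts[b] += 1
    rays_per_bin_if_flat = n_rays / n_bins
    return [c / rays_per_bin_if_flat for c in counts]


if __name__ == "__main__":
    flat = caustic_intensity_profile(amplitude_m=0.0)
    rippled = caustic_intensity_profile()
    print(f"flat surface peak: {max(flat):.2f}x, rippled surface peak: {max(rippled):.1f}x")
```

A flat surface returns a uniform profile of 1.0, while the rippled surface concentrates rays under the wave crest. In pure geometric optics a caustic is infinitely bright, so the peak here is capped by the bin width and should not be read as a physical brightness ratio; the sketch only shows the focusing itself.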

The second was trying to figure out how to tie these bands to the surface of the ocean, or the surface of a pool, and figuring out what the lenses are, essentially. If you look down at a pool and see this caustic band, you’ll also notice that the wave causing that band to form acts like a magnifying glass and magnifies whatever object is beneath the surface. Essentially, I had to teach a computer to find those magnifying events when they passed over an object and magnified it many, many times beyond what the sensor alone could see, and also tell the algorithm to track those caustics, so that it wasn’t just magnifying the object but also lighting it up with a bright band of sunlight. Those two together allow you to create something called a fluid lensing algorithm, which is what I developed. It images at high resolution, using the ocean’s surface essentially as a magnifying lens or telescope between the object and you, imaging from above the surface, and it also works as a scanning lighting system: the bright bands going across the target light it up instantaneously. So you can fix the two issues we have with ocean world sensing. First, the caustic bands overcome the optical absorption of light, and second, the refractive distortions actually end up helping you, because they act like little lenslets that magnify the target. It only works under certain conditions. You can’t do this globally, but for shallow marine systems, this has really been a game changer. We can now look at a coral reef from an aircraft at a resolution of half a centimeter or less, in three dimensions. We understand how these ecosystems look for the first time. We’re able to map huge areas of islands in the Pacific.

Host: You’re not just reliant on divers going down or taking photos. You can, whether it’s a drone or an airplane, map it so much faster and understand it so much more.

Ved Chirayath: That comes with a whole bunch of other problems. You have these huge datasets. But for the first time you have a map, a 3D map, of what’s going on under the surface. These coral reef ecosystems have a biodiversity that’s just above anything else we know of on Earth. If you go to the Amazon rainforest, the average number of species per cubic meter is around 100 times lower than in a coral reef system. These systems are not just pretty: they support a huge amount of the biodiversity in the oceans, they shelter island systems from tsunamis and storm surges by forming a natural barrier, and they support a lot of humans with a very steady food source through fisheries. We’re just understanding now for the first time —

Host: These are the beginning stages.

Ved Chirayath: — right, what they look like. Before, we really had to rely on diver data to understand things at that spatial scale. Corals right now are changing drastically because the ocean is changing a lot of properties at once. The temperature of the ocean’s surface is changing, the salinity in a lot of places is changing, but most importantly, the acidity of the ocean is changing. As we pump out a lot of carbon dioxide, that carbon dioxide gets absorbed directly by the ocean. Almost 80% of the carbon dioxide we produce goes into the ocean. As it goes into the ocean, it changes the acidity, or pH, of the ocean. So if you’re a coral and you’re made of a calcium carbonate skeleton, your whole structure depends on a certain acidity, and if that acidity changes, it starts dissolving. A lot of corals are undergoing bleaching events or essentially dying off, and they are losing the structure that is so crucial to supporting fisheries in those areas, as well as providing a natural barrier against storm events.

Host: What’s interesting is what you’re describing about marine life: as you start looking at that biodiversity, at how organisms have evolved or figured out how to survive with this magnified light on them, it correlates with how shocked we are that life can survive in some of the most extreme conditions. What does that mean for understanding these oceans and how things survive under these crazy conditions? What does that mean when we’re looking at Mars, at Europa, outside of our solar system? If we can get a better look at some of the planets Kepler has found, then we know, “Hey, life has existed in this way under these circumstances here on Earth. What does that mean?” Maybe some places that you’d typically rule out, you don’t have to rule out, or you really don’t know.

Ved Chirayath: Absolutely. I guess to me it’s very strange because we’re here at NASA. One of our main goals is to find life. I like to think that we’re the Star Trek of all the agencies. But really the only defining trait of humanity thus far is the mass extinction of life on Earth. We’re very good at killing things off, large amounts of species and it’s baffling to me that we are so interested in finding new life forms when we’re sitting on a trove of them and we’re losing them at an unprecedented rate.

Host: Looking at the fluid lensing, looking at even how you apply that to looking through the atmosphere, what do you see as the next step? What’s the next phase? What are you looking at five years from now from all the research and stuff that you’ve done? Where do you hope that it’s going or where do you see it moving?

Ved Chirayath: My job now is really just to invent new instruments and get them in the hands of the science community to use them to understand either this coral ecosystem or different types of ecosystems. So my main goal right now is getting this technology mature enough to fly on a spacecraft. NASA’s unique vantage point in space helps us understand the planet like no one else can. So right now, we’ve been testing them on drones and flying around different coral reef systems producing very large data sets and really maturing the technology to show, not only does this produce a very high resolution result, but it tells you something very important about this ecosystem. It tells you the breakdown of the different species or the abundance of the different types of morphologies. That’s a key science question that will motivate a satellite instrument.

I’m looking at CubeSats. I’d like to have a nice fleet of CubeSats doing this around our planet. We still have not mapped all the corals at this resolution; we’ve probably mapped one ten-thousandth of the entire coral surface of the Earth with this technique. It’s a hard problem: surveying the entire planet at half-centimeter resolution, just on the coral reefs alone, is a very large amount of data. The other development we have going on right now is a lot of machine learning tools to take in all that data and have a human label just a small portion of it so the tools learn how to classify all of it. We’ve been very successful in using the centimeter-scale data in particular to assess the percent cover of the coral reef with very little error. We’re now down to about 5% error; before, it was around 30%. That metric alone will help us understand how these systems are changing with the changing climate, and how our impacts in certain environments are affecting the ecosystem before it’s too late. For example, if we start dredging or dumping a lot of pollutants in one area, we can see that change on a weekly timescale, whereas our current sensors have very low resolution and only see changes on a yearly timescale, after it’s too late and the entire reef is bleached.
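Percent cover, the metric mentioned here, is the share of a mapped area occupied by a given class. The episode does not describe how the NeMO-Net tools compute it, so the following is a minimal sketch assuming a grid of per-pixel labels from some classifier, with error taken as the absolute difference in percentage points against a reference map. The label names, example grids, and function names are hypothetical.

```python
from collections import Counter

# Hypothetical per-pixel labels for the same 4 x 4 patch of reef:
# REFERENCE from hand labelling, PREDICTED from some classifier.
REFERENCE = [
    ["coral", "coral", "sand", "sand"],
    ["coral", "coral", "sand", "algae"],
    ["coral", "sand", "sand", "algae"],
    ["coral", "sand", "algae", "algae"],
]
PREDICTED = [
    ["coral", "coral", "sand", "sand"],
    ["coral", "coral", "sand", "algae"],
    ["coral", "coral", "sand", "algae"],
    ["coral", "sand", "algae", "algae"],
]


def percent_cover(label_map: list[list[str]], target: str = "coral") -> float:
    """Share of labelled pixels equal to `target`, in percent."""
    counts = Counter(label for row in label_map for label in row)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("label map is empty")
    return 100.0 * counts[target] / total


def cover_error(predicted: list[list[str]], reference: list[list[str]],
                target: str = "coral") -> float:
    """Absolute difference in percentage points between two cover estimates (assumed metric)."""
    return abs(percent_cover(predicted, target) - percent_cover(reference, target))


if __name__ == "__main__":
    print(f"reference {percent_cover(REFERENCE):.2f}%, predicted {percent_cover(PREDICTED):.2f}%")
    print(f"error {cover_error(PREDICTED, REFERENCE):.2f} percentage points")
```

Here the reference map has 6 of 16 pixels labelled coral (37.5%) and the prediction has 7 (43.75%), an error of 6.25 percentage points. Whether the 5% and 30% figures quoted in the episode are percentage points or relative errors is not stated.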

Host: It’s fascinating to see the entire tapestry of what NASA does: from looking at the stars and developing your algorithms, to looking at the ocean and using a supercomputer to create new instruments that’ll go on small sats, whether it’s small missions or large missions. Everything NASA does touches it in one way or another.

For folks who are listening who want to learn more about the cool stuff you’re working on, or who have direct questions for Ved, we are using the hashtag #NASASiliconValley, and we’re on Twitter @NASAAmes. Thanks so much for coming.

Ved Chirayath: Thanks so much for having me.

[End]