A conversation with Uland Wong, senior computer scientist in the Intelligent Robotics Group at NASA’s Ames Research Center in Silicon Valley.

Transcript

Host (Matthew Buffington): Welcome to NASA in Silicon Valley, episode 52. As of today, it has been exactly one year since this podcast launched last summer. We want to give a huge thanks to everyone that has listened to us this year. This has been a ton of fun putting together and chatting with a wide range of folks at NASA. Just so you all know, we do follow the #NASASiliconValley and check out the podcast metrics to understand how many people listened and what conversations have done particularly well. To that end, we would love to add more interactivity to the podcast interviews. We could add questions live on-air, have questions sent to us in advance, or even take requests on any particular topic or guest you want to hear from. So go ahead and use #NASASiliconValley on Twitter and let us know your thoughts.

Similarly, no matter what podcast app or platform you use, go ahead and leave us a review, whether it is iTunes, Google Play, SoundCloud, or any other app. Reviews really help podcasts get noticed by other people that might not know about it yet. Again, thanks for all the support and don’t hesitate to let us know how we can improve things.

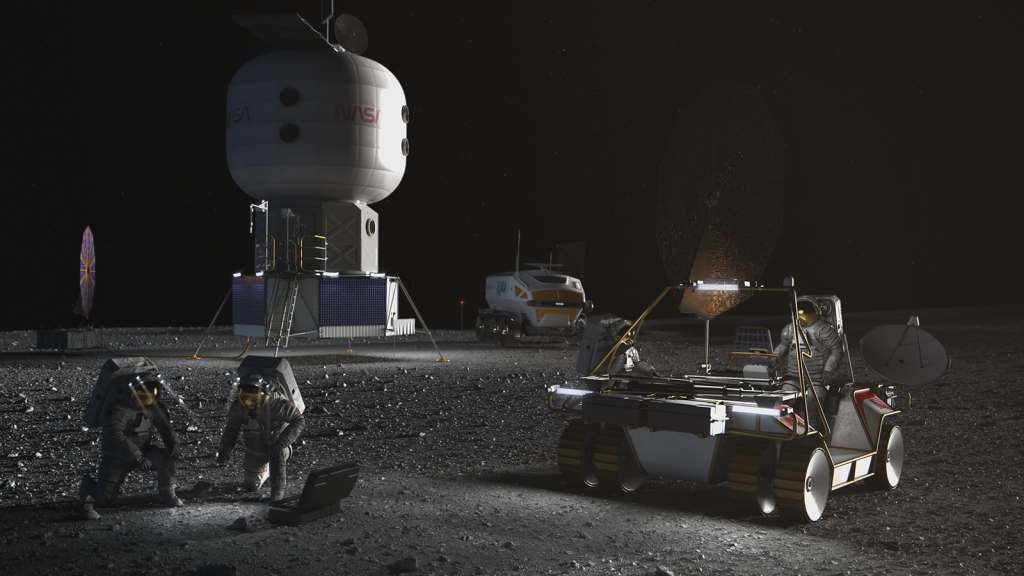

This week we’re talking to Uland Wong, a robotics researcher and computer scientist with the Intelligent Robotics Group here at NASA’s Ames Research Center. Uland works on perception and physics-based vision for mobile robotics. Using special stereo-camera systems, Uland and his team built a sandbox filled with Moon dirt as a simulated lunar environment, to understand how to navigate in the challenging lighting conditions at the Moon’s poles for future missions, looking at planetary and subterranean robotics. In fact, check out our Ames Facebook page for a recent Facebook Live we just did at the Lunar Simulant Testbed Lab here at Ames. And now, here’s Uland Wong.

[Music]

Host: Tell us a little bit about yourself, what brought you to Silicon Valley, how did you join NASA? Tell us your story.

Uland Wong: First off, thanks for having me. I came to NASA in 2015, so not too long ago.

Host: So you’re new.

Uland Wong: Yep. I was in one of these research scientist positions at Carnegie Mellon [University] in Pittsburgh, Pennsylvania. I did my Ph.D. there in robotics under professor Red Whittaker. He’s the leading roboticist of today. He did all the self-driving vehicles and he won the [DARPA] Urban Challenge, and he was my advisor.

Host: I always think of Pittsburgh as the land of sweet potato fries. It’s the one place they put sweet potato fries on salads, on sandwiches, everything.

Uland Wong: That’s true. And they have pierogies. They like their pierogies.

Host: Growing up, did you study engineering and robotics when you were going to Carnegie Mellon?

Uland Wong: Growing up, I knew I wanted to be an engineer or a scientist. And in high school, I had an internship at the local university up in Portland, Oregon. We did robotics and that captured my imagination and I was hooked since then. I did my undergrad in computer engineering at Carnegie Mellon and I stayed there. I knew all those people and I stayed through the Ph.D. and even for two years after the Ph.D. in the research scientist role.

Host: And so how did that end up leveraging into NASA?

Uland Wong: During my Ph.D., I did a lot of robots for underground work – mining, cave exploration, things like that, tunnels, some defense work. And NASA, at the time, was very interested in exploring underground caves. So, out of that project, I met a lot of NASA people. I always was interested in space and so one thing led to another. Eventually I applied here and got accepted and came all the way to San Francisco.

Host: Wow, so this is relatively soon. It’s been just a little bit over a year?

Uland Wong: Yeah, about 20 months actually.

Host: Nice. When you came over here – I’m guessing you’re still working on robotics – how did you get launched into things?

Uland Wong: I knew a lot of the people before I came because surprisingly, the robotics group here has a lot of CMU alumni. I was very comfortable with those people. Some of my friends from grad school were already here in the Intelligent Robotics Group. So I dove right into the work. They had a need for people who did computer vision and people who had experience with perception in dark environments like caves. I did that, so I fit right in and got to work on day one.

Host: Excellent. Yeah, thinking of Carnegie Mellon, for folks who are local, if you’re on [Highway] 101 and you see the big hangar from where NASA is, there’s a chunk of it that is NASA. Then we have another chunk called the NASA Research Park. Carnegie Mellon even has some space there. So it seems like a perfect place for a partnership.

Uland Wong: We have a satellite campus there. A lot of people work there and then go to Pittsburgh or do the switch around. We’re very familiar with NASA.

Host: It’s a nice comfortable landing place over here. When you came in – obviously you’re taking your expertise from working in dark conditions and caves – are you looking at sensors or the actual robots themselves?

Uland Wong: Exactly. My area of expertise particularly is perception for robots. Those are the sensors. You can imagine the “eyes” of the robots. We look at cameras and lasers, radar, thermal imagery, most of that has to do with optical phenomenon [sic] like sensing of light. But that provides a way for robots to navigate and interact with the environment and understand the environment.

Host: Is that fairly new? Obviously, humans, you have to be able to see. For robots, are these sensors just getting more and more sophisticated? What are you playing with? Are you making these more in tune?

Uland Wong: So in the robotics community, everybody used cameras. That was the only sensor that they knew how to use in the 70s and 80s. That followed naturally. In the 90s, some companies came up with these sensors called LIDAR – Light Detection and Ranging. It basically shoots a light or laser pulse and measures the range. These devices actually give you 3-D information directly; it’s not like cameras, where you just kind of see a picture or you have to set up two cameras to do some stereo thing.

NASA is just getting to that stage now. The industry in the early 90s developed this technology. You can see it on all the cars at Google and Uber Drives.
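
Editor's note: the pulse-and-range idea described above can be sketched as a time-of-flight calculation. This is a minimal illustration, not the method of any particular LIDAR unit; the function name and the 100-nanosecond echo time are assumptions made up for the example.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance to a target, in metres, from a pulse's round-trip time.

    Hypothetical helper for illustration only.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Assumed example value: an echo that arrives 100 nanoseconds after the pulse.
    print(f"{range_from_round_trip(100e-9):.2f} m")
```

Repeating this measurement while sweeping the beam across a scene is what yields 3-D points directly, rather than a flat picture.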

Host: I was going to say, most self-driving cars have some sort of LIDAR on top.

Uland Wong: Exactly. No NASA robot currently uses that. It’s a very low TRL technology.

Host: TRL?

Uland Wong: Technology Readiness Level. It means they haven’t developed it to the point where they’re comfortable flying it yet.

Host: Yeah, because I remember reading something I frequent on – there’s a subreddit of r/SelfDrivingCars. They’re always talking about how expensive those LIDAR sensors were for so long. And it’s only been fairly recently that that price has gone down that made some of this realistic.

Uland Wong: To be fair to NASA and space exploration though, that technology does not carry directly over to space environments, like the hard vacuum of space, the radiation, thermal and heat – all those things are difficult for a sensor to perform well [in]. So they have to develop the sensor to handle those environmental conditions before they can fly it. And that’s been difficult.

Host: It’s really cold.

Uland Wong: There are several groups at NASA, Goddard, JPL – a lot of people are looking at lasers for robots right now. We are too.

Host: When you’re working on robotics, are you looking at the environments and understanding those environments? Are you doing some sort of analog for here on Earth for what people may expect on an asteroid or on Mars or anywhere?

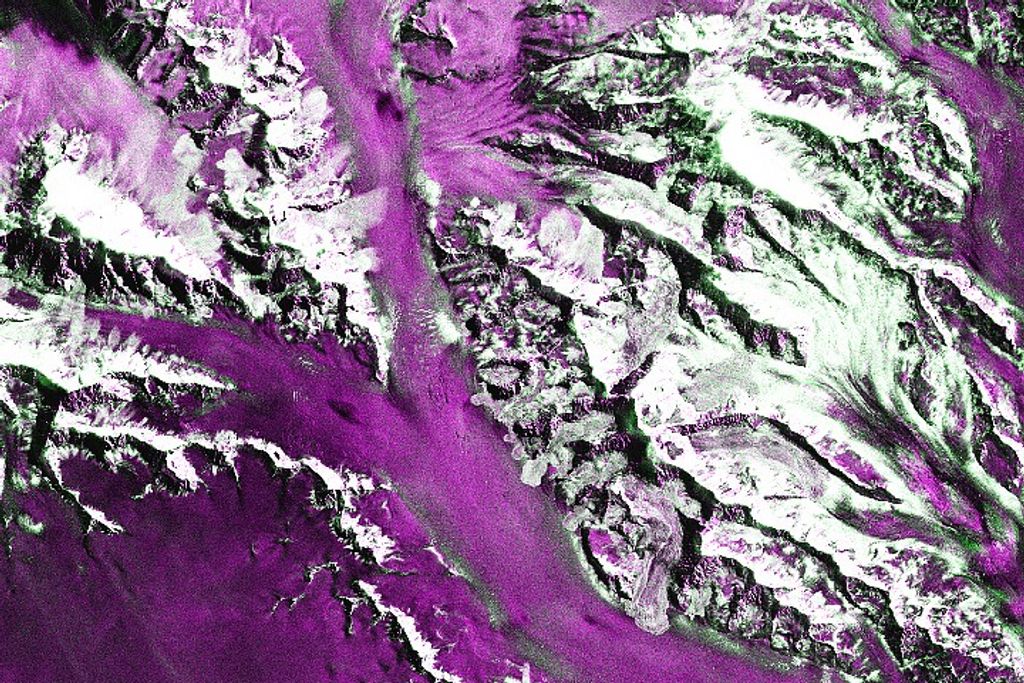

Uland Wong: Yes. We try to recreate some aspects of the environment here in a lab or sometimes we can find them out in nature on Earth too, so that we can test things like instruments or sensors. One of the analogs that we are working on now is an appearance analog for the poles of the Moon that we’re developing at Ames. The poles of the Moon are a very interesting environment optically. You can go there and it will look like nothing else in the solar system.

The Sun comes in when you’re at the polar regions of the Moon at a very oblique angle because the Moon rotates in the same plane as the solar system. So the Sun comes in at a very low angle and there’s no atmosphere to scatter the light. All your lighting is very direct, from a point source, and you get these very long black shadows. It’s a very curious environment. You’re unlikely to find it anywhere on Earth, because Earth has atmosphere. What you get are dark shadows and very bright regions that are directly illuminated by the Sun. The Italian painters in the Baroque period called it chiaroscuro – alternating light and dark. It’s very difficult to be able to perceive anything for a robot or even a human that’s going to analyze these pictures because cameras don’t have the sensitivity to be able to see the details that you need to detect a rock or a crater. It just looks like a black spot. It’s hard to tell if it’s a shadow or there’s actually –

Host: Something black there.

Uland Wong: Yeah, you might fall into something. We’re building these analog environments here and lighting them like they would be on the Moon with solar simulators, in order to create these sorts of appearance conditions. We take a lot of pictures and use these sensors. We are doing two things. One, we are working on algorithms, so the robot can safeguard itself in these environments. And two, we’re collecting all this data so that we can train people to interpret it correctly and command the robot where to go.

Host: So as a bit of a callback on one of our earlier podcast episodes, we had Terry Fong over and he was talking about how–

Uland Wong: He’s my boss.

Host: How nice. He was talking about how looking at environments and doing 3-D mapping and even working with some VR stuff, but then came down to the robot needed to understand that whole 3-D environment. It’s not just a camera or a picture. It needs to, in a 3-D world, map it and understand where its place is. Then that was that evolution over time of the technology and then realized, “I don’t need the VR stuff per se, but we really need to know what the terrain is.” Is that some of the stuff that you’re looking at?

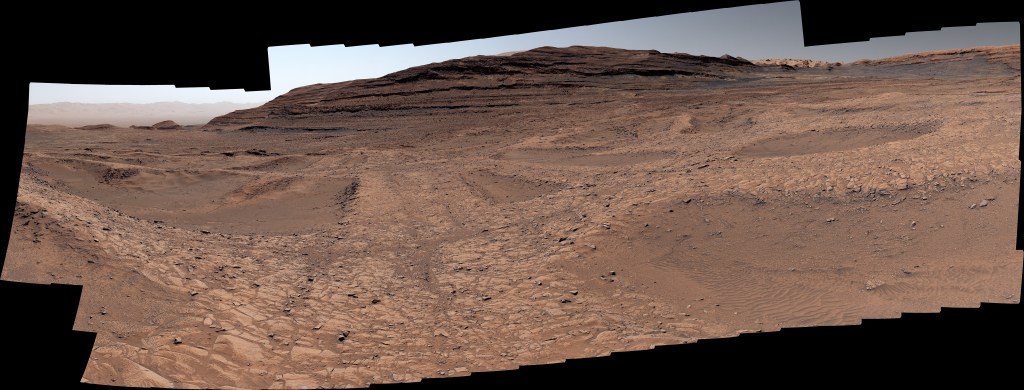

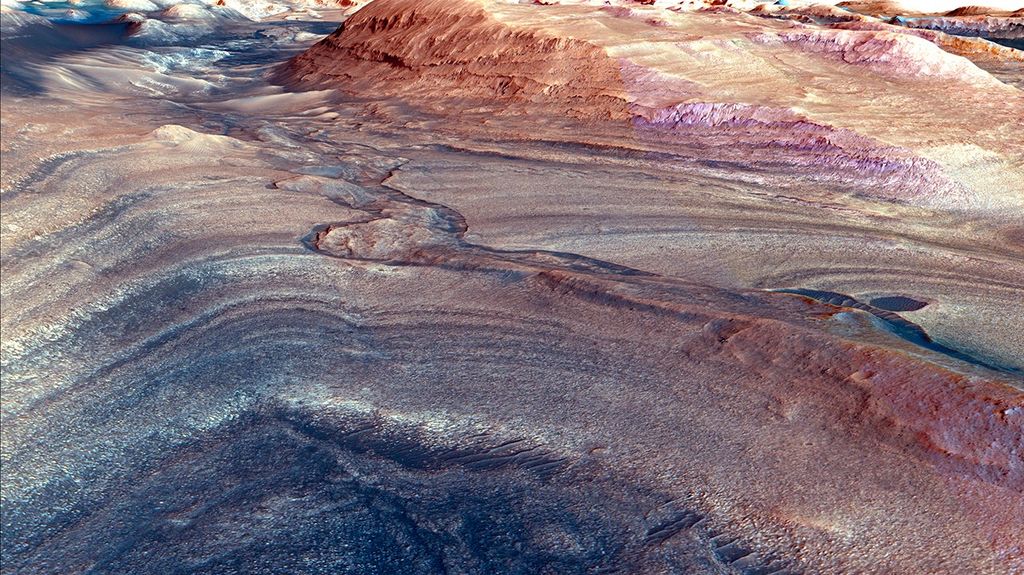

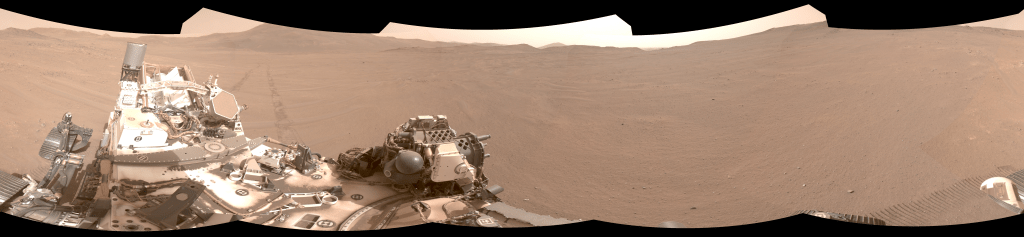

Uland Wong: Exactly. What he was talking about is pretty well understood on Mars and on Earth. These are simpler, easier environments optically because of atmosphere. When you have atmosphere, light scatters, shadows aren’t as harsh. You get this sort of ambient light that you can see in the dark areas and cameras will operate a robot fairly well in these conditions. The Mars rovers just use stereo vision and they’ve used it for 20 years and they work perfectly fine. A lot of robots on Earth operate in the daylight well using stereo cameras. We don’t know whether stereo cameras will work in the polar regions or actually on any airless body. NASA has never landed a rover and navigated in the polar regions of any airless body.

One of the missions that’s in “phase A” right now, Resource Prospector, that I have the privilege of working on, might be the first mission to land a robot and navigate in the polar regions of the Moon. And in order to do that, we have to figure out what these polar regions are going to look like on the surface. Nobody’s ever been there. We have to use a combination of physics to predict what it looks like, and also some observations from orbit that we can get an inkling of what these environments might be. I mentioned the dark caves previously, we know from operating in those kind of conditions and bringing your own light where it’s dark, there are operational and navigational considerations that we’re trying to get more of a grasp on.

Host: As you start building these technologies, creating new sensors, trying to figure out how to do this, are there places on Earth that you’ve tested, like in caves? Or is it for the most part that you have to do algorithms, use a supercomputer or test it in simulations? Or is it a mix of both?

Uland Wong: You exactly described what we’re doing.

Host: All right. Knocked it out of the park.

Uland Wong: For a lot of missions, you can find an analog naturally and test that. The other option is to create an analog in a lab environment if you can’t find that. So we chose that route – to create one. We make fake Moon terrain with fake lighting in a lab. But the other part of that is you can only shovel so much dirt. We are also leveraging physics based rendering. We’re trying to photo-realistically recreate the illumination in these environments. This allows us to use a supercomputing [sic] to render a bunch of images using models that we have decent confidence in and this gets us a lot more information than we would taking pictures in a lab with three people, for example.

Host: You’re talking about the polar Moon surface, there’s another call back to another episode we did with Dan Andrews who was talking about some of the work that he was doing over there. How it’s such a unique environment because if you have a crater at the polar spot on the Moon, the light is coming at such an angle – long shadows. But inside those craters, some spots in there have never seen light, ever. Powder almost becomes silky and really fine. Is that what you’re simulating in the labs?

Uland Wong: Yes. In my case, we’re simulating how they would look to the sensors so that we can navigate around there. But these are very interesting environments and nobody actually knows exactly what that powder is going to be like. That powder could be rock hard, and I’ve heard other people argue that it’s soft and fluffy, for example. From the point of view of cameras, that part doesn’t matter very much. The other things that matter include how bright the soil is – we call that regolith actually – how reflective it is. Those have repercussions to optical sensors.

Host: The robot doesn’t have to necessarily touch it, but it’s got to see it and understand how it’s going to –

Uland Wong: Yes. And if it shows up as something like black ice, humans find it very difficult to drive in environments where there is black ice. It’s very cold there, and there might be moisture that’s been frozen in and we don’t know how that behaves optically so we’ve got to simulate some of these properties in order to figure out what our operations will be.

Host: You talk a lot about the robots and the sensors and sometimes that also, as we get further and further away from Earth, there’s a time delay that we always have to deal with. And then that ends up – called the speed of light – but then that often will pivot into looking at intelligent systems and ways that humans and robots can work together with some smart software where the robot can actually handle quite a bit on its own, but then use human interaction to help refine it. Does that play into some of the work you’re doing?

Uland Wong: Yes, actually. The Moon is a lot closer to Earth than Mars but the communication setup that we’re using actually introduces, I’ve heard, somewhere around a 30 second latency. We’re working with an operation right now where we think we’ll command the robot to drive about four meters and it will drive a four meter path and then stop. And along that path while it’s driving, the human is not going to be “babying” the robot. The robot will drive on its own, and it will sense if the path goes through an obstacle that’s unsafe or it’s deviating because it’s slipping and the robot will stop itself and inform the human operators. There are some “automated safeguarding” we call it. But it totally won’t drive itself. The human will command in small steps where the robot is going to go. We intend to have human operators and scientists who will really look for where the best paths are and where these interesting goal locations will be.
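
Editor's note: the stop-and-go driving with automated safeguarding described above might look roughly like the sketch below. Only the four-meter segment length comes from the conversation; the step size, the slip threshold, and the two sensing callbacks are hypothetical stand-ins, and this is not the mission's actual software.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DriveResult:
    distance_driven_m: float
    stopped_early: bool
    reason: str


def drive_segment(
    hazard_ahead: Callable[[float], bool],  # hypothetical: is the path unsafe at this position?
    slip_ratio: Callable[[float], float],   # hypothetical: estimated wheel slip at this position
    segment_m: float = 4.0,                 # commanded distance mentioned in the interview
    step_m: float = 0.5,                    # assumed distance between safety checks
    max_slip: float = 0.3,                  # assumed slip threshold
) -> DriveResult:
    """Drive one commanded segment, stopping on its own if something looks unsafe."""
    driven = 0.0
    while driven < segment_m:
        # The robot checks its own safety; no human is in the loop during the segment.
        if hazard_ahead(driven):
            return DriveResult(driven, True, "obstacle on path")
        if slip_ratio(driven) > max_slip:
            return DriveResult(driven, True, "excessive slip")
        driven = min(segment_m, driven + step_m)
    return DriveResult(driven, False, "segment complete")


if __name__ == "__main__":
    # Clear terrain: the segment completes and the robot waits for the next command.
    print(drive_segment(lambda pos: False, lambda pos: 0.05))
    # An obstacle appears two meters in: the robot halts and reports back.
    print(drive_segment(lambda pos: pos >= 2.0, lambda pos: 0.05))
```

Either way, the result goes back to the operators, who choose the next short segment. Keeping each command short limits how far the robot can travel on stale information when the communication delay is tens of seconds.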

Host: On the stuff that you’re working on and as you’re looking at these sensors and the technology and trying to perfect them or just for future campaigns, missions – what do you see, five years from now, what are you seeing in the future of where you’re hoping some of this work is going to lead to?

Uland Wong: My first hope is eventually we will have robots that can operate in these polar conditions and that’s a first step to robots operating in caves on other planets, like on Mars. They recently found these holes on Mars that are sections of collapsed lava tube, so one day a robot might go in there, into these total dark environments that have never seen light. And the work that we’re doing here is going to lead to that. My hope is that, in the future, robots will go past daylight operations in atmospheric environments to really the toughest, most extreme environments. In order to do that, the robots need to be able to perceive better. That’s where the leap is needed.

Host: Yeah, because when you think about all the rovers and the things that have been landed on Mars thus far, we’re literally and figuratively just scratching the surface. And there’s a whole lot more on that planet to look at.

Uland Wong: Some theorize that underground is where all the life is going to be, all the extremophiles. When you’re underground, you don’t have the massive temperature extremes. That might be where liquid water has been holed up. So underground is very interesting. In the polar regions, in those “permadark” craters, that’s where the water is, so we’re going to those locations and they’re all extreme.

Host: Even looking at that, that throws in a whole host of other questions of when you’re building a rover that can dig a little bit or go into caves, then you’re thinking about the battery packs. You’re in a cave, there’s no solar energy to “battery empower” the rover. It just gets exponentially more and more difficult as you go down there.

Uland Wong: Even in the permanently dark craters, there’s no sunlight for battery, so our robot is going to need to manage its own –

Host: Recharge and come back.

Uland Wong: Yes, exactly, manage its battery while it’s in the dark regions and then be able to come back because if you estimate how much power you have wrong, then you’re going to die in the dark regions and never make it back to the sunlight.
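
Editor's note: the battery reasoning above can be illustrated with a simple energy-budget check. This is a minimal sketch under made-up assumptions (a constant energy cost per meter and a fixed reserve); a real rover would also have to account for terrain, heating, and instrument loads, and none of the names or numbers here come from the mission.

```python
def safe_to_advance(
    battery_wh: float,              # energy currently in the battery (assumed units: watt-hours)
    distance_to_sunlight_m: float,  # how far the rover already is from a sunlit spot
    step_m: float,                  # length of the next proposed drive into the dark
    wh_per_m: float,                # assumed constant driving cost per meter
    reserve_wh: float,              # margin to keep in case the estimate is wrong
) -> bool:
    """Return True if the rover can take the next step and still get back to sunlight."""
    energy_for_step = step_m * wh_per_m
    # After the step, the trip back is longer by the length of that step.
    energy_to_return = (distance_to_sunlight_m + step_m) * wh_per_m
    remaining = battery_wh - energy_for_step - energy_to_return
    return remaining >= reserve_wh


if __name__ == "__main__":
    # Hypothetical numbers: 40 m into the shadow, 1 Wh per meter, 20 Wh reserve.
    print(safe_to_advance(100.0, 40.0, 4.0, 1.0, 20.0))  # enough margin to continue
    print(safe_to_advance(60.0, 40.0, 4.0, 1.0, 20.0))   # time to turn around
```

The reserve term is the guard against the failure described in the conversation: if the power estimate is wrong, the margin is what lets the rover still reach sunlight.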

Host: Obviously, a lot of the stuff that you’re working on, computer science, into robotics – but obviously, we’re sending these robots and developing the software and these instruments for science. At the end of the day, we’re going there to see what can we learn that we don’t learn. How does that interact with your work? Are you working a lot with the scientists that actually have a hypothesis that they’re trying to pull together and you’re just helping them pull together instrumentation to make it happen?

Uland Wong: A little of the above. Not all of the above. The scientists do have an idea of where they want to sample, for example. They have spectrometers, drills, other sensors for Resource Prospector. They have ideas [of] where they want to use these sensors and as a roboticist we’ve got to make sure the robot can get to these locations safely. And we’ve got to make sure that they get to multiple such locations. So, all that ties in together and we definitely work with the scientists. I know a lot of them and they’re my friends.

Host: Going forward, is there one big game changing technology that you guys are hoping to pull together or something for future missions?

Uland Wong: In my opinion, Resource Prospector itself is the game changing technology. It’s an incredibly low-cost mission. NASA’s not tried anything this courageous in a while. It’s going to go to the polar regions of an airless body, which is a first, and it’s going to drill and it’s going to mine water. That’s pretty game changing because if they do find water there, imagine all the possibilities with fuel and Moon bases – all that stuff.

Host: Excellent. For folks who are looking for more information on the stuff that you’re working on, be it robotics or Resource Prospector, can they just go to NASA.gov?

Uland Wong: Exactly. You could also search for the Intelligent Robotics Group at NASA Ames, Resource Prospector, as you mentioned, and Game Changing Development which funded some of this work as well.

Host: Excellent. So for folks that have questions for Uland we are on Twitter @NASAAmes and we’re using #NASASiliconValley. Thanks for coming on over.

Uland Wong: Awesome. Thanks for having me.

[End]