A conversation with Terry Fong, Senior Scientist for Autonomous Systems and Lead for the Intelligent Robotics Group at NASA’s Ames Research Center in Silicon Valley. For more information on the Intelligent Robotics Group, visit: https://ti.arc.nasa.gov/tech/asr/intelligent-robotics/

Transcript

Matthew C. Buffington (Host): Welcome to the NASA in Silicon Valley podcast, episode 24. As our first conversation of 2017, this is also being released during the Consumer Electronics Show in Las Vegas. There are a number of NASA booths at CES right now showing off NASA technology and how it is not only launching us towards the journey to Mars, but also how that technology can be transferred and used by the private sector. In attendance at CES will be our very own guest this week, Terry Fong, who leads up the Intelligent Robotics Group at NASA Ames. Terry has been involved in a wide array of NASA technologies that are piquing the interest of popular culture at the moment. This includes virtual reality, 3D mapping, autonomous vehicles, and even artificial intelligence. If you happen to be walking around the CES show floor, be sure to give him a shout out at the NASA booth. Now before I spoil too much, here is Terry Fong.

[Music]

Host: Tell us a little bit about yourself. Tell us how did you join NASA, how did you come to Silicon Valley, what brought you here?

Terry Fong: Oh. Well, you know, I joined NASA because ever since I was a kid I wanted to make airplanes and rockets and that kind of stuff. NASA has always been my dream job. So, I went to school. I decided I would study aeronautics. Then I got into robotics and space robotics in particular. And then afterwards, you know, I landed here.

Host: Was it always here in California, or where did you grow up?

Terry Fong: Yeah. So I grew up in Chicago. I went to school in Boston. I came out here after I got my master’s degree. Worked at Ames for about four years. Left.

Host: Really?

Terry Fong: Yeah. Left for, boy, 10 years.

Host: Okay.

Terry Fong: I got a Ph.D., and I ended up coming back here.

Host: A lot of people come in as either doctoral students or something. Did you just apply to a job online, or how did that happen?

Terry Fong: Well, when I came here the first time, it was because I knew somebody here. I came here with a master’s degree. And I worked here for four years. And then after I left and then decided to come back, I came back because a good friend of mine was running the robotics group here at the time. And we’d always wanted to work together. So, you know, I came back here. We worked together for I guess about three months. And he says, “Oh, guess what? I’m leaving. Take over the group.”

Host: [Laughs] Surprise.

Terry Fong: Surprise. No, but it’s been great. It’s been really great ever since.

Host: Excellent. What were you working on when you first came on over?

Terry Fong:When I first — when I first came here at that time, the robotics group was working on several different things. Part of that was basic research in mobile robots. But the thing which really — I think sort of the claim to fame of the group — this is back in the early ’90s — was the use of virtual environments to really monitor, to understand what’s going on with robots, to use these interactive, 3-D graphical environments. It could be in a head-mounted display. It could be on a computer screen. It could be on a projected stereo display. But basically the use of interactive 3-D graphics to understand what’s going on with robots.

Host: I came across an Oculus subreddit, and it had these old photos from NASA Ames, and it actually came out of a historian’s book of people with these head-mounted displays. It was like the early stages of that. Was that the kind of stuff that you were working on?

Terry Fong:That was exactly it. And, you know, NASA Ames was at the forefront of the — at least one of the prior waves of virtual reality.

Host:It was fits and starts through the ’90s.

Terry Fong: Well, you know, I think a lot of technology goes through waves. You try something, people get very excited, then they get very disillusioned because it doesn’t quite work out, and there are these problems. And then it goes away for a while, and then it comes back again. You know, we see this over and over. You think about all the devices we use now, all of our tablets. Well, it’s not just today. I mean, they’ve been around for a long time. Way back in the day there was the Apple Newton.

Host:Yes.

Terry Fong:You know? This was back in the late ’80s, early ’90s. It didn’t work out so well I guess for Apple. The next time around it worked out really well. The same thing is true, I think, for the whole area of virtual reality. Back in the ’90s, there was so much excitement, so much hype. You know, at that time we really got into it because we were interested in using technology like that to work with robots. And we ended up working with lots of different people. There was a huge set of these trade conferences at the time. And we even started a virtual reality users group.

Host:Really?

Terry Fong:Yeah. Back in the day, there used to be these user groups —

Host:Before the forums.

Terry Fong:Before the forums, yeah. I mean, they were sort of hobbyist, birds-of-a-feather kind of groups. And we had one of those. And it was a lot of fun. It was also really great to see how this — at that time, you know, virtual reality was put into place. But then a few years later it all sort of died off. And part of it was that people I think realized that the graphics weren’t good enough. They were not high enough resolution. It was certainly not fast enough. The head-mounted displays were very heavy.

Host:There was probably latency and stuff.

Terry Fong:There was a lot of latency. So you move your head, and then sometime later the graphics update. You know? So it’s not really the kind of thing that makes it really —

Host:It’s not exactly giving you presence or immersion.

Terry Fong:Well, the thing is that you can get presence. I tell people you can get presence with an 8-bit videogame. It depends on how invested in it — you know, how much you’re into the game. And as soon as you’re into it, you don’t notice the details like, “Oh, it’s blocky graphics,” or it’s very slow. It’s the fact that you are really in that world.

Host:Another thing that always caught me off-guard is when — almost like your lizard brain takes over, when you’re either standing on a ledge or you’re doing something. And when you have that first moment of you know you’re in a fake world, but yet your stomach is pulling like you’re falling; that was when — it was like, “Oh wow, this is neat.”

Terry Fong:Yeah. That was the case for us back then, too. The graphics we were using were — they were pretty poor by today’s standards. But I’m sure 10, 15 years from now we’re going to look at today’s technology and we’re going to say, “I can’t believe you guys got by on that.”

But the thing is that, you know, that was a wave of virtual reality. NASA Ames was a real leader at that time. And the Human Factors division was doing lots of real sort of basic, fundamental core research that has led to a lot of things we see today. And the robotics group at that time was interested in using virtual reality for robots. And that was really the claim to fame of the group.

So back in the early ’90s when we started looking at virtual environments, we were interested in trying to solve the problem of how do you allow people to operate robots when you have a very poor communication link between the human and the robot? So the idea is that if you can’t send a lot of data, lots of high-resolution images, or 3-D models, or even just basic information about what’s going on — instead of trying to send all this very large data across a very small pipe, very limited bandwidth communication link — or in the case where you have a very time-delayed system —

You know? Instead of doing that, try to use a simulated 3-D world locally. So where the human is. And then you update that, and you only send very small bits of information back and forth. For example, instead of trying to just joystick the robot, you work locally in this 3-D world with the robot. And when you’re satisfied that you have the commands right, only then do you send off the very limited things such as, “Oh, go to XYZ point in space,” you know? Or, “Turn left.”

So you operate with a robot in this kind of local simulated way, and then based on the results of that, then you command the robot and you don’t have to worry about bandwidth limitations, you don’t have to worry about delay. It also allows you to take advantage of something that NASA does really well, which is to acquire lots of high-resolution information about remote environments.

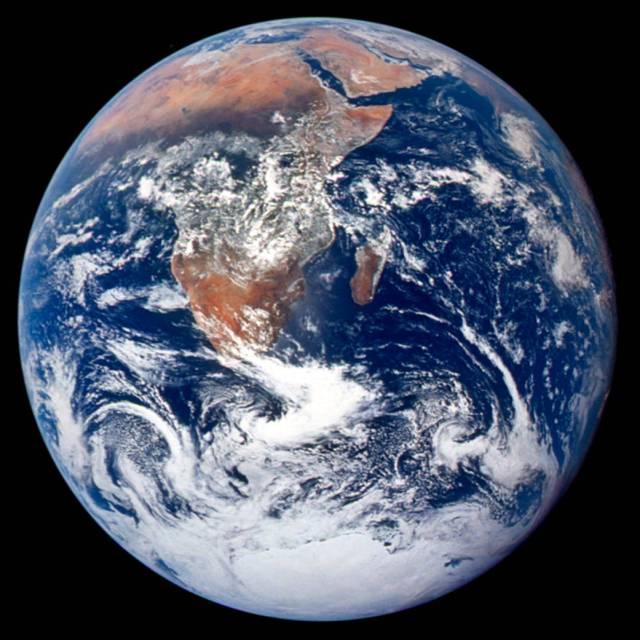

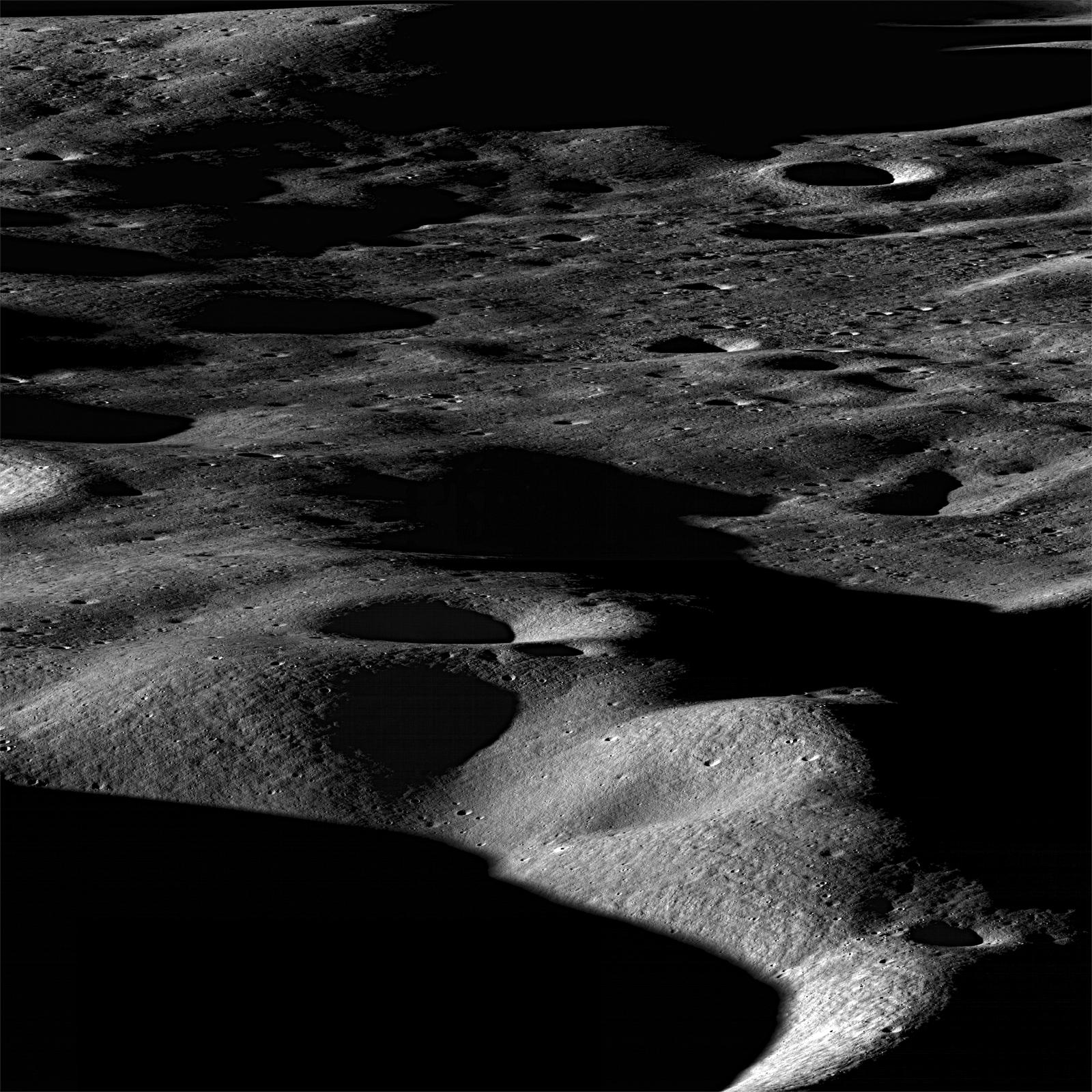

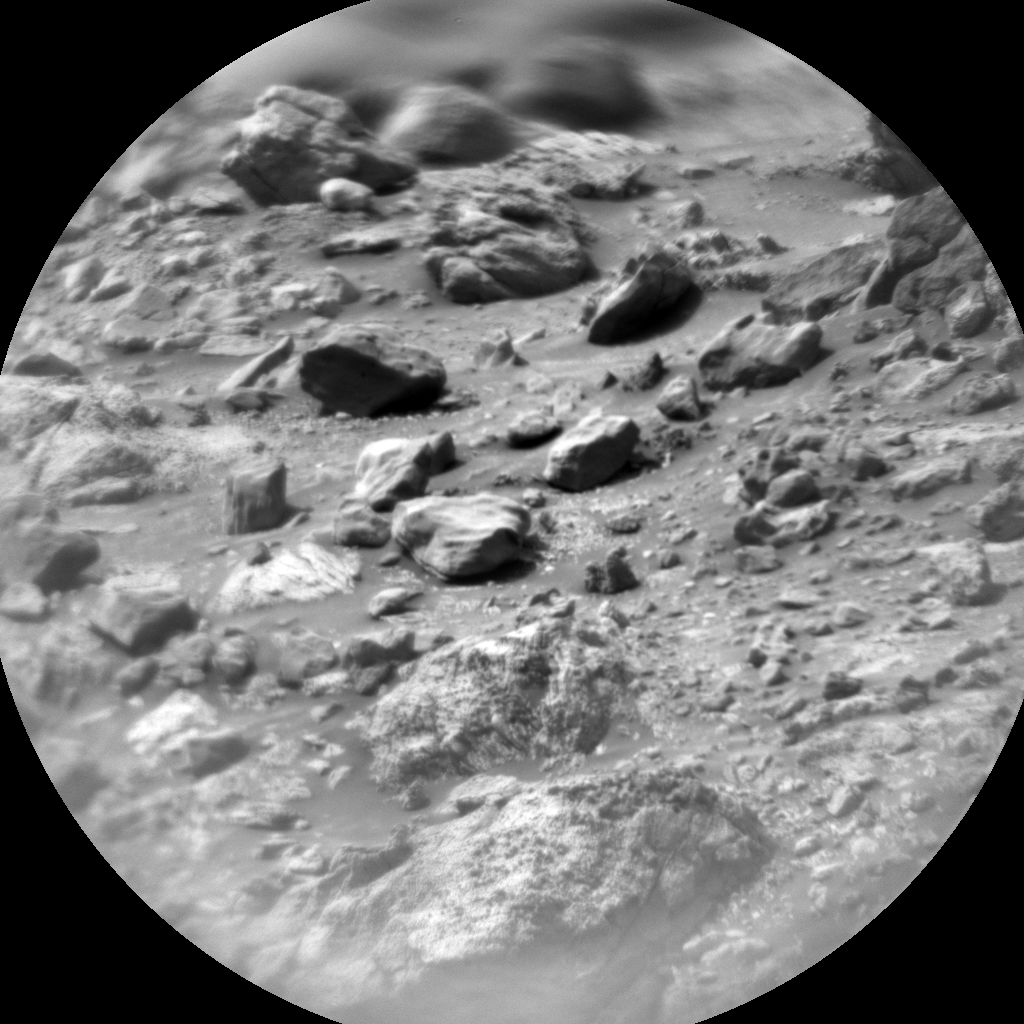

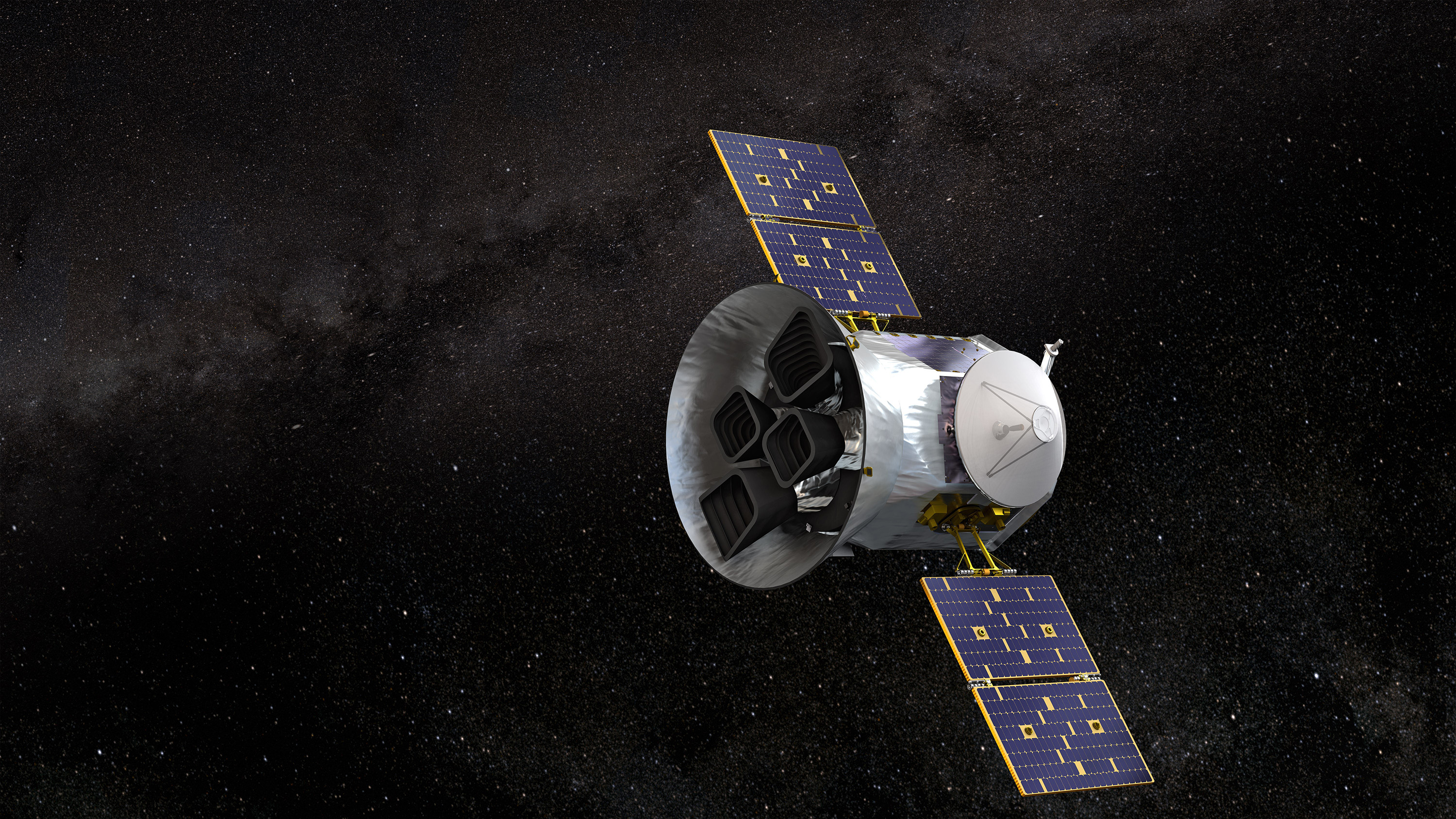

We have these satellites that orbit the moon and Mars and return incredibly high-resolution 3-D data. So, we don’t need to send that information over and over and over and over again. We can build that environment once, as long as the environment doesn’t really change. And it’s not like the moon and Mars change the same way we have here on Earth. So we can build sort of the background.

Host:Okay. So you’re sending the essential data, not necessarily stuff that we already know.

Terry Fong:Exactly. So, the updates, the things that we care about sending over our, as I said, limited communication link, you know, are only those things which change. So, the deltas basically. And everything else — all the background, the base environment, the 3-D terrain — anything that we can just model once, we model once. And then we are smarter that way so we don’t have to keep sending it all the time. And that was really the basis of the work that we did back in the ’90s, was to try to use these 3-D virtual worlds to allow us to better understand what’s going on with the robots that are operating, you know, in faraway, difficult, hazardous environments.
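The "send only the deltas" idea described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the software the group used: the state fields (`x`, `y`, `heading`, `battery`) and the functions `compute_delta` and `apply_delta` are hypothetical names chosen for the example.

```python
def compute_delta(previous, current):
    """Return only the fields that are new or changed since the last update."""
    return {key: value for key, value in current.items()
            if key not in previous or previous[key] != value}


def apply_delta(local_model, delta):
    """Merge a received delta into the operator's local copy of the state."""
    updated = dict(local_model)
    updated.update(delta)
    return updated


# Hypothetical robot state. The terrain model is built once and is not resent.
last_sent = {"x": 10.0, "y": 4.0, "heading": 90, "battery": 0.82}
now = {"x": 10.5, "y": 4.0, "heading": 90, "battery": 0.81}

delta = compute_delta(last_sent, now)          # only the changed fields
operator_view = apply_delta(last_sent, delta)  # local model catches up
```

Only two of the four fields cross the link in this example; the unchanged position and heading values stay in the local model on the operator's side.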

Host:And I was thinking, even if that small pipe became a really big pipe where you can send huge packets of data, if you’re on Mars or Jupiter, you’re further out, there’s the speed of light. You’re going to have a time delay. And so, does this also kind of morph into almost like artificial intelligence or even like an autonomous kind of aspect to it that you’re working on?

Terry Fong:Yeah. One of the other things that we were trying to do at that time was to make this whole concept work. You’re not trying to control the robot in real time. You’re not trying to joystick or control it the way you might do like an RC car or today a drone. But instead, you are talking to the robot in some sense at a higher level. You’re trying to tell it, “Go carry out this task.”

So rather than me saying, “Hey, Matt, I want you to take two steps forward, turn right five degrees, move forward one-half step, then reach your arm up exactly this high” — you know, I might say, “Hey, Matt, can you get me that cup of coffee?” You know, it’s a different level of interaction. And to do that, it means that you have to have some ability to operate autonomously. You have to be capable of understanding what does it mean to get a cup of coffee, and how do you do that action?

And so we were trying to focus on ways that would allow the robots to also act in a similar manner, so that we could — instead of having to send all these low-level, detailed, high-bandwidth commands, we could send single higher-level ones. And so that gets into the whole question of how do you make a robot more autonomous? How do you make it capable of operating independently, reliably, robustly, when you don’t have the ability to control every little tiny action?

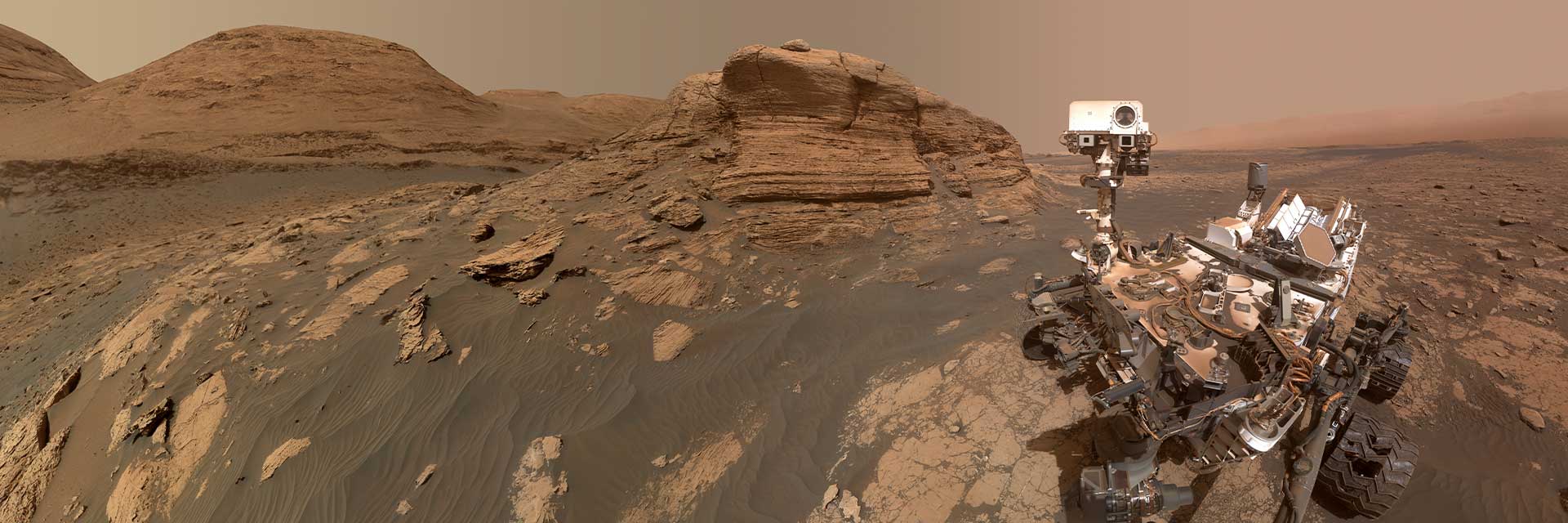

Host:So, how does some of this leverage into the stuff that you’re working on now? I believe NASA Ames had also done work either on rovers or different things to kind of use these concepts to actually put it in a thing that landed on — that’s either on Mars or on the moon or whatever, you know?

Terry Fong:Yeah. So, as I said, back in the ’90s we were interested in using virtual reality to work with robots. And at that time, virtual reality really was the things that most people think of as VR, the idea that you use a head-mounted display, and it’s not just that it’s head-mounted but it’s, you know, stereo display, so it looks like it’s a 3-D world.

Host:3-D, yeah.

Terry Fong: Yeah. It tracks your head position. So as you turn your head — or maybe track your body, and so as you move your body you also move in that world. That was a fully immersive VR experience. And you see that today in video games. But what’s interesting to us is that over the past, you know, 10, 15 years or so, we have found that, in working with robots, the essential thing is not necessarily the fully immersive, real-time, “I’m sitting in this weird head-mounted thing looking around the world.” But what’s important is the real-time, 3-D representation of the remote environment.

So we still use the same software pretty much that we did back in the ’90s to take 3-D information, to take robot information, and visualize it in a real-time, 3-D display. But we don’t put that into a head-mounted display. I mean, we can work with this on a tablet or a laptop. So the essential thing is not the immersive experience. The essential thing is the 3-D experience. We want to visualize the world in 3-D because robots, especially planetary rovers or free-flying robots, they operate in 3-D worlds. And so, for us, it’s not sufficient to look at a text display or a 2-D display. We want to look at a 3-D representation of the world.

So we’re still working with VR from the standpoint of we display a virtual robot in a world that was created from data. But we don’t try to put that into a VR helmet. We can. We can.

Host:You could.

Terry Fong:We can. But the essential part, the important part is that for us to understand what the robot is doing, whether we’re just monitoring it or trying to plan what it should be doing next, is to do that with a 3-D graphics display.

Host:Okay. And so, you’ve been doing a lot of the work of how that rover — basically how it has eyes, and ears for that matter, and how it can — if a rover is moving on Mars, it knows, “There is this rock in front of me.” It maps it all out, so it knows where it’s going and what it’s doing.

The cool thing about NASA is we get to work with these neat toys and come up with these big concepts. But obviously there’s other people in the world that have an interest in this, more of late; not necessarily back in the ’90s. Talk a little bit about what NASA is doing in terms of taking its technology and then also making it available for other people to pick up that baton and keep working on it.

Terry Fong:Yeah. I think one interesting thing to us is that the overall approach of using interactive, real-time 3-D graphics to understand what robots are doing is something that you see in many, many places today. Certainly within the robotics research community across the world, people are now using 3-D graphics to understand what the robots are doing.

I think one of the things that we here at Ames started way back in the ’90s was the idea that you could use this not just in the lab, but you could apply this to real-world problems. You could take robots out of the lab, put them in the real world, and better understand what’s going on. That’s one part.

The other part that we’ve really been using over the past several years is this whole notion of, with these interfaces that allow you to understand what’s going on with a robot, how does that allow the human to support the robot?

You know, this whole area of how do you team humans and robots for me, for a long time, has been focused on the wrong thing. People have thought of robots for many, many, many decades now as just these tools. It’s like a hammer. You pick it up, you use it. You have a remotely operated, four-wheeled mobile robot, you just joystick it. So it’s basically an extension of you. It’s a tool.

But as robots become more autonomous, as they have the ability to function by themselves, they’re not just tools. Just like our kids. We start off with our kids, they’re actually kind of tool-like when they start off. You tell them, “Hey, I want you to” —

Host:Go mow the lawn.

Terry Fong:Yeah, “Mow the lawn, go do this, go do that, go do that.” And it’s like, “Do, do, do, do, do.” But as they get older and become more independent, the interaction becomes more like you and I. I’m not going to sit here and tell you, “Hey Matt, I want you to drink your coffee by picking it up in this way.”

Host:Exactly.

Terry Fong:But instead, you know, “Hey, if you’re thirsty, go ahead.” And so, the whole dynamic, the interaction becomes more of a peer-to-peer kind of interaction, more of a partner-type interaction, where we’re treating each other as individuals that are self-sufficient that can do things, that can function autonomously. And in that situation, you know, the best way I can team, the best way that I can work with you, is to support you when you’re having problems.

Host:Yeah.

Terry Fong:So if you have a problem and you say, “You know, I don’t know quite how to solve this,” and if I have an idea of how to solve it, I’ll tell you. I’ll say, “Well, why don’t you try this?” And so, we’ve been trying to apply that same philosophy to working with our robots.

So, here at NASA Ames, for example, we’ve been developing a lot of robot navigation systems for a long time. Our planetary rovers, the K10s and the KRex, they can drive very well, most of the time, from point A to point B. They look at the world, they use their onboard cameras, their laser scanners, to figure out what’s safe and what’s not safe. We’ve tested those robots not just here at NASA Ames. We’ve taken them out to the field. We’ve tested on Devon Island in the Canadian Arctic. We’ve tested in Arizona at Black Point Lava Flow. In Washington state at Moses Lake Sand Dunes. A lot of different places where we’ve taken them out. These are places that are planetary analogs, so they’re similar in one or more ways to the moon or Mars. And for us it’s a way to really challenge ourselves to say, “Hey, can we make our systems work under more realistic conditions?”

But the overall approach we’ve had is this idea of supporting the robot in a way that we would support another human teammate. So, the K10 and KRex rovers, even though they’re very good in general terms of looking at the world, figuring out how to go from point A to point B, every once in a while, every once in a while they’ll have problems. They’ll get stuck. They’ll basically come to a point where they’ll say, “Well, I don’t know. Is this an obstacle or not? Can I turn left or right?” And they may just sort of spin and be stuck there. And if we don’t do anything, they could get stuck there forever.

So in those situations, the overall approach is, well, they do well most of the time, so we will only intervene, we’ll only provide assistance when they get stuck. And it’s just the same way that I think you and I would go through the world. And you say, “Hey, I’ve got a problem. Can you help?” I’ll help you at that time. I mean, you don’t want me sitting there, sitting behind you all the time, telling you, “Do X, Y, and Z.”
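The "intervene only when stuck" approach can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not the K10 or KRex navigation software: `drive`, `is_blocked`, and `ask_operator` are hypothetical names, and waypoints are plain coordinate tuples.

```python
def drive(waypoints, is_blocked, ask_operator):
    """Follow waypoints autonomously, asking a human only when stuck."""
    path = []
    help_requests = 0
    for waypoint in waypoints:
        if is_blocked(waypoint):
            # The rover cannot resolve this on its own, so it asks for help.
            waypoint = ask_operator(waypoint)
            help_requests += 1
        path.append(waypoint)
    return path, help_requests


# Hypothetical scenario: one waypoint is blocked and the operator
# suggests a detour for it.
blocked = {(2, 0)}
detours = {(2, 0): (2, 1)}

path, help_requests = drive(
    [(1, 0), (2, 0), (3, 0)],
    is_blocked=lambda wp: wp in blocked,
    ask_operator=lambda wp: detours[wp],
)
```

The human is consulted once for three waypoints; the rest of the drive runs without any operator input.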

Host: I’d imagine there’s also some advantage of letting the machine kind of work itself through it, as opposed to us micro-managing and being helicopter robot overlords.

Terry Fong:Yeah. Or a backseat driver. I mean, part of the advantage is that the robot makes as much progress as it can. It reduces the workload that you and I have to go through, because we’re not worrying all the time, “Oh my god, what do I do next?” but we’re letting the robot carry out something by itself. In the case of having robots that are delayed because of the distance that they are — so time delay in communications means that we can be more efficient in the way that they operate, you know?

Instead of looking forward and figuring out, “Oh, can I turn left or turn right,” and then carrying out that command, and then having to wait a long period of time because of the communication delay, we can just say, “Hey, go to this far off location,” and the robot will make much faster progress in driving, because it’s not waiting for the human in this long, say, time-delayed loop because of communications.
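A rough back-of-the-envelope model shows why a single high-level goal beats step-by-step commanding over a delayed link. The numbers are assumptions picked for illustration (100 short moves of 10 seconds each and a 20-minute round-trip delay), and `mission_time` is a hypothetical helper that charges one round-trip wait per command sent.

```python
def mission_time(drive_seconds, round_trip_delay, command_count):
    """Total seconds: time spent driving plus one round-trip wait per command."""
    return drive_seconds + round_trip_delay * command_count


DRIVE_SECONDS = 100 * 10      # assumed: 100 short moves, 10 s each
ROUND_TRIP_DELAY = 20 * 60    # assumed: 20-minute round trip, in seconds

# Step-by-step commanding: the operator waits out the delay for every move.
step_by_step = mission_time(DRIVE_SECONDS, ROUND_TRIP_DELAY, command_count=100)

# One high-level goal: a single command, then the robot drives on its own.
single_goal = mission_time(DRIVE_SECONDS, ROUND_TRIP_DELAY, command_count=1)

print(step_by_step, single_goal)
```

Under these assumed numbers, almost all of the step-by-step total is waiting rather than driving, which is the inefficiency the high-level command avoids.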

The other thing here, too, is that the robot being more independent is able to make sort of real-time, fast, reflexive, safe-guarding type actions that we ourselves couldn’t do if we’re sitting in the control loop. The idea that the robot can pick up, “Oh, there’s a rock right in front of me right now, and I don’t need to go ask Matt should I stop. I’ll just stop.”

Host:“No, I need to stop.”

Terry Fong:“I’ll just stop. Then I’ll tell him, and then he can figure out whether or not that’s something that we need to worry about.” It’s just like, you know, as I said earlier, letting our kids become more independent. In fact, I think if you want to summarize it, my mission is to let my robots grow up in the same way that my kids grow up. I want them to be independent. I want them to go off and see the world. I want them to be off doing things by themselves, but still asking me when they have problems —

Host:“How do I” —

Terry Fong:— “How do I get through this?”

Host:Excellent. We’ll actually have this episode out in early January. I know you’re heading over to Las Vegas to the Consumer Electronics Show. We were talking how these 3-D environments, it’s awesome and great for rovers; but man, that’s really good for self-driving cars. So talk a little bit about what you’re going to be showing off or talking about over at CES.

Terry Fong:The things that we’ve been trying to do here at NASA Ames in the robotics group have really focused on three core ideas. One is the use of real-time 3-D graphics, you know, virtual reality-type interfaces to understand what the robot is doing in the remote world.

Number two, the idea the robot is more autonomous, more independent and can function by itself, but sometimes will need help. Which leads to the third thing, which is how do humans and robots team together? When do humans support the robot by providing assistance or guidance, or just that little bit of information the robot needs to get through a problem. Those are things that have worked very well for us with our space robots, but we’ve also observed that it can be used in lots of other areas.

And for the past year and a half, we’ve been working very closely with Nissan Research Center, Silicon Valley — it’s just down the road from here, about five minutes away from NASA Ames — to apply that same set of things, those three things – 3-D graphics; autonomous system, you know, autonomous robotics software; and human-robot teaming — to autonomous vehicles, to these self-driving cars that Nissan is working on.

I think the really fascinating thing here is that we see today a lot of companies — you know, not just Nissan, but —

Host:I was going to say, because this is open source stuff. This is stuff that NASA has worked on, and now it’s open for any company that wants to join together.

Terry Fong:So what Nissan has been working on with us — but I think this is the same thing that would apply to just about any other system out there — are those three ideas. The idea you can use 3-D graphics, that you can have a robot or a car be very autonomous, but that you can use humans to help out these systems —

Host:Help nudge it along.

Terry Fong: Yeah. Help move it along when there are problems is something that I think is very powerful. The whole notion of, you know, a team is better than an individual. As good as we can make robots, or as good as people might make these autonomous self-driving cars, the reality is there will always be situations that are just a little bit too difficult or unexpected, situations that you couldn’t preplan, and those are the kinds of situations that humans are really good at figuring out.

You know, the idea that if you have a planetary rover and it gets stuck, and it’s not clear — do you turn left, do you turn right? But with a little information, the human can say, “Oh yeah. No, actually you need to go straight.” That same approach can be applied to self-driving cars. You know, cars may have difficulty as the car, for example, is approaching a construction site. Something that’s unusual. You don’t expect normally that you’re driving down the road to run into a situation where the lane in front of you is blocked because there’s a backhoe there.

But as humans we’re taught, you know, we’ll drive up, we’ll approach it, we’ll assess what the situation is using our human thought, our human capability to assess unusual things. And then we’ll decide, “You know, in this situation it’s okay to drive on the wrong side of the road for a short period of time, to drive around, say, a construction site.”

Host:It’s kind of like, let the robots do what they’re really good at, and humans do what they’re good at. And I’d imagine that over time, as it gets perfected, that amount of human interference over time gets less and less and less, because the machine will still learn. But it’s like that team effort, doing what we’re good at.

Terry Fong: Yeah, and we hope that that’s the kind of thing that we see in all kinds of situations. I’ve talked a couple times about, you know, kids. And I think that when we as parents have kids, they start off, there’s a lot of interaction. We’re doing a lot of hand-holding. As they get older, we do less and less hand-holding, hopefully. Maybe a little bit less frequent and in different kinds of levels.

But, you know, even through the rest of our lives, they’re not fully divorced from us. We’re still there to lend a hand when needed. And I’m convinced that robots in the future, that self-driving cars in the future, that autonomous toasters in the future will be like that, you know? We want them to be successful. We want them to be able to operate independently, autonomously, reliably. But when they have problems, you know, we want to be there to help because together we can do far more. We can be more successful than just, you know, fumbling our way through it alone.

Host:Yeah. It’s almost like you’re never really taking humans out of the loop. You’re just maybe just changing up that loop a little bit, you know, over time.

Terry Fong:Yeah. Exactly. I think that it’s been a very powerful thing for us in NASA, trying to think this way, of how do we go from using robots as tools to making them more as our partners. And part of that is because we’re trying to make the systems be more productive. We’re trying to achieve more complex, greater goals with the robots that we use in our missions. And part of that means we have to add more and more autonomy to our robots, more and more autonomy to our missions.

I’m convinced the right way to do that is through teaming. It’s not just, “Hey, I’m going to make something that’s so great I can just fire and forget it.” But rather that I’m going to make something that is more capable, but that means it’s going to have to deal also with more complex situations and things which I can’t fully predict. And the right way to do that is not to say, “Hey, I don’t know what’s going on. I’m going to stop,” but rather, “I don’t know what’s going on, so I’m going to ask a question. I’m going to ask for help.”

Host:And that makes sense, especially considering NASA — you know, when it takes months to get all of the New Horizons data back from Pluto, you have these huge time delays which we have yet to figure out how to fix that space-time continuum. The robots that we’re sending far out are going to need to learn to work on their own, because we’re not going to be able to babysit them.

Terry Fong:Yeah. And I think that’s the real key here. We don’t want to have to babysit our robots. I would think that people who are working on self-driving cars don’t want to babysit their cars. You don’t want to be the backseat driver. I mean, if you think of it, if you have a self-driving car, part of the goal is to make it so that you don’t have to drive, okay? And if not driving means, “Oh, I’m just going to sit in the backseat and watch all the time, and be the backseat driver all the time,” that’s really not a whole lot better than driving, right?

Host:Yeah, I can imagine the anxiety levels.

Terry Fong:Right? On the other hand, if you’re driving a self-driving car and it tells you, “Hey, guess what? I’ve been driving now for the past 10 hours, but I’m a little confused here, or I’m having a little problem” —

Host: The construction signs — or any manner of complication you could think of.

Terry Fong: Any manner of complication. It’s like, you know, it’s always far better, I think, you know, when you go on a car trip to —

Host:“Am I good?”

Terry Fong:Yeah. Well, to go on a car trip when it’s not just you in the car, but it’s you with someone else.

Host:Yeah, totally.

Terry Fong:And I think that as we move forward and we have these vehicles that are more autonomous, I like to think of them as really being, you know, our copilot, or that we’re going along with them, and it’s not just us being taken for a ride, but we’re going someplace together.

Host:So if anybody wants more information on autonomous systems and some of the stuff you’re working on, I’m guessing NASA.gov?

Terry Fong:Yeah, NASA.gov is a great place. You can also take a look, the group that I run at NASA Ames, the Intelligent Robotics group, we also have a website, IRG, Intelligent Robotics Group dot ARC, Ames Research Center, dot NASA.gov.

Host: Excellent. So, as people see stuff coming up from CES, hear more stuff about autonomous driving, feel free to reach out. We are actually on Twitter @NASAAmes, and we are using the hashtag #NASASiliconValley. This is awesome, Terry. This is exciting stuff.

Terry Fong:Yeah. I mean, I’m thrilled to death by where we are today. I just love the fact that robots are becoming more capable. I want to see them everywhere. I think the future is robots everywhere. So, the question is, how do we make that happen sooner, and how do we make that work in a way that just makes it better for all of us?

Host:Anybody who’s roaming the CES floor, be sure to check out — look out for Terry and chat him up. Thanks a lot.

Terry Fong:Sure. My pleasure.

[End]